Fast-SCNN

A PyTorch implementation of Fast-SCNN, based on the paper "Fast-SCNN: Fast Semantic Segmentation Network" by Rudra PK Poudel, Stephan Liwicki and Roberto Cipolla.

Installation

- Python 3.x. Anaconda3 is recommended.

- PyTorch 1.0. Install PyTorch by selecting your environment on the PyTorch website and running the matching command, for example:

conda install pytorch torchvision cudatoolkit=9.0 -c pytorch

- Clone this repository.

- Download the dataset by following the instructions below.

- Note: For training, we currently support Cityscapes and plan to add VOC and ADE20K.

Datasets

- You can download Cityscapes from the official Cityscapes website. Note: please download leftImg8bit_trainvaltest.zip (11GB) and gtFine_trainvaltest.zip (241MB).
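Training assumes the data lives in `./datasets/citys`. As a quick sanity check after unzipping, the sketch below pairs every image with its fine annotation. It assumes the standard Cityscapes layout produced by extracting both archives into that directory (`leftImg8bit/<split>/<city>/*_leftImg8bit.png` and `gtFine/<split>/<city>/*_gtFine_labelIds.png`); the helper `pair_cityscapes_files` is hypothetical and is not part of this repository's code.

```python
import glob
import os


def pair_cityscapes_files(root, split="train"):
    """Return (image_path, label_path) pairs for one Cityscapes split.

    Hypothetical helper. Assumes both archives were extracted under `root`:
      root/leftImg8bit/<split>/<city>/<frame>_leftImg8bit.png
      root/gtFine/<split>/<city>/<frame>_gtFine_labelIds.png
    """
    pattern = os.path.join(root, "leftImg8bit", split, "*", "*_leftImg8bit.png")
    pairs = []
    for img_path in sorted(glob.glob(pattern)):
        city = os.path.basename(os.path.dirname(img_path))
        # Drop the image suffix to get the shared frame id,
        # e.g. "berlin_000000_000019".
        frame = os.path.basename(img_path)[: -len("_leftImg8bit.png")]
        label_path = os.path.join(
            root, "gtFine", split, city, frame + "_gtFine_labelIds.png"
        )
        if os.path.isfile(label_path):
            pairs.append((img_path, label_path))
        else:
            # A missing annotation usually means an incomplete download.
            print(f"warning: no label for {img_path}")
    return pairs


if __name__ == "__main__":
    found = pair_cityscapes_files("./datasets/citys", "train")
    print(f"found {len(found)} image/label pairs")
```

With complete archives, the train and val splits should yield 2975 and 500 pairs respectively.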

Training-Fast-SCNN

- By default, we assume you have downloaded the Cityscapes dataset into the `./datasets/citys` directory.

- To train Fast-SCNN, run the train script, passing the parameters listed in `train.py` as flags or changing them manually in the script:

python train.py --model fast_scnn --dataset citys

Evaluation

To evaluate a trained network:

python eval.py
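The Results section reports mIoU and pixAcc. For reference, the sketch below shows the usual way both metrics are derived from a confusion matrix accumulated over all evaluated pixels: pixAcc is correctly labeled pixels over all labeled pixels, and per-class IoU is TP / (TP + FP + FN). It assumes label 255 marks ignored pixels (the common Cityscapes convention) and skips classes absent from both prediction and ground truth; it is an illustration, not the code used by `eval.py`, and other implementations may treat absent classes differently.

```python
def update_confusion(conf, pred, target, ignore_index=255):
    """Accumulate a square confusion matrix in place.

    conf[t][p] counts pixels whose ground truth is t and prediction is p.
    pred and target are flat sequences of class ids of equal length.
    """
    for p, t in zip(pred, target):
        if t == ignore_index:
            continue  # unlabeled pixels count toward neither metric
        conf[t][p] += 1


def pixel_accuracy(conf):
    correct = sum(conf[i][i] for i in range(len(conf)))
    labeled = sum(sum(row) for row in conf)
    return correct / labeled if labeled else 0.0


def mean_iou(conf):
    n = len(conf)
    ious = []
    for c in range(n):
        tp = conf[c][c]
        fn = sum(conf[c]) - tp                         # class c predicted as another class
        fp = sum(conf[r][c] for r in range(n)) - tp    # other classes predicted as c
        union = tp + fp + fn
        if union > 0:  # skip classes absent from both prediction and ground truth
            ious.append(tp / union)
    return sum(ious) / len(ious) if ious else 0.0


if __name__ == "__main__":
    conf = [[0] * 3 for _ in range(3)]
    update_confusion(conf, pred=[0, 1, 1, 1, 2, 0], target=[0, 0, 1, 1, 2, 255])
    print(pixel_accuracy(conf))        # 0.8
    print(round(mean_iou(conf), 4))    # 0.7222
```

Accumulating one confusion matrix over the whole validation set, rather than averaging per-image scores, keeps rare classes from being over-weighted by images where they cover only a few pixels.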

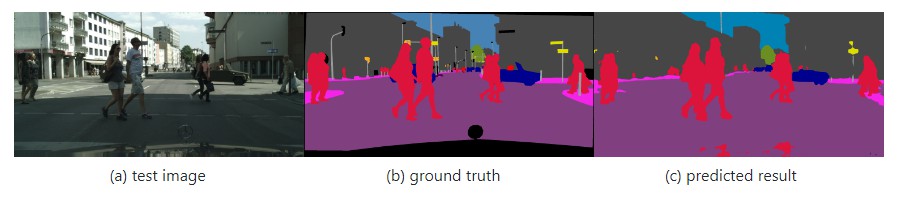

Demo

Running a demo:

python demo.py --model fast_scnn --input-pic './png/berlin_000000_000019_leftImg8bit.png'

Results

| Method | Dataset | crop_size | mIoU | pixAcc |
|---|---|---|---|---|
| Fast-SCNN (paper) | cityscapes | | | |
| Fast-SCNN (ours) | cityscapes | 768 | 54.84% | 92.37% |

Note: Our result is based on crop_size=768, which differs from the setting used in the paper.
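To make the crop_size setting concrete: a Cityscapes frame is 1024 pixels high and 2048 wide, so a single 768 x 768 training crop covers 768 * 768 / (1024 * 2048) = 28.125% of the image. The sketch below shows a plain random square crop that applies the same window to an image and its label map so pixels stay aligned. It is an illustrative assumption, not this repository's transform, which may also rescale or pad before cropping; `random_crop_box` and `crop` are hypothetical names.

```python
import random


def random_crop_box(height, width, crop_size, rng=random):
    """Pick a crop_size x crop_size window inside a height x width image.

    Returns (top, left, bottom, right). Reuse the same box for the image
    and its label map so that every pixel keeps its annotation.
    """
    if height < crop_size or width < crop_size:
        raise ValueError("image is smaller than the crop; pad it first")
    # randint is inclusive on both ends, so the window never leaves the image.
    top = rng.randint(0, height - crop_size)
    left = rng.randint(0, width - crop_size)
    return top, left, top + crop_size, left + crop_size


def crop(grid, box):
    """Apply a box to a 2-D grid stored as a list of rows."""
    top, left, bottom, right = box
    return [row[left:right] for row in grid[top:bottom]]


if __name__ == "__main__":
    rng = random.Random(0)
    box = random_crop_box(1024, 2048, 768, rng)  # Cityscapes frame, crop_size=768
    print(box)
```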

TODO

- [ ] add distributed training

- [ ] Support for the VOC and ADE20K datasets

- [ ] Support TensorBoard

- [x] save the best model

- [x] add OHEM loss
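OHEM (online hard example mining) loss focuses training on the pixels the network finds hardest. One common formulation for segmentation, sketched below in plain Python over a flat list of pixels, keeps pixels whose predicted probability for the true class is at most a threshold, raises that threshold if needed so at least `min_kept` pixels survive, and averages cross-entropy over the kept pixels. This is an illustration of the general technique under those assumptions, not this repository's loss; the default values are illustrative, and a PyTorch version would work on softmax outputs and per-pixel losses from `torch.nn.functional.cross_entropy(..., reduction="none")`.

```python
import math


def ohem_cross_entropy(probs, targets, thresh=0.7, min_kept=1, ignore_index=255):
    """OHEM-style cross-entropy over a flat list of pixels.

    probs[i] is the list of softmax probabilities for pixel i and targets[i]
    its ground-truth class id. Defaults are illustrative, not tuned values.
    """
    # Probability assigned to the true class for every labeled pixel.
    true_probs = [p[t] for p, t in zip(probs, targets) if t != ignore_index]
    if not true_probs:
        return 0.0
    true_probs.sort()  # hardest (least confident) pixels first
    # Raise the threshold if fewer than min_kept pixels fall below it.
    k = min(min_kept, len(true_probs))
    threshold = thresh
    if k > 0:
        threshold = max(thresh, true_probs[k - 1])
    kept = [q for q in true_probs if q <= threshold]
    if not kept:
        return 0.0
    # Mean negative log-likelihood of the kept (hard) pixels; clamp avoids log(0).
    return -sum(math.log(max(q, 1e-12)) for q in kept) / len(kept)


if __name__ == "__main__":
    probs = [[0.9, 0.1], [0.2, 0.8], [0.4, 0.6], [0.5, 0.5]]
    targets = [0, 1, 0, 1]
    print(round(ohem_cross_entropy(probs, targets, min_kept=1), 4))  # 0.8047
    print(round(ohem_cross_entropy(probs, targets, min_kept=3), 4))  # 0.6109
```

With `min_kept=1` only the two pixels below 0.7 contribute; with `min_kept=3` the threshold rises to 0.8 so a third pixel is included.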