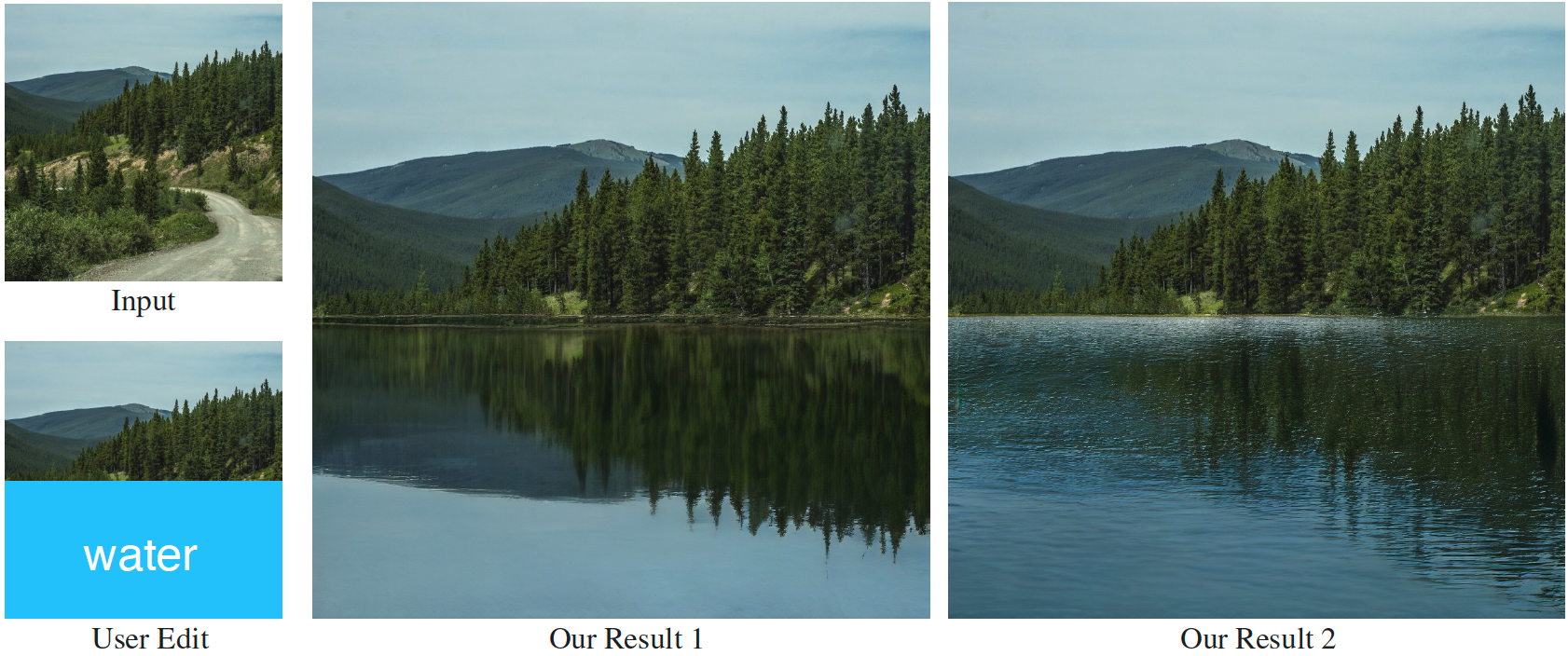

ASSET: Autoregressive Semantic Scene Editing with Transformers at High Resolutions

Difan Liu, Sandesh Shetty, Tobias Hinz, Matthew Fisher, Richard Zhang, Taesung Park, Evangelos Kalogerakis

UMass Amherst and Adobe Research

ACM Transactions on Graphics (SIGGRAPH 2022)

Project page | Paper

Requirements

- The code has been tested with PyTorch 1.7.1 (GPU version) and Python 3.6.12.

- Python packages: OpenCV (4.5.1), pytorch-lightning (1.4.2), omegaconf (2.0.6), einops (0.3.0), PyYAML (5.4.1), test-tube (0.7.5), albumentations (1.0.0), transformers (4.10.0).

Testing

Flickr-Landscape

Download the pretrained model, set the config keys in configs/landscape_test.yaml to the path of the pretrained model, then run:

python test.py -t configs/landscape_test.yaml -i data_test/landscape_input.jpg -s data_test/landscape_seg.png -m data_test/landscape_mask.png -c waterother -n water_reflection

COCO-Stuff

Download the pretrained model, set the config keys in configs/coco_test.yaml to the path of the pretrained model, then run:

python test.py -t configs/coco_test.yaml -i data_test/coco_input.png -s data_test/coco_seg.png -m data_test/coco_mask.png -c pizza -n coco_pizza

Training

Datasets

- The Flickr-Landscape dataset is not sharable due to license issues. But the images were scraped from Mountains Anywhere and Waterfalls Around the World, using the Python wrapper for the Flickr API. Please contact Taesung Park with title “Flickr Dataset for Swapping Autoencoder” for more details. We include an example from the Flickr-Landscape dataset in data/landscape so you can run the training without preparing the dataset.

- For the COCO-Stuff dataset, create a symlink data/coco/imgs containing the images from the 2017 split in train2017 and val2017, and their annotations in data/coco/annotations. Files can be obtained from the COCO webpage. In addition, we use the Stuff+thing PNG-style annotations on COCO 2017 trainval annotations from COCO-Stuff, which should be placed under data/coco/cocostuffthings.
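Before launching COCO-Stuff training, it can help to confirm that the directories named above are in place. The following is a minimal sketch, not part of the repository: the helper name `missing_coco_dirs` is hypothetical, and it assumes that train2017 and val2017 sit directly under data/coco/imgs.

```python
from pathlib import Path

# Directory names taken from the dataset notes above.
# Assumption: train2017 and val2017 live directly under data/coco/imgs.
EXPECTED_COCO_DIRS = [
    "imgs/train2017",
    "imgs/val2017",
    "annotations",
    "cocostuffthings",
]


def missing_coco_dirs(root="data/coco"):
    """Return the expected sub-directories that do not exist under `root`.

    Path.is_dir() follows symlinks, so a symlinked data/coco/imgs is accepted.
    """
    root = Path(root)
    return [d for d in EXPECTED_COCO_DIRS if not (root / d).is_dir()]


if __name__ == "__main__":
    missing = missing_coco_dirs()
    if missing:
        print("Missing under data/coco:", ", ".join(missing))
    else:
        print("COCO-Stuff layout looks complete.")
```

Running the script from the repository root lists any missing directory; an empty result only means the folders exist, not that their contents are complete.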

Flickr-Landscape

Train a VQGAN with:

python main.py --base configs/landscape_vqgan.yaml -t True --gpus -1 --num_gpus 8 --save_dir <path to ckpt>

In configs/landscape_guiding_transformer.yaml, set the config key model.params.first_stage_config.params.ckpt_path to the pretrained VQGAN checkpoint and set the config key model.params.cond_stage_config.params.ckpt_path to landscape_VQ_seg_model.ckpt (see Taming Transformers for the training of VQ_seg_model). Then train the guiding transformer at 256 resolution with:

python main.py --base configs/landscape_guiding_transformer.yaml -t True --gpus -1 --num_gpus 8 --user_lr 3.24e-5 --save_dir <path to ckpt>
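A dotted config key such as model.params.first_stage_config.params.ckpt_path names a path through the nested mappings of the YAML file. The sketch below illustrates that reading on a plain Python dictionary. The helper `set_dotted_key`, the skeleton config, and the path `checkpoints/vqgan.ckpt` are all illustrative assumptions; in practice the YAML file is edited directly or through a config library.

```python
def set_dotted_key(config, dotted_key, value):
    """Set config[a][b][c] = value for dotted_key 'a.b.c', creating missing levels."""
    keys = dotted_key.split(".")
    node = config
    for key in keys[:-1]:
        # Descend one level, creating an empty mapping if the level is absent.
        node = node.setdefault(key, {})
    node[keys[-1]] = value
    return config


# Illustrative skeleton only; the real YAML file contains many more entries.
config = {"model": {"params": {"first_stage_config": {"params": {"ckpt_path": None}}}}}

# "checkpoints/vqgan.ckpt" is a placeholder for wherever the VQGAN checkpoint was saved.
set_dotted_key(config, "model.params.first_stage_config.params.ckpt_path", "checkpoints/vqgan.ckpt")
set_dotted_key(config, "model.params.cond_stage_config.params.ckpt_path", "landscape_VQ_seg_model.ckpt")
```

The same reading applies to the other keys mentioned in this section, for example model.params.guiding_ckpt_path.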

In configs/landscape_SGA_transformer_512.yaml, set both config keys model.params.guiding_ckpt_path and model.params.ckpt_path to the pretrained guiding transformer checkpoint. Then finetune the SGA transformer at 512 resolution with:

python main.py --base configs/landscape_SGA_transformer_512.yaml -t True --gpus -1 --num_gpus 8 --user_lr 1.25e-5 --save_dir <path to ckpt>

In configs/landscape_SGA_transformer_1024.yaml, set the config key model.params.guiding_ckpt_path to the pretrained guiding transformer checkpoint and set the config key model.params.ckpt_path to the SGA transformer finetuned at 512 resolution. Then finetune the SGA transformer at 1024 resolution with:

python main.py --base configs/landscape_SGA_transformer_1024.yaml -t True --gpus -1 --num_gpus 8 --user_lr 5e-6 --save_iters 4000 --val_iters 16000 --accumulate_bs 4 --save_dir <path to ckpt>
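The four Flickr-Landscape stages above differ only in their config file and a few extra flags, so they can be summarized as a small table. The sketch below rebuilds the commands from such a table. The names `STAGES` and `build_command` are hypothetical, the flag values are copied from the commands above, and the save directory is left as the same placeholder. It only prints the commands, because the checkpoint paths in each config still have to be updated by hand between stages.

```python
# Placeholder, exactly as in the commands above; replace with a real directory.
SAVE_DIR = "<path to ckpt>"

# (config file, extra flags) for each stage, in training order.
STAGES = [
    ("configs/landscape_vqgan.yaml", []),
    ("configs/landscape_guiding_transformer.yaml", ["--user_lr", "3.24e-5"]),
    ("configs/landscape_SGA_transformer_512.yaml", ["--user_lr", "1.25e-5"]),
    (
        "configs/landscape_SGA_transformer_1024.yaml",
        ["--user_lr", "5e-6", "--save_iters", "4000",
         "--val_iters", "16000", "--accumulate_bs", "4"],
    ),
]


def build_command(config, extra_args, num_gpus=8, save_dir=SAVE_DIR):
    """Assemble the main.py argument list for one training stage."""
    return [
        "python", "main.py", "--base", config, "-t", "True",
        "--gpus", "-1", "--num_gpus", str(num_gpus),
        *extra_args, "--save_dir", save_dir,
    ]


for config, extra in STAGES:
    print(" ".join(build_command(config, extra)))
```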

COCO-Stuff

Train a VQGAN with:

python main.py --base configs/coco_vqgan.yaml -t True --gpus -1 --num_gpus 2 --save_dir <path to ckpt>

In configs/coco_guiding_transformer.yaml, set the config key model.params.first_stage_config.params.ckpt_path to the pretrained VQGAN checkpoint and set the config key model.params.cond_stage_config.params.ckpt_path to coco_VQ_seg_model.ckpt. Then train the guiding transformer at 256 resolution with:

python main.py --base configs/coco_guiding_transformer.yaml -t True --gpus -1 --num_gpus 8 --user_lr 3.24e-5 --save_dir <path to ckpt>

In configs/coco_SGA_transformer_512.yaml, set both config keys model.params.guiding_ckpt_path and model.params.ckpt_path to the pretrained guiding transformer checkpoint. Then finetune the SGA transformer at 512 resolution with:

python main.py --base configs/coco_SGA_transformer_512.yaml -t True --gpus -1 --num_gpus 8 --user_lr 1.25e-5 --save_dir <path to ckpt>

BibTex:

@article{liu2022asset,
  author = {Liu, Difan and Shetty, Sandesh and Hinz, Tobias and Fisher, Matthew and Zhang, Richard and Park, Taesung and Kalogerakis, Evangelos},
  title = {ASSET: Autoregressive Semantic Scene Editing with Transformers at High Resolutions},
  year = {2022},
  volume = {41},
  number = {4},
  journal = {ACM Trans. Graph.},
}

Acknowledgment

Our code is developed based on Taming Transformers.

Contact

To ask questions, please email.