AdaDSR

PyTorch implementation of Deep Adaptive Inference Networks for Single Image Super-Resolution.

Results

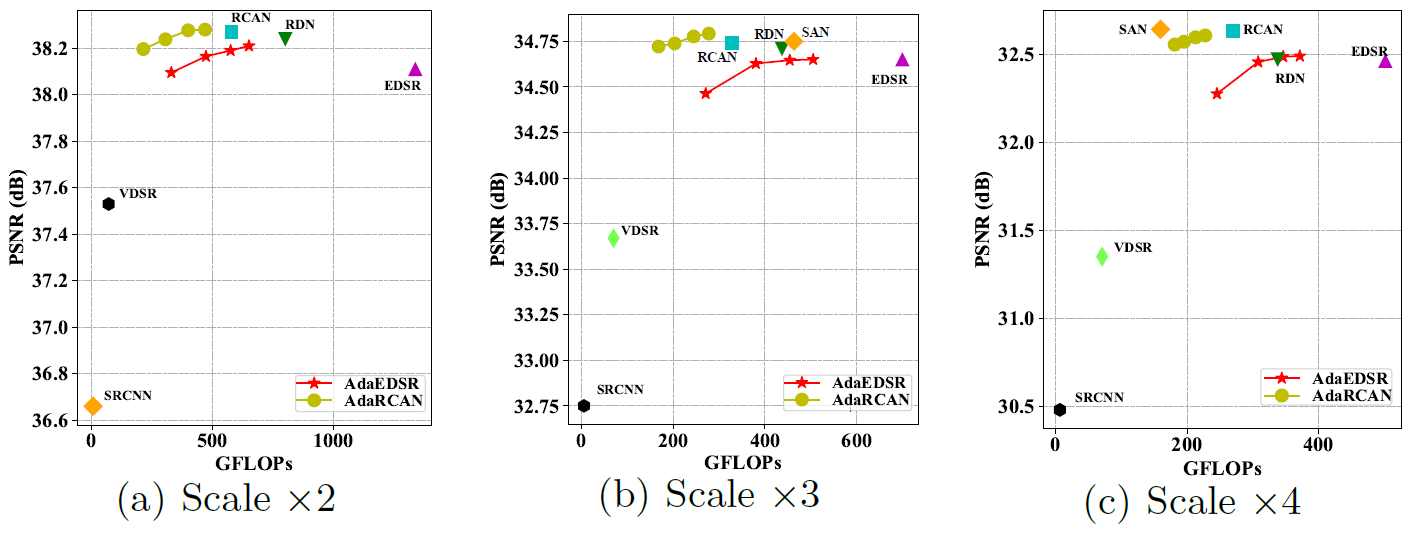

SISR results on Set5. For more results, please refer to the paper.

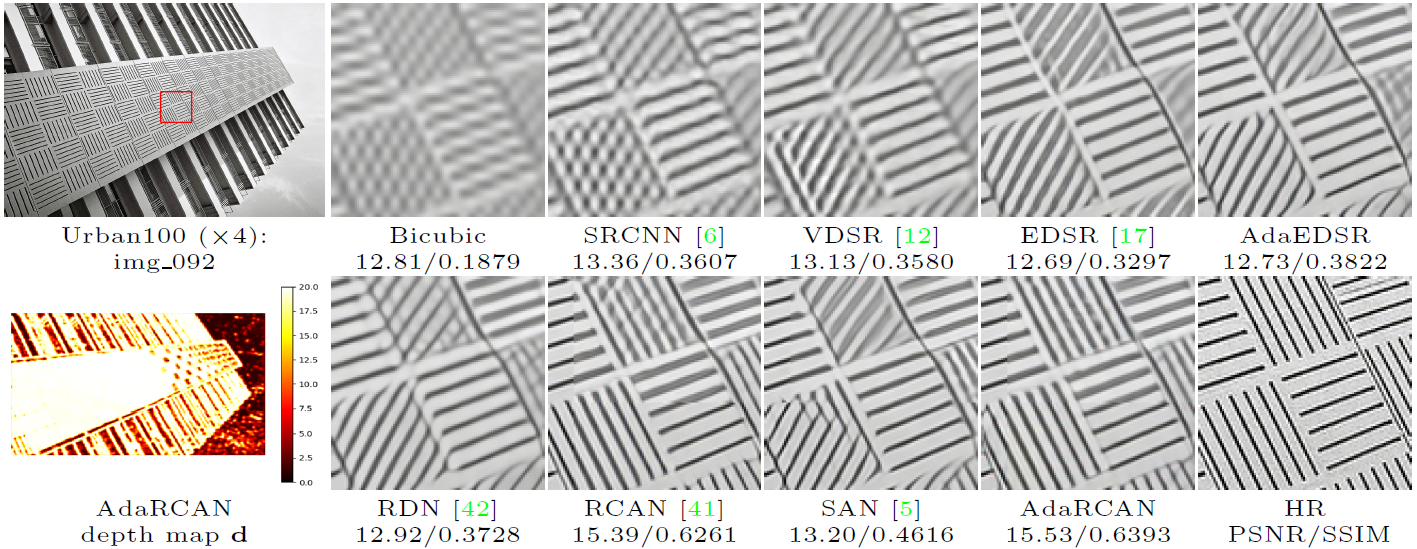

An exemplar visualization of the SR results and depth map.

Preparation

- Prerequisites

- PyTorch (v1.2)

- Python 3.x with OpenCV, NumPy, Pillow, tqdm, and matplotlib; tensorboardX is used for visualization

- [optional] Make sure that matlab is in your PATH if you want to calculate the PSNR/SSIM indices and use the argument --matlab True

- [Sparse Conv] cd masked_conv2d; python setup.py install. Note that we currently provide a version modified from open-mmlab/mmdetection, which supports inference with 3x3 sparse convolution layers. We will provide a more general version in the future.

- Dataset

- Training

- Testing

- Models

- Download the pre-trained models (~2.2GB) from Google Drive or Baidu Yun (cyps), and put the two folders in the root folder.
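
Before running any commands, it can help to confirm that the prerequisites listed above are actually available. The following is a minimal sketch and not part of the repository: the helper `check_prerequisites` is hypothetical, and the module names assume the usual import names (`cv2` for OpenCV, `PIL` for Pillow). It only checks that each module can be located and that `matlab` is on the PATH; it does not verify versions.

```python
import importlib.util
import shutil

# Usual import names for the packages listed under Prerequisites
# (assumption: OpenCV imports as cv2, Pillow as PIL).
REQUIRED_MODULES = ["torch", "cv2", "numpy", "PIL", "tqdm", "matplotlib", "tensorboardX"]


def check_prerequisites(modules=REQUIRED_MODULES, executables=("matlab",)):
    """Return a dict mapping each module/executable name to True if it is found."""
    status = {}
    for name in modules:
        # find_spec returns None when a top-level module cannot be located.
        status[name] = importlib.util.find_spec(name) is not None
    for exe in executables:
        # shutil.which returns None when the executable is not on PATH.
        status[exe] = shutil.which(exe) is not None
    return status


if __name__ == "__main__":
    for name, found in check_prerequisites().items():
        print(f"{name:14s} {'ok' if found else 'MISSING'}")
```

A missing `matlab` entry only matters if you plan to pass --matlab True.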

Quick Start

Some example commands are shown here as an introduction; more scripts are available in the scripts folder.

Testing

- AdaEDSR

python test.py --model adaedsr --name adaedsr_x2 --scale 2 --load_path ./ckpt/adaedsr_x2/AdaEDSR_model.pth --dataset_name set5 set14 b100 urban100 manga109 --depth 32 --chop True --sparse_conv True --matlab True --gpu_ids 0

- AdaRCAN

python test.py --model adarcan --name adarcan_x2 --scale 2 --load_path ./ckpt/adarcan_x2/AdaRCAN_model.pth --dataset_name set5 set14 b100 urban100 manga109 --depth 20 --chop True --sparse_conv True --matlab True --gpu_ids 0
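
The two test commands above differ only in the model name, checkpoint path, and depth, so they can be assembled from a small table. The sketch below is a hypothetical convenience wrapper, not a script shipped with the repository: `build_test_command` and `run_test` are illustrative names, and every flag and value is copied from the commands above rather than from the options file.

```python
import shlex
import subprocess

# Benchmark names as they appear in the example commands.
BENCHMARKS = ["set5", "set14", "b100", "urban100", "manga109"]

# Per-model values copied from the two example commands (x2 only).
MODELS = {
    "adaedsr": {"name": "adaedsr_x2", "load_path": "./ckpt/adaedsr_x2/AdaEDSR_model.pth", "depth": 32},
    "adarcan": {"name": "adarcan_x2", "load_path": "./ckpt/adarcan_x2/AdaRCAN_model.pth", "depth": 20},
}


def build_test_command(model, gpu_ids="0"):
    """Return the argv list for one of the example x2 test commands."""
    cfg = MODELS[model]
    return [
        "python", "test.py",
        "--model", model,
        "--name", cfg["name"],
        "--scale", "2",
        "--load_path", cfg["load_path"],
        "--dataset_name", *BENCHMARKS,
        "--depth", str(cfg["depth"]),
        "--chop", "True",
        "--sparse_conv", "True",
        "--matlab", "True",  # requires matlab in PATH, as noted under Prerequisites
        "--gpu_ids", gpu_ids,
    ]


def run_test(model, gpu_ids="0"):
    """Run the command from the repository root; raises if test.py exits non-zero."""
    subprocess.run(build_test_command(model, gpu_ids), check=True)


if __name__ == "__main__":
    # Print the commands instead of running them, so they can be inspected first.
    for model in MODELS:
        print(shlex.join(build_test_command(model)))
```

Printing first and calling `run_test` only afterwards keeps the wrapper safe to try outside the repository root.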

Training

- AdaEDSR (load a pre-trained EDSR model for more stable training; ${scale} in the path is a shell variable that must be set beforehand to match --scale, e.g. scale=2)

python train.py --model adaedsr --name adaedsr_x2 --scale 2 --load_path ./pretrained/EDSR_official_32_x${scale}.pth

- AdaRCAN (load a pre-trained RCAN model for more stable training)

python train.py --model adarcan --name adarcan_x2 --scale 2 --load_path ./pretrained/RCAN_BIX2.pth

Note

- You should set the data root with --dataroot DIV2K_ROOT (train) or --dataroot BENCHMARK_ROOT (test), or you can add your own path to the rootlist of div2k_dataset or benchmark_dataset.

- You can specify which GPU to use with --gpu_ids, e.g., --gpu_ids 0,1, --gpu_ids 3, or --gpu_ids -1 (for CPU mode). By default, all GPUs are used.

- You can refer to options for more arguments.
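
To illustrate the --gpu_ids convention described above, here is a minimal sketch of how such a comma-separated string could be turned into a list of device indices. The function `parse_gpu_ids` is hypothetical and the repository's options code may handle this differently; in particular, the sketch does not model the default of using all GPUs when the flag is omitted.

```python
def parse_gpu_ids(value):
    """Parse a comma-separated --gpu_ids string into a list of non-negative ints.

    Negative entries are dropped, so "-1" yields an empty list,
    which this sketch treats as CPU mode.
    """
    ids = []
    for token in value.split(","):
        token = token.strip()
        if not token:
            continue  # tolerate stray commas such as "0,"
        gpu_id = int(token)
        if gpu_id >= 0:
            ids.append(gpu_id)
    return ids


if __name__ == "__main__":
    print(parse_gpu_ids("0,1"))  # [0, 1]
    print(parse_gpu_ids("3"))    # [3]
    print(parse_gpu_ids("-1"))   # []
```

With PyTorch, a non-empty result would typically select a CUDA device for the first index and an empty one would fall back to the CPU.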

Citation

If you find AdaDSR useful in your research, please consider citing:

@article{AdaDSR,

title={Deep Adaptive Inference Networks for Single Image Super-Resolution},

author={Liu, Ming and Zhang, Zhilu and Hou, Liya and Zuo, Wangmeng and Zhang, Lei},

journal={arXiv preprint arXiv:2004.03915},

year={2020}

}

Acknowledgement

This repo is built upon the framework of CycleGAN, and we borrow some code from DPSR, mmdetection, EDSR, RCAN and SAN. We thank the authors for their excellent work!