RAdam-Tensorflow

Simple Tensorflow implementation of "On The Variance Of The Adaptive Learning Rate And Beyond".

Usage

from RAdam import RAdamOptimizer

train_op = RAdamOptimizer(learning_rate=0.001, beta1=0.9, beta2=0.999, weight_decay=0.0).minimize(loss)

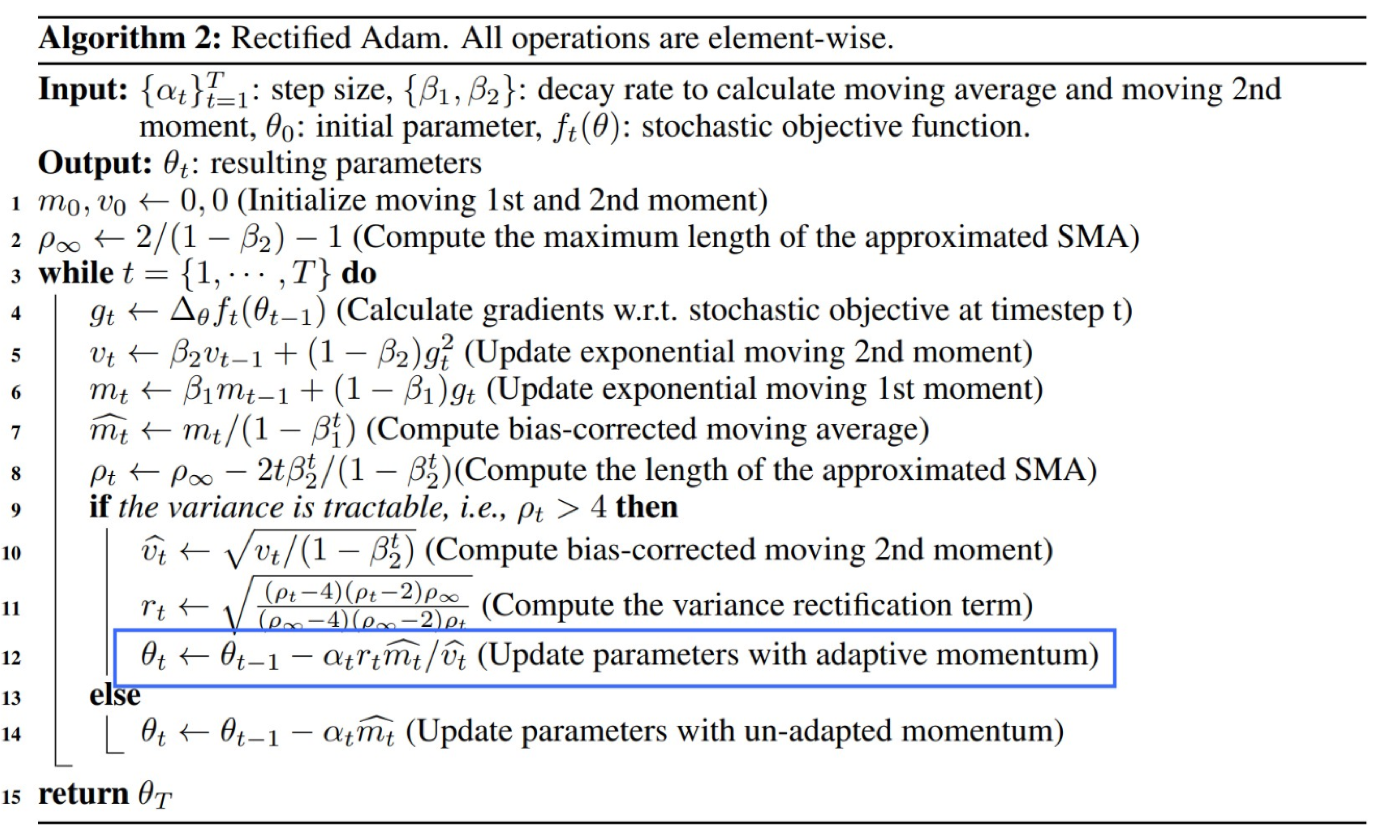

Algorithm