FAIR Self-Supervision Benchmark

This code provides various benchmark (and legacy) tasks for evaluating the quality of visual representations learned by various self-supervision approaches. It corresponds to our work on Scaling and Benchmarking Self-Supervised Visual Representation Learning. The code is written in Python and uses the Caffe2 frontend as available in PyTorch 1.0. We hope that this benchmark release will provide a consistent evaluation strategy that makes it easy to measure progress in self-supervision.

Introduction

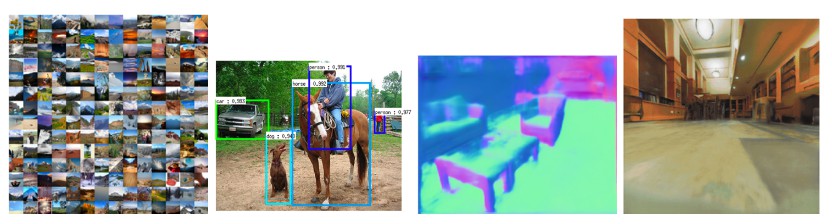

The goal of fair_self_supervision_benchmark is to standardize the methodology for evaluating the quality of visual representations learned by various self-supervision approaches. It also provides evaluation on a variety of tasks, as follows:

Benchmark tasks: The benchmark tasks are based on the principle that a good representation (1) transfers to many different tasks and (2) transfers with limited supervision and limited fine-tuning. The tasks are as follows:

- Image Classification

- Low-Shot Image Classification

- Object Detection on VOC07 and VOC07+12 with frozen backbone for detectors:

- Surface Normal Estimation

- Visual Navigation in Gibson Environment
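The idea behind "limited supervision and limited fine-tuning" can be illustrated with a small, self-contained sketch. It is not the benchmark's code: the helper names (`sample_low_shot`, `fit_linear_probe`, `accuracy`) are hypothetical, the features are synthetic stand-ins for the outputs of a frozen backbone, and the ridge-regression classifier is an assumption chosen only because it fits in a few lines of NumPy.

```python
import numpy as np

def sample_low_shot(labels, k, rng):
    """Pick k training indices per class (the low-shot subset)."""
    idx = []
    for c in np.unique(labels):
        class_idx = np.flatnonzero(labels == c)
        idx.extend(rng.choice(class_idx, size=k, replace=False))
    return np.array(idx)

def fit_linear_probe(features, labels, num_classes, l2=1.0):
    """Fit a linear classifier on fixed features via closed-form ridge
    regression onto one-hot targets. The features are never updated,
    which mimics evaluating a frozen backbone."""
    targets = np.eye(num_classes)[labels]
    dim = features.shape[1]
    gram = features.T @ features + l2 * np.eye(dim)
    return np.linalg.solve(gram, features.T @ targets)

def accuracy(weights, features, labels):
    """Top-1 accuracy of the linear classifier."""
    predictions = (features @ weights).argmax(axis=1)
    return float(np.mean(predictions == labels))

# Synthetic stand-in for features extracted from a frozen network (assumption).
rng = np.random.default_rng(0)
num_classes, dim = 5, 16
centers = rng.normal(size=(num_classes, dim)) * 3.0
labels = np.repeat(np.arange(num_classes), 40)
features = centers[labels] + rng.normal(size=(labels.size, dim))

# Train on k = 4 labeled examples per class, evaluate on everything else.
train_idx = sample_low_shot(labels, k=4, rng=rng)
test_mask = np.ones(labels.size, dtype=bool)
test_mask[train_idx] = False

W = fit_linear_probe(features[train_idx], labels[train_idx], num_classes)
acc = accuracy(W, features[test_mask], labels[test_mask])
print(f"low-shot accuracy with frozen features: {acc:.3f}")
```

Varying `k` in this sketch shows how accuracy changes with the amount of supervision while the representation itself stays fixed.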

These Benchmark tasks use the network architectures:

Legacy tasks: We also classify some commonly used evaluation tasks as legacy tasks, for the reasons given in Section 7 of the paper. The tasks are as follows:

- ImageNet-1K classification task

- VOC07 full finetuning

- Object Detection on VOC07 and VOC07+12 with full tuning for detectors: