DANNet

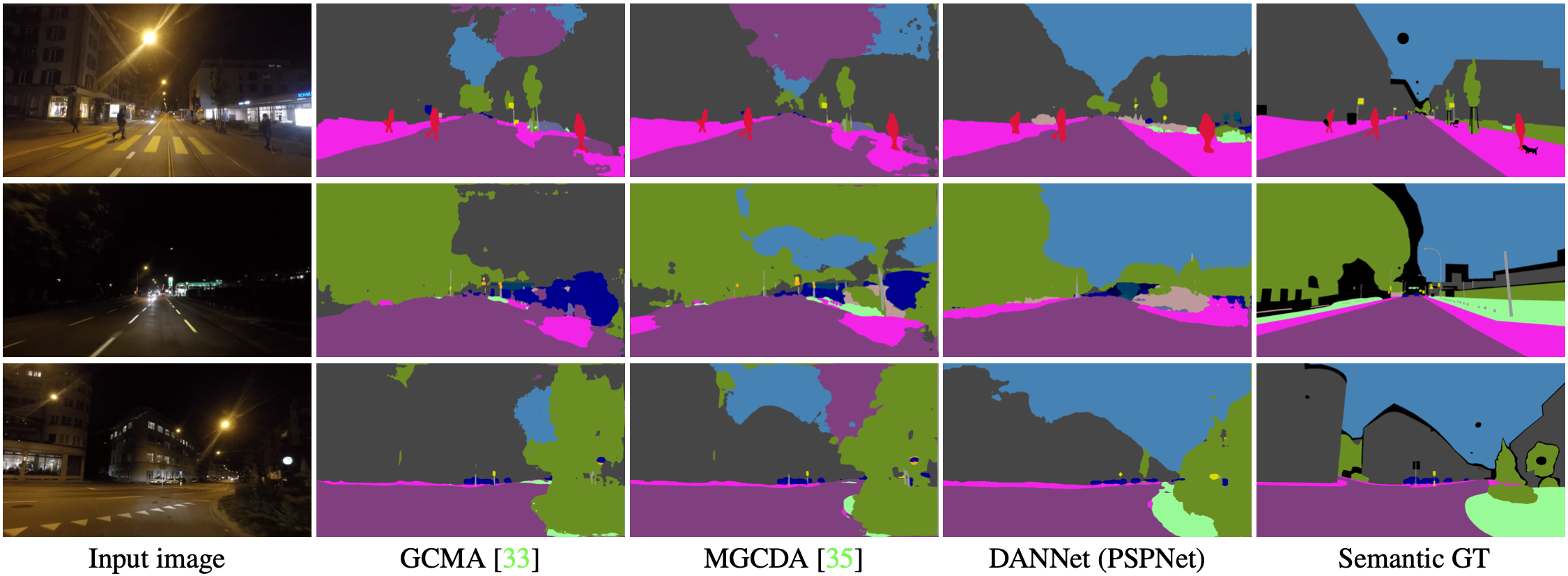

DANNet: A One-Stage Domain Adaptation Network for Unsupervised Nighttime Semantic Segmentation

Requirements

- Python 3.7

- PyTorch 1.5.0

- CUDA 10.2
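
A quick way to confirm that an environment matches the versions listed above is a small check script. The following is a minimal sketch and is not part of this repository: the helper names `version_matches` and `check_environment` are hypothetical, and the only PyTorch attributes it reads are `torch.__version__` and `torch.version.cuda`.

```python
import sys


def version_matches(installed, required):
    """Return True if `installed` starts with the components of `required`.

    Local build suffixes such as "+cu102" are ignored.
    """
    base = installed.split("+")[0]
    wanted = required.split(".")
    return base.split(".")[: len(wanted)] == wanted


def check_environment():
    """Return a list of human-readable mismatches (empty if everything matches)."""
    problems = []
    py = "{}.{}".format(*sys.version_info[:2])
    if not version_matches(py, "3.7"):
        problems.append("Python 3.7 expected, found " + py)
    try:
        import torch
    except ImportError:
        problems.append("PyTorch is not installed")
        return problems
    if not version_matches(torch.__version__, "1.5.0"):
        problems.append("PyTorch 1.5.0 expected, found " + torch.__version__)
    cuda = torch.version.cuda  # None for CPU-only builds
    if cuda is None or not version_matches(cuda, "10.2"):
        problems.append("CUDA 10.2 expected, found " + str(cuda))
    return problems


if __name__ == "__main__":
    for line in check_environment() or ["Environment matches the listed requirements."]:
        print(line)
```

Other versions may also work; the check only reports differences from the versions listed here.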

Datasets

Cityscapes: Please follow the instructions in Cityscapes to download the training set.

Dark-Zurich: Please follow the instructions in Dark-Zurich to download the training, validation, and test sets.
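
After downloading Cityscapes, it can help to verify that every training image has a matching annotation. The sketch below relies on the standard Cityscapes layout (`leftImg8bit/<split>/<city>/..._leftImg8bit.png` and `gtFine/<split>/<city>/..._gtFine_labelIds.png`). The function names are hypothetical, and which annotation files this repository actually reads is an assumption; adjust `label_suffix` if your setup differs.

```python
from pathlib import Path


def cityscapes_label_path(image_path, root, label_suffix="_gtFine_labelIds.png"):
    """Map a leftImg8bit image to its gtFine annotation under `root`."""
    image_path = Path(image_path)
    # The last three path components are <split>/<city>/<file name>.
    split, city = image_path.parts[-3], image_path.parts[-2]
    stem = image_path.name.replace("_leftImg8bit.png", "")
    return Path(root) / "gtFine" / split / city / (stem + label_suffix)


def list_training_pairs(root):
    """Return (image, label) pairs for which both files exist on disk."""
    root = Path(root)
    pairs = []
    for img in sorted((root / "leftImg8bit" / "train").glob("*/*_leftImg8bit.png")):
        lbl = cityscapes_label_path(img, root)
        if lbl.is_file():
            pairs.append((img, lbl))
    return pairs
```

Calling `len(list_training_pairs("/path/to/cityscapes"))` on a complete download should give the number of training images; a smaller count points to missing annotation files.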

Testing

If needed, you can directly download the visualization results of our method for Dark-Zurich val and Dark-Zurich test.

To reproduce the results reported in our paper (on Dark-Zurich val), please follow these steps:

Step 1: Download the [trained models](https://www.dropbox.com/s/fmlq806p2wqf311/trained_models.zip?dl=0) and put them in the root directory of the repository.

Step 2: Change the data and model paths in configs/test_config.py.

Step 3: Run "python evaluation.py".

Step 4: Run "python compute_iou.py".
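
For readers unfamiliar with the metric computed in the last step, the sketch below shows how mean intersection-over-union (mIoU) is commonly derived from a confusion matrix. It is an illustration in plain Python over flat label lists, not the code in `compute_iou.py`; the function names are hypothetical, and the ignore label 255 follows the usual Cityscapes convention.

```python
def confusion_matrix(gt, pred, num_classes, ignore_label=255):
    """Accumulate a num_classes x num_classes matrix; rows are ground truth."""
    hist = [[0] * num_classes for _ in range(num_classes)]
    for g, p in zip(gt, pred):
        if g == ignore_label:
            continue  # pixels without a valid label are skipped
        hist[g][p] += 1
    return hist


def per_class_iou(hist):
    """IoU_c = TP / (TP + FP + FN); NaN for classes that never occur."""
    n = len(hist)
    ious = []
    for c in range(n):
        tp = hist[c][c]
        fn = sum(hist[c]) - tp
        fp = sum(hist[r][c] for r in range(n)) - tp
        denom = tp + fp + fn
        ious.append(tp / denom if denom else float("nan"))
    return ious


def mean_iou(ious):
    """Average over the classes with a defined IoU."""
    valid = [v for v in ious if v == v]  # NaN is not equal to itself
    return sum(valid) / len(valid)


if __name__ == "__main__":
    gt = [0, 0, 1, 1, 255]
    pred = [0, 1, 1, 1, 0]
    ious = per_class_iou(confusion_matrix(gt, pred, num_classes=2))
    print(ious, mean_iou(ious))
```

In this toy example class 0 has IoU 1/2 and class 1 has IoU 2/3, so the mean is 7/12. A real evaluation accumulates one confusion matrix over all validation images before taking the per-class ratios.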

If you want to evaluate your methods on the test set, please visit this challenge for more details.

Training

If you want to train your own models, please follow these steps:

Step 1: Download the [pre-trained models](https://www.dropbox.com/s/3n1212kxuv82uua/pretrained_models.zip?dl=0) and put them in the root directory of the repository.

Step 2: Change the data and model paths in configs/train_config.py.

Step 3: Run "python train.py".
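
Because a wrong path in the config is the most likely cause of a failed run, a small pre-flight check before launching can save time. The following is a minimal sketch under stated assumptions: the helpers `missing_paths` and `run_step` are hypothetical and not part of this repository, and the list of paths to check is whatever you entered in the config file.

```python
import subprocess
import sys
from pathlib import Path


def missing_paths(paths):
    """Return the entries of `paths` that do not exist on disk."""
    return [str(p) for p in paths if not Path(p).exists()]


def run_step(script, required_paths=(), dry_run=False):
    """Check `required_paths`, then run `script` with the current interpreter.

    Returns the command list; with dry_run=True nothing is executed.
    """
    missing = missing_paths(required_paths)
    if missing:
        raise FileNotFoundError(
            "Missing before running {}: {}".format(script, ", ".join(missing))
        )
    cmd = [sys.executable, script]
    if not dry_run:
        subprocess.run(cmd, check=True)  # raises CalledProcessError on failure
    return cmd
```

For example, `run_step("train.py", ["configs/train_config.py", "/path/to/cityscapes"])` launches training only if both paths exist. The same helper can be used for `evaluation.py` and `compute_iou.py`.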

Acknowledgments

The code is based on AdaptSegNet, PSPNet, DeepLab-v2, and RefineNet.

Citation

If you find this work useful for your research, please cite our paper:

@InProceedings{WU_2021_CVPR,

author = {Wu, Xinyi and Wu, Zhenyao and Guo, Hao and Ju, Lili and Wang, Song},

title = {DANNet: A One-Stage Domain Adaptation Network for Unsupervised Nighttime Semantic Segmentation},

booktitle = {IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR)},

month = {June},

year = {2021}

}

Contact

- Xinyi Wu ([email protected])

- Zhenyao Wu ([email protected])