assembled-cnn

Official implementation of "Compounding the Performance Improvements of Assembled Techniques in a Convolutional Neural Network".

Abstract

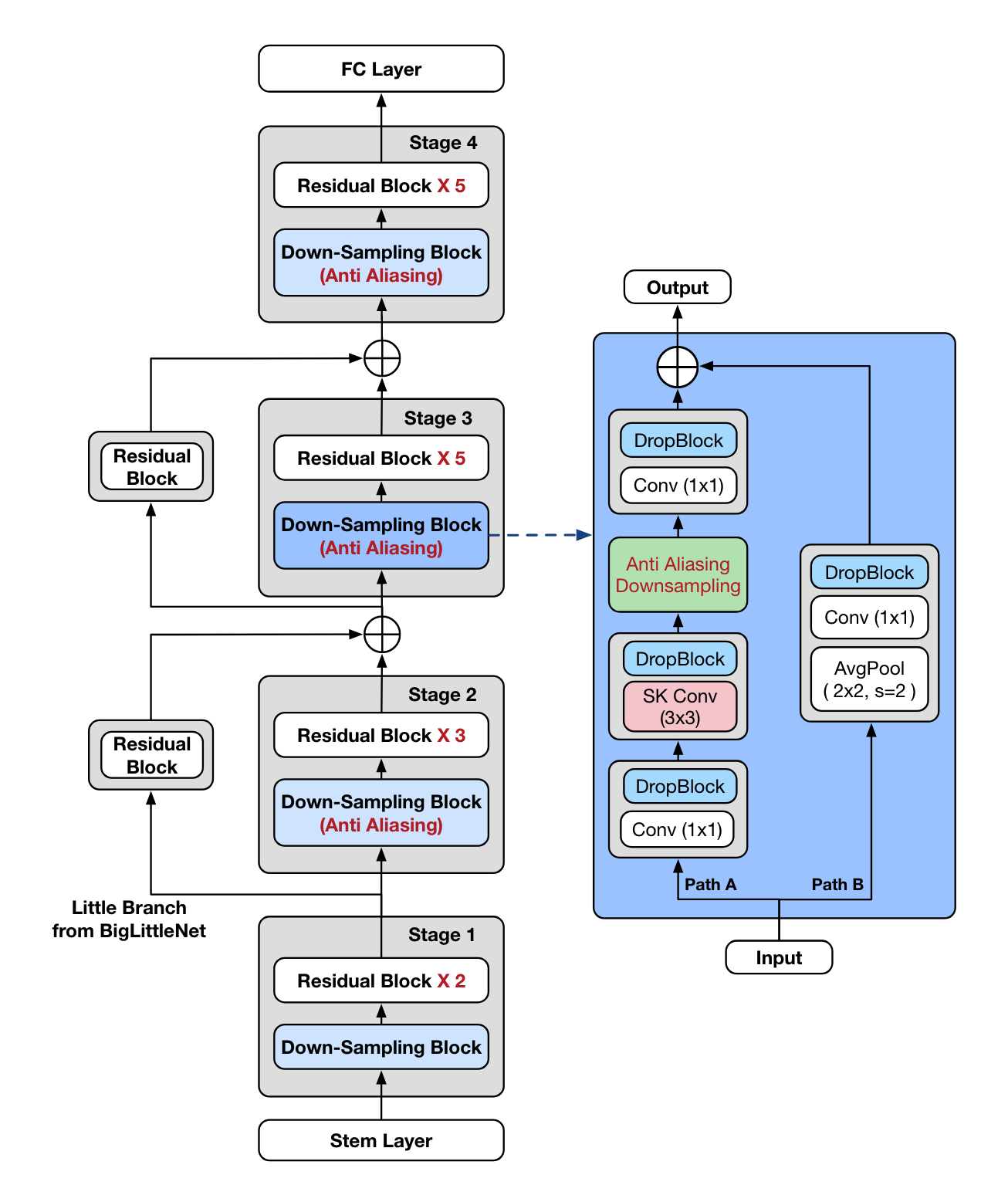

Recent studies in image classification have demonstrated a variety of techniques for improving the performance

of Convolutional Neural Networks (CNNs). However, attempts to combine existing techniques to create a practical model

are still uncommon. In this study, we carry out extensive experiments to validate that carefully assembling these techniques

and applying them to a basic CNN model in combination can improve the accuracy and robustness of the model while minimizing

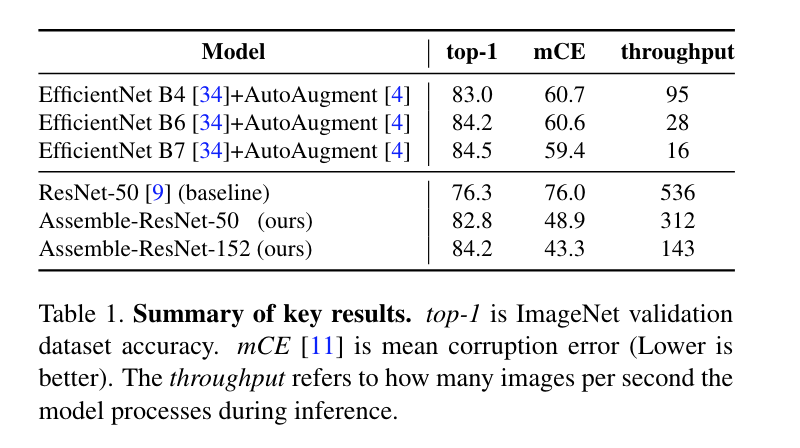

the loss of throughput. For example, our proposed ResNet-50 shows an improvement in top-1 accuracy from 76.3% to 82.78%,

and mCE improvement from 76.0% to 48.9%, on the ImageNet ILSVRC2012 validation set. With these improvements, inference

throughput only decreases from 536 to 312. The resulting model significantly outperforms state-of-the-art models with similar

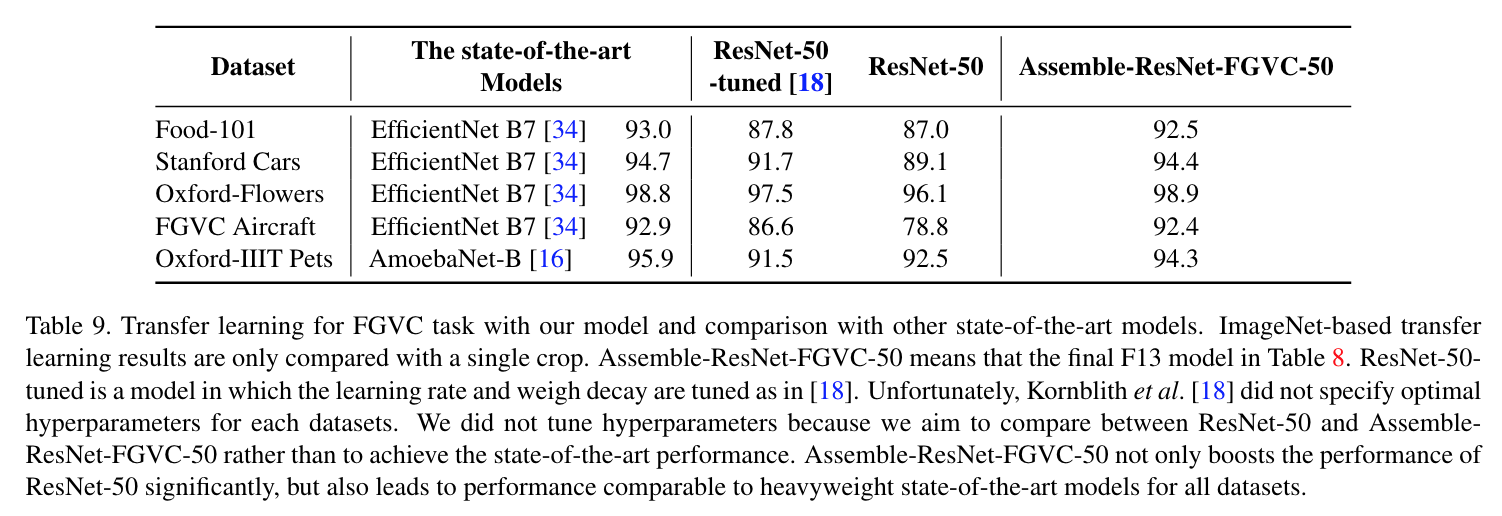

accuracy in terms of mCE and inference throughput. To verify the performance improvement in transfer learning,

fine grained classification and image retrieval tasks were tested on several open datasets and showed that the improvement

to backbone network performance boosted transfer learning performance significantly. Our approach achieved 1st place

in the iFood Competition Fine-Grained Visual Recognition at CVPR 2019.

Main Results

Summary of key results

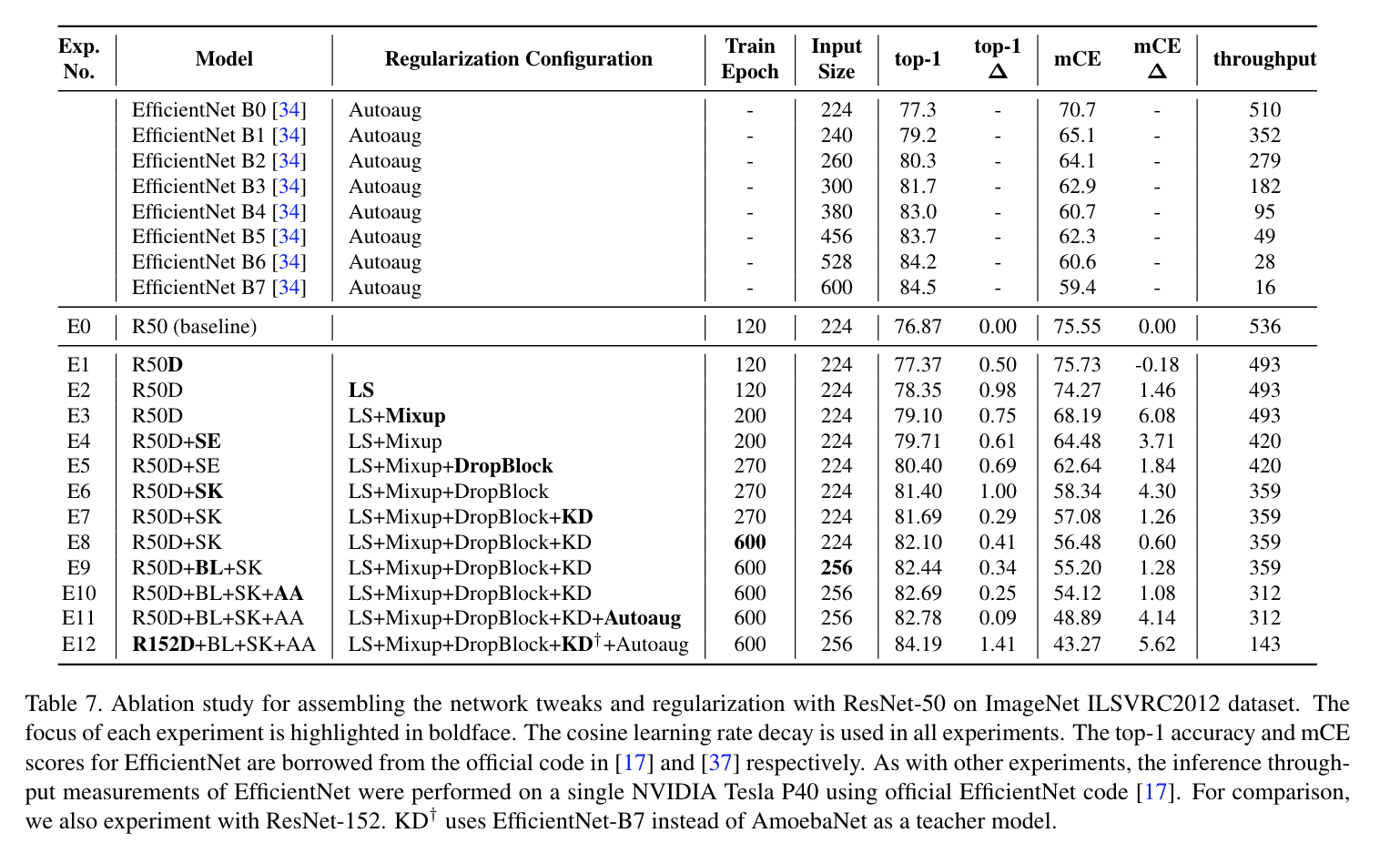

Ablation Study

Transfer learning

Honor

Using this repository, we achieved 1st place in the iFood Competition for Fine-Grained Visual Recognition at CVPR 2019.

Getting Started

- This work was tested with TensorFlow 1.14.0, CUDA 10.0, and Python 3.6.

Requirements

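pip install Pillow scikit-learn requests Wand tqdm

Note that Wand is a binding for ImageMagick, so the ImageMagick library must also be installed on the system.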

Data preparation

We assume you already have the following data:

- ImageNet 2012 raw images and TFRecords. For how to prepare this data, refer to here

- For knowledge distillation, the teacher's logits must be added to the TFRecords as described here

- For the transfer learning datasets, refer to the scripts here

- To download the pretrained models, visit here

- To build the mCE evaluation dataset, refer to here
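The data list above notes that knowledge distillation needs the teacher's logits stored in the TFRecords. As a hedged illustration of how such logits are commonly consumed, the sketch below computes a standard distillation loss: hard-label cross-entropy mixed with cross-entropy against the teacher's temperature-softened distribution. The function names, the temperature, and the mixing weight `alpha` are hypothetical, and this repository's actual loss may be formulated differently.

```python
import math
from typing import List


def softmax(logits: List[float], temperature: float = 1.0) -> List[float]:
    """Temperature-scaled softmax, shifted by the max logit for numerical stability."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def cross_entropy(target: List[float], predicted: List[float]) -> float:
    """Cross-entropy H(target, predicted) between two probability vectors."""
    return -sum(t * math.log(p) for t, p in zip(target, predicted) if t > 0)


def distillation_loss(student_logits: List[float], teacher_logits: List[float],
                      label: int, temperature: float = 2.0, alpha: float = 0.5) -> float:
    """Hypothetical KD loss: alpha * hard-label CE + (1 - alpha) * soft-label CE.

    The soft term is scaled by temperature**2, a common convention that keeps
    its gradient magnitude comparable to the hard-label term.
    """
    one_hot = [1.0 if i == label else 0.0 for i in range(len(student_logits))]
    hard = cross_entropy(one_hot, softmax(student_logits))
    soft = cross_entropy(softmax(teacher_logits, temperature),
                         softmax(student_logits, temperature))
    return alpha * hard + (1.0 - alpha) * soft * temperature ** 2


student = [2.0, 0.5, -1.0]  # hypothetical student logits for 3 classes
teacher = [3.0, 0.2, -2.0]  # hypothetical teacher logits, as read from a TFRecord
print(distillation_loss(student, teacher, label=0))
```

Raising the temperature flattens the teacher's distribution, so the student also learns from the relative scores the teacher assigns to the wrong classes.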

Reproduce Results

First, download pretrained models from here.

DATA_DIR=/path/to/imagenet2012/tfrecord
MODEL_DIR=/path/pretrained/checkpoint
CUDA_VISIBLE_DEVICES=1 python main_classification.py \
  --eval_only=True \
  --dataset_name=imagenet \
  --data_dir=${DATA_DIR} \
  --model_dir=${MODEL_DIR} \
  --preprocessing_type=imagenet_224_256a \
  --resnet_version=2 \
  --resnet_size=152 \
  --bl_alpha=1 \
  --bl_beta=2 \
  --use_sk_block=True \
  --anti_alias_type=sconv \
  --anti_alias_filter_size=3

The expected final output is:

...

| accuracy: 0.841860 |

...
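The `--anti_alias_type=sconv` and `--anti_alias_filter_size=3` flags in the evaluation command enable anti-aliased downsampling. As a rough sketch of the underlying idea only, the pure-Python code below assumes the size-3 filter is the normalized binomial blur used in BlurPool-style anti-aliasing ([1, 2, 1] outer [1, 2, 1], divided by 16): it blurs a single-channel map and then subsamples with stride 2. It does not model which layers the `sconv` option targets, the replicate padding is an arbitrary choice, and `blur_downsample` is a hypothetical name rather than a function from this repository.

```python
from typing import List

Matrix = List[List[float]]


def binomial_kernel_3x3() -> Matrix:
    """Outer product of [1, 2, 1] with itself, normalized so the weights sum to 1."""
    row = [1.0, 2.0, 1.0]
    return [[a * b / 16.0 for b in row] for a in row]


def blur_downsample(x: Matrix, stride: int = 2) -> Matrix:
    """Blur a 2-D map with the 3x3 binomial filter, then keep every `stride`-th pixel."""
    k = binomial_kernel_3x3()
    h, w = len(x), len(x[0])

    def px(i: int, j: int) -> float:
        # Replicate border pixels so the blurred map keeps the input size.
        return x[min(max(i, 0), h - 1)][min(max(j, 0), w - 1)]

    blurred = [
        [sum(k[di + 1][dj + 1] * px(i + di, j + dj)
             for di in (-1, 0, 1) for dj in (-1, 0, 1))
         for j in range(w)]
        for i in range(h)
    ]
    # Subsample rows and columns of the blurred map.
    return [r[::stride] for r in blurred[::stride]]


# A 4x4 checkerboard: plain stride-2 sampling (checker[::2], row[::2]) keeps only
# zeros, while blurring first preserves values close to the pattern's mean.
checker = [[float((i + j) % 2) for j in range(4)] for i in range(4)]
print(blur_downsample(checker))
```

Blurring before striding removes high-frequency content that would otherwise alias, which is why small input shifts change the output less.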

Training a model from scratch

For details on the training parameters, refer to here

Train a vanilla ResNet-50 on ImageNet from scratch:

$ ./scripts/train_vanila_from_scratch.sh

Train the fully assembled ResNet-50 on ImageNet from scratch:

$ ./scripts/train_assemble_from_scratch.sh

Fine-tuning the model

In the previous section, you trained a model from scratch. Alternatively, you can download a pretrained model to fine-tune from here.

Fine-tune a vanilla ResNet-50 on Food-101:

$ ./scripts/finetuning_vanila_on_food101.sh

Fine-tune the fully assembled ResNet-50 on Food-101:

$ ./scripts/finetuning_assemble_on_food101.sh

mCE evaluation

You can calculate mCE on the trained model as follows:

$ ./eval_assemble_mCE_on_imagenet.sh
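For reference, mCE as defined in the robustness benchmark listed under Acknowledgements sums a model's top-1 error over the severity levels of each corruption, divides it by AlexNet's summed error on the same corruption, and averages these ratios over all corruption types. The sketch below implements that formula in plain Python under that assumption; the dictionary layout, function names, and error numbers in the usage example are made up for illustration and are not results from this repository.

```python
from typing import Dict, List


def corruption_error(model_errors: List[float], baseline_errors: List[float]) -> float:
    """CE_c = sum over severities of the model's error / same sum for the baseline."""
    if len(model_errors) != len(baseline_errors):
        raise ValueError("need one error rate per severity for both models")
    return sum(model_errors) / sum(baseline_errors)


def mean_corruption_error(model: Dict[str, List[float]],
                          baseline: Dict[str, List[float]]) -> float:
    """mCE = mean of CE_c over all corruption types c, returned as a percentage."""
    ces = [corruption_error(model[c], baseline[c]) for c in baseline]
    return 100.0 * sum(ces) / len(ces)


if __name__ == "__main__":
    # Made-up top-1 error rates for severities 1..5 (NOT real measurements).
    baseline = {"gaussian_noise": [0.70, 0.80, 0.85, 0.90, 0.95],
                "motion_blur": [0.60, 0.70, 0.80, 0.85, 0.90]}
    model = {"gaussian_noise": [0.35, 0.40, 0.45, 0.50, 0.60],
             "motion_blur": [0.30, 0.35, 0.40, 0.50, 0.55]}
    print(f"mCE: {mean_corruption_error(model, baseline):.1f}%")
```

Because each corruption is normalized by the baseline's error, an mCE below 100% means the model is more robust than the baseline on average, and lower values are better.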

Acknowledgements

This implementation is based on these repositories:

- resnet official: https://github.com/tensorflow/models/tree/master/official/r1/resnet

- mce: https://github.com/hendrycks/robustness

- autoaugment: https://github.com/tensorflow/tpu/blob/master/models/official/efficientnet/autoaugment.py

Citation

@misc{lee2020compounding,
  title={Compounding the Performance Improvements of Assembled Techniques in a Convolutional Neural Network},
  author={Jungkyu Lee and Taeryun Won and Kiho Hong},
  year={2020},
  eprint={2001.06268},
  archivePrefix={arXiv},
  primaryClass={cs.CV}
}