InstanceRefer

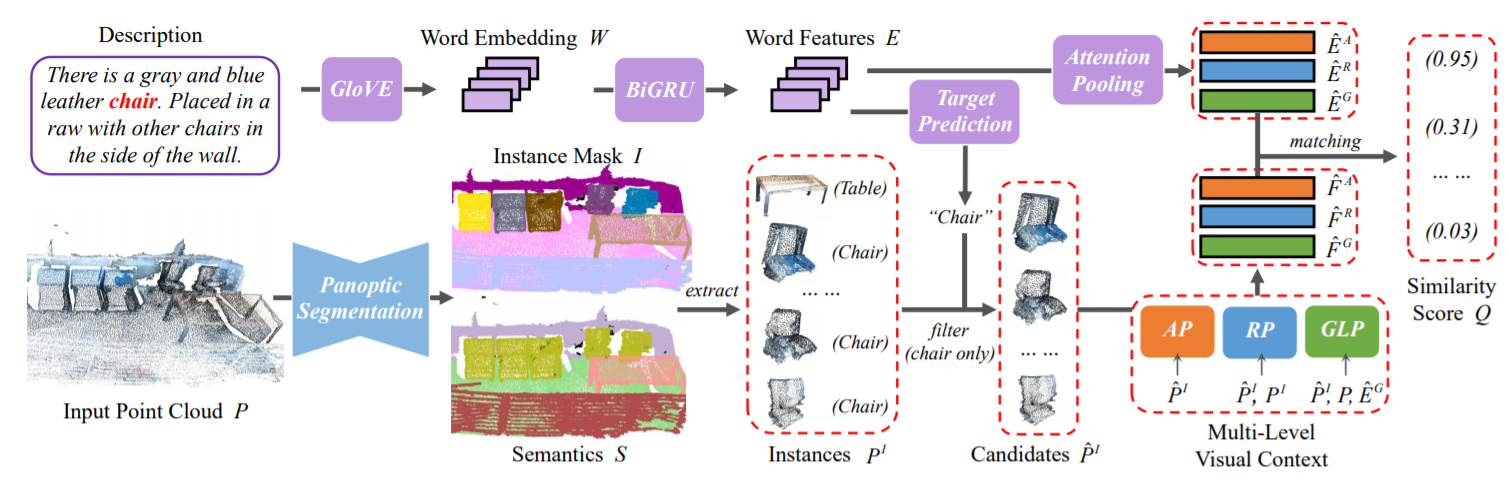

InstanceRefer: Cooperative Holistic Understanding for Visual Grounding on Point Clouds through Instance Multi-level Contextual Referring

This repository contains the code for our ICCV 2021 paper, which ranked 1st on the ScanRefer benchmark [arxiv paper].

Zhihao Yuan, Xu Yan, Yinghong Liao, Ruimao Zhang, Zhen Li*, Shuguang Cui

If you find our work useful in your research, please consider citing:

@article{yuan2021instancerefer,
  title={Instancerefer: Cooperative holistic understanding for visual grounding on point clouds through instance multi-level contextual referring},
  author={Yuan, Zhihao and Yan, Xu and Liao, Yinghong and Zhang, Ruimao and Li, Zhen and Cui, Shuguang},
  journal={ICCV},
  year={2021}
}

News

- 2021-07-23 InstanceRefer is accepted at ICCV 2021 :fire:!

- 2021-04-22 We release the evaluation code and a pre-trained model!

- 2021-03-31 We release InstanceRefer v1 :rocket:!

- 2021-03-18 We achieve 1st place on the ScanRefer leaderboard :fire:.

Getting Started

Setup

The code has been tested on Ubuntu 16.04 LTS and 18.04 LTS with PyTorch 1.6 + CUDA 10.1 and with PyTorch 1.8 + CUDA 10.2.

conda install pytorch==1.6.0 torchvision==0.7.0 cudatoolkit=10.1 -c pytorch

Install the required packages listed in requirements.txt:

pip install -r requirements.txt

After all packages are installed, run the following commands to install the Google sparsehash dependency and then compile torchsparse 1.2:

sudo apt-get install libsparsehash-dev

pip install --upgrade git+https://github.com/mit-han-lab/torchsparse.git
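Before moving on, it can help to confirm that the setup steps above produced importable packages. The script below is a minimal sketch that is not part of the repository; it assumes the import names torch, torchvision and torchsparse, and only reads torch attributes that standard PyTorch builds provide (torch.__version__, torch.version.cuda, torch.cuda.is_available()). Note that finding a module on the path does not guarantee its compiled extensions load, so actually importing torchsparse remains the stronger test.

```python
import importlib
import importlib.util

# Import names assumed for the packages installed in the setup steps.
REQUIRED_MODULES = ["torch", "torchvision", "torchsparse"]


def missing_modules(names):
    """Return the top-level module names that cannot be found on sys.path."""
    return [name for name in names if importlib.util.find_spec(name) is None]


def report():
    missing = missing_modules(REQUIRED_MODULES)
    if missing:
        print("Missing modules:", ", ".join(missing))
        return False
    torch = importlib.import_module("torch")
    # torch.version.cuda is the CUDA version PyTorch was built with (None on CPU-only builds).
    print(f"PyTorch {torch.__version__}, built with CUDA {torch.version.cuda}, "
          f"GPU available: {torch.cuda.is_available()}")
    return True


if __name__ == "__main__":
    raise SystemExit(0 if report() else 1)
```

A CUDA version reported here that differs from the one used to compile torchsparse is a common source of build or import errors.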

Before moving on to the next step, set CONF.PATH.BASE in lib/config.py to the project root path.
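A wrong CONF.PATH.BASE usually shows up later as a missing-file error, so a quick sanity check of the chosen root can save time. This is a hypothetical helper, not repository code: it only assumes that the project root contains the files this README mentions (lib/config.py, scripts/train.py, scripts/eval.py, config/InstanceRefer.yaml).

```python
import sys
from pathlib import Path

# Files referenced in this README, relative to the project root (assumed layout).
EXPECTED = [
    "lib/config.py",
    "scripts/train.py",
    "scripts/eval.py",
    "config/InstanceRefer.yaml",
]


def missing_project_files(base):
    """Return the expected files that are absent under the candidate root."""
    root = Path(base).expanduser().resolve()
    return [rel for rel in EXPECTED if not (root / rel).is_file()]


if __name__ == "__main__":
    base = sys.argv[1] if len(sys.argv) > 1 else "."
    missing = missing_project_files(base)
    print("OK" if not missing else "Missing: " + ", ".join(missing))
```

Running it with the same path you put into CONF.PATH.BASE should print OK.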

Data preparation

- Download the ScanRefer dataset and unzip it under data/.

- Download the preprocessed GloVe embeddings (~990MB) and put them under data/.

- Download the ScanNetV2 dataset and put (or link) scans/ under (or to) data/scannet/scans/ (please follow the ScanNet instructions for downloading the ScanNet dataset). After this step, there should be folders containing the ScanNet scene data under data/scannet/scans/ with names like scene0000_00.

- Use the official pre-trained PointGroup model to generate panoptic segmentation results in PointGroupInst/. We provide pre-processed data in Baidu Netdisk [password: 0nxc].

- Pre-process the instance labels with the following commands; the new data will be generated in data/scannet/pointgroup_data/:

cd data/scannet/

python prepare_data.py --split train --pointgroupinst_path [YOUR_PATH]

python prepare_data.py --split val --pointgroupinst_path [YOUR_PATH]

python prepare_data.py --split test --pointgroupinst_path [YOUR_PATH]
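The three commands above differ only in the --split value, so they can be run in one go. The wrapper below is a minimal sketch, not part of the repository; the file name prepare_all.py is hypothetical, and it assumes you launch it from the project root so that data/scannet is the correct working directory for prepare_data.py.

```python
import subprocess
import sys

SPLITS = ("train", "val", "test")


def build_commands(pointgroupinst_path, python=sys.executable):
    """One prepare_data.py invocation per split, mirroring the commands above."""
    return [
        [python, "prepare_data.py", "--split", split,
         "--pointgroupinst_path", pointgroupinst_path]
        for split in SPLITS
    ]


def run_all(pointgroupinst_path, cwd="data/scannet"):
    for cmd in build_commands(pointgroupinst_path):
        print("Running:", " ".join(cmd))
        # check=True stops at the first split that fails instead of continuing silently.
        subprocess.run(cmd, cwd=cwd, check=True)


if __name__ == "__main__":
    if len(sys.argv) != 2:
        sys.exit("usage: python prepare_all.py /path/to/PointGroupInst")
    run_all(sys.argv[1])
```

Using sys.executable keeps the subprocesses in the same (conda) environment as the wrapper.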

Finally, the dataset folder should be organized as follows.

InstanceRefer
├── data
│   ├── glove.p
│   ├── ScanRefer_filtered.json
│   ├── ...
│   ├── scannet
│   │   ├── meta_data
│   │   ├── pointgroup_data
│   │   │   ├── scene0000_00_aligned_bbox.npy
│   │   │   ├── scene0000_00_aligned_vert.npy
│   │   │   ├── ...
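The layout described above can be checked automatically before training. This is a hypothetical checker, not repository code: it assumes that data/scannet/scans/ holds one folder per scene named like scene0000_00, and that every *_aligned_bbox.npy file in pointgroup_data should have a matching *_aligned_vert.npy file, as in the tree.

```python
import re
import sys
from pathlib import Path

# Entries from the expected layout, relative to data/.
REQUIRED = ["glove.p", "ScanRefer_filtered.json", "scannet/meta_data", "scannet/pointgroup_data"]
SCENE_RE = re.compile(r"^scene\d{4}_\d{2}$")  # e.g. scene0000_00


def check_data_dir(data_root):
    """Return a list of human-readable problems; an empty list means the layout looks fine."""
    root = Path(data_root)
    problems = [f"missing: {rel}" for rel in REQUIRED if not (root / rel).exists()]

    scans = root / "scannet" / "scans"
    if scans.is_dir():
        bad = sorted(p.name for p in scans.iterdir() if p.is_dir() and not SCENE_RE.match(p.name))
        problems += [f"unexpected scan folder name: {name}" for name in bad]
    else:
        problems.append("missing: scannet/scans")

    pg = root / "scannet" / "pointgroup_data"
    if pg.is_dir():
        # Every bbox file should come with the vertex file of the same scene.
        for bbox in sorted(pg.glob("*_aligned_bbox.npy")):
            scene = bbox.name[: -len("_aligned_bbox.npy")]
            if not (pg / f"{scene}_aligned_vert.npy").exists():
                problems.append(f"{scene}: aligned_bbox.npy without aligned_vert.npy")
    return problems


if __name__ == "__main__":
    issues = check_data_dir(sys.argv[1] if len(sys.argv) > 1 else "data")
    print("\n".join(issues) if issues else "data/ layout looks complete")
```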

Training

Train the InstanceRefer model with the command below. Hyper-parameters can be changed in config/InstanceRefer.yaml.

python scripts/train.py --log_dir instancerefer

Evaluation

Set use_checkpoint in config/InstanceRefer.yaml to the folder that contains model.pth, then run:

python scripts/eval.py
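To find a value for use_checkpoint, you can list the folders that contain a model.pth file, newest first. This is a minimal sketch, not repository code; the default search root outputs/ is an assumption about where training writes its logs, so pass the actual log location if it differs. Whether use_checkpoint expects an absolute path or a folder name relative to the output directory depends on how scripts/eval.py resolves it, so check that before copying a path into the YAML file.

```python
import sys
from pathlib import Path


def find_checkpoint_dirs(root="outputs"):
    """Return the folders under root that contain model.pth, most recently modified first."""
    ckpts = sorted(Path(root).rglob("model.pth"), key=lambda p: p.stat().st_mtime, reverse=True)
    return [p.parent for p in ckpts]


if __name__ == "__main__":
    # "outputs" is an assumed default location; override it on the command line.
    for folder in find_checkpoint_dirs(sys.argv[1] if len(sys.argv) > 1 else "outputs"):
        print(folder)
```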

Pre-trained Models

| Input | Acc@0.25IoU | Acc@0.5IoU | Checkpoints |
|---|---|---|---|
| xyz+rgb | 37.6 | 30.7 | Baidu Netdisk [password: lrpb] |
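The ScanRefer benchmark reports grounding accuracy at 3D IoU thresholds of 0.25 and 0.5: the percentage of referring descriptions whose predicted box overlaps the ground-truth box with an IoU at or above the threshold. The sketch below illustrates that definition; it is not the repository's evaluation code, and it assumes axis-aligned boxes given as (xmin, ymin, zmin, xmax, ymax, zmax).

```python
from typing import Sequence, Tuple

# Axis-aligned 3D box: (xmin, ymin, zmin, xmax, ymax, zmax).
Box = Tuple[float, float, float, float, float, float]


def box_volume(b: Box) -> float:
    return max(0.0, b[3] - b[0]) * max(0.0, b[4] - b[1]) * max(0.0, b[5] - b[2])


def iou_3d(a: Box, b: Box) -> float:
    # Overlap along each axis is clamped at 0 when the boxes do not intersect.
    inter = (max(0.0, min(a[3], b[3]) - max(a[0], b[0]))
             * max(0.0, min(a[4], b[4]) - max(a[1], b[1]))
             * max(0.0, min(a[5], b[5]) - max(a[2], b[2])))
    union = box_volume(a) + box_volume(b) - inter
    return inter / union if union > 0 else 0.0


def acc_at_iou(preds: Sequence[Box], gts: Sequence[Box], threshold: float) -> float:
    """Percentage of descriptions whose predicted box reaches the IoU threshold."""
    if len(preds) != len(gts) or not preds:
        raise ValueError("need equally sized, non-empty prediction and ground-truth lists")
    hits = sum(iou_3d(p, g) >= threshold for p, g in zip(preds, gts))
    return 100.0 * hits / len(preds)
```

For example, a unit cube shifted by half its width along x has IoU 0.5 / 1.5 = 1/3 with the original, so it counts as a hit at 0.25 but a miss at 0.5.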

TODO

- [ ] Update to the best version.

- [ ] Release code for prediction on the benchmark.

- [x] Release pre-trained model.

- [ ] Merge PointGroup in an end-to-end manner.

Acknowledgement

This project would not have been possible without multiple great open-source codebases.

License

This repository is released under MIT License (see LICENSE file for details).