ResidualMaskingNetwork

Facial Expression Recognition using Residual Masking Network, in PyTorch.

A PyTorch implementation of my thesis with the same name.

Live Demo:

Approach 1:

- Install from pip

pip install rmn

- Run the video demo with the following Python script:

from rmn import video_demo

video_demo()

Approach 2:

- Clone the repo and install the package via pip:

git clone git@github.com:phamquiluan/ResidualMaskingNetwork.git

cd ResidualMaskingNetwork

pip install -e .

- Call video_demo in the rmn package:

from rmn import video_demo

video_demo()

Approach 3:

- Model file: download (this checkpoint is trained on the VEMO dataset; place it in the ./saved/checkpoints/ directory).

- Download 2 files for OpenCV face detection: prototxt and res10_300x300_ssd. Place them in the current directory, or check the file paths in the ssd_infer.py file.

python ssd_infer.py

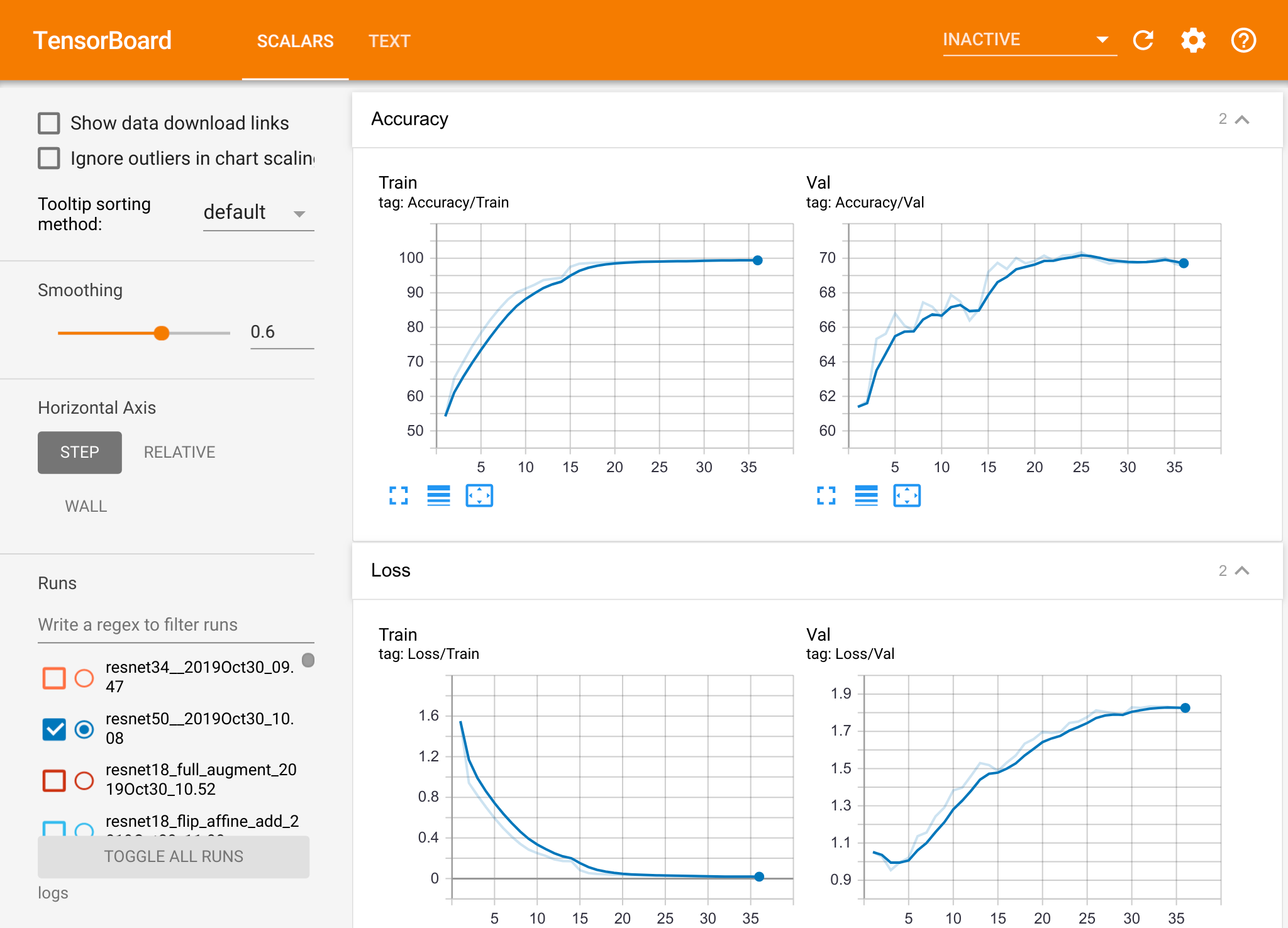

Benchmarking on FER2013

We benchmark our code thoroughly on two datasets: FER2013 and VEMO. Below are the results and trained weights:

| Model | Accuracy (%) |
|---|---|
| VGG19 | 70.80 |
| EfficientNet_b2b | 70.80 |
| Googlenet | 71.97 |
| Resnet34 | 72.42 |
| Inception_v3 | 72.72 |
| Bam_Resnet50 | 73.14 |
| Densenet121 | 73.16 |
| Resnet152 | 73.22 |
| Cbam_Resnet50 | 73.39 |
| ResMaskingNet | 74.14 |
| ResMaskingNet + 6 | 76.82 |

Results on the VEMO dataset can be found in my thesis or slides (listed below).

Benchmarking on ImageNet

We also benchmark our model on the ImageNet dataset.

| Model | Top-1 Accuracy (%) | Top-5 Accuracy (%) |
|---|---|---|
| Resnet34 | 72.59 | 90.92 |
| CBAM Resnet34 | 73.77 | 91.72 |
| ResidualMaskingNetwork | 74.16 | 91.91 |
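For readers unfamiliar with the two columns above, here is a minimal sketch of how top-k accuracy is usually computed: a sample counts as correct when its true label is among the k highest-scoring classes. The function name and the toy scores are illustrative assumptions, not code or results from this repository.

```python
def topk_accuracy(scores, labels, k=1):
    """Percentage of samples whose true label is among the k highest-scoring classes."""
    hits = 0
    for row, label in zip(scores, labels):
        # Class indices sorted by descending score, truncated to the top k.
        ranked = sorted(range(len(row)), key=lambda i: row[i], reverse=True)[:k]
        hits += label in ranked
    return 100.0 * hits / len(labels)


# Toy scores for 4 samples over 3 classes (made-up numbers).
scores = [
    [0.7, 0.2, 0.1],
    [0.1, 0.3, 0.6],
    [0.5, 0.4, 0.1],
    [0.2, 0.5, 0.3],
]
labels = [0, 1, 1, 2]

top1 = topk_accuracy(scores, labels, k=1)
top2 = topk_accuracy(scores, labels, k=2)
print(f"top-1: {top1:.2f}%  top-2: {top2:.2f}%")
```

Top-5 on ImageNet follows the same rule with k=5 over 1000 classes.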

Installation

- Install PyTorch by selecting your environment on the website and running the appropriate command.

- Clone this repository and install the package prerequisites listed below.

- Then download the dataset by following the instructions below.

Prerequisites

- Python 3.6+

- PyTorch 1.3+

- Torchvision 0.4.0+

- requirements.txt

Datasets

- FER2013 Dataset (place it in saved/data/fer2013, for example saved/data/fer2013/train.csv)

- ImageNet 1K Dataset (ensure it can be loaded by torchvision.datasets.ImageNet)
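As a quick sanity check of the FER2013 file, the sketch below parses rows into a label and a 48x48 image. It assumes the common Kaggle column layout (an emotion column with the class index and a pixels column with 2304 space-separated grey values); the helper names are hypothetical and this is not the loader used by this repository.

```python
import csv
import io

IMAGE_SIZE = 48  # FER2013 images are 48x48 greyscale


def parse_fer2013_row(row):
    """Convert one CSV row into (label, image), where image is a 48x48 nested list of ints."""
    pixels = [int(p) for p in row["pixels"].split()]
    if len(pixels) != IMAGE_SIZE * IMAGE_SIZE:
        raise ValueError(f"expected {IMAGE_SIZE * IMAGE_SIZE} pixels, got {len(pixels)}")
    image = [pixels[r * IMAGE_SIZE:(r + 1) * IMAGE_SIZE] for r in range(IMAGE_SIZE)]
    return int(row["emotion"]), image


def load_fer2013(fileobj):
    """Read every row from an open CSV file object."""
    return [parse_fer2013_row(row) for row in csv.DictReader(fileobj)]


# Tiny in-memory stand-in for saved/data/fer2013/train.csv (one uniform grey image).
fake_csv = io.StringIO("emotion,pixels\n3," + " ".join(["128"] * 2304) + "\n")
samples = load_fer2013(fake_csv)
label, image = samples[0]
print(label, len(image), len(image[0]))
```

To try it on the real file, pass open("saved/data/fer2013/train.csv", newline="") instead of the in-memory buffer.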

Training on FER2013

- To train a network, specify the model name and other hyperparameters in a config file (located at configs/*), make sure that config file is loaded in the main file, then start training by running the main file, for example:

python main_fer.py # Example for fer2013_config.json file

- The best checkpoint is chosen by best validation accuracy and saved in saved/checkpoints.

- The TensorBoard training logs are located in saved/logs; to open them, use tensorboard --logdir saved/logs/

- By default, it will train the alexnet model. You can switch to another model by editing the configs/fer2013_config.json file (to resnet18, cbam_resnet50, or my network resmasking_dropout1).
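Switching the model can also be scripted. The sketch below rewrites one key of a JSON config file; the key name "arch" and the helper switch_model are assumptions for illustration, so check configs/fer2013_config.json for the actual field that holds the model name before using it.

```python
import json
import os
import tempfile


def switch_model(config_path, model_name, key="arch"):
    """Set config[key] to model_name and write the file back. `key` is a hypothetical field name."""
    with open(config_path) as f:
        config = json.load(f)
    config[key] = model_name
    with open(config_path, "w") as f:
        json.dump(config, f, indent=2)
    return config


# Demo on a throwaway file so the real config is not touched.
with tempfile.TemporaryDirectory() as tmp:
    demo_path = os.path.join(tmp, "fer2013_config.json")
    with open(demo_path, "w") as f:
        json.dump({"arch": "alexnet", "lr": 0.001}, f)
    updated = switch_model(demo_path, "resmasking_dropout1")
    print(updated)
```

All other keys in the file are left unchanged, so hyperparameters survive the switch.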

Training on Imagenet dataset

To train resnet34 on 4 V100 GPUs on a single machine:

python ./main_imagenet.py -a resnet34 --dist-url 'tcp://127.0.0.1:12345' --dist-backend 'nccl' --multiprocessing-distributed --world-size 1 --rank 0
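The flags --world-size 1 --rank 0 may look odd for a 4-GPU run. Assuming main_imagenet.py follows the convention of the official PyTorch ImageNet example (an assumption, not verified against this repository), --world-size counts machines and --rank is the machine index, and with --multiprocessing-distributed one process is spawned per GPU. The hypothetical helper below shows the resulting arithmetic.

```python
def expand_distributed_args(num_nodes, node_rank, gpus_per_node):
    """Return (total process count, global ranks on this node) under the one-process-per-GPU convention."""
    total_processes = num_nodes * gpus_per_node
    # Each local GPU index maps to a unique global rank.
    ranks = [node_rank * gpus_per_node + gpu for gpu in range(gpus_per_node)]
    return total_processes, ranks


# One machine (--world-size 1, --rank 0) with 4 GPUs.
total_processes, process_ranks = expand_distributed_args(num_nodes=1, node_rank=0, gpus_per_node=4)
print(total_processes, process_ranks)
```

Under the same assumption, a second machine would be launched with --rank 1 and --world-size 2 on both machines.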

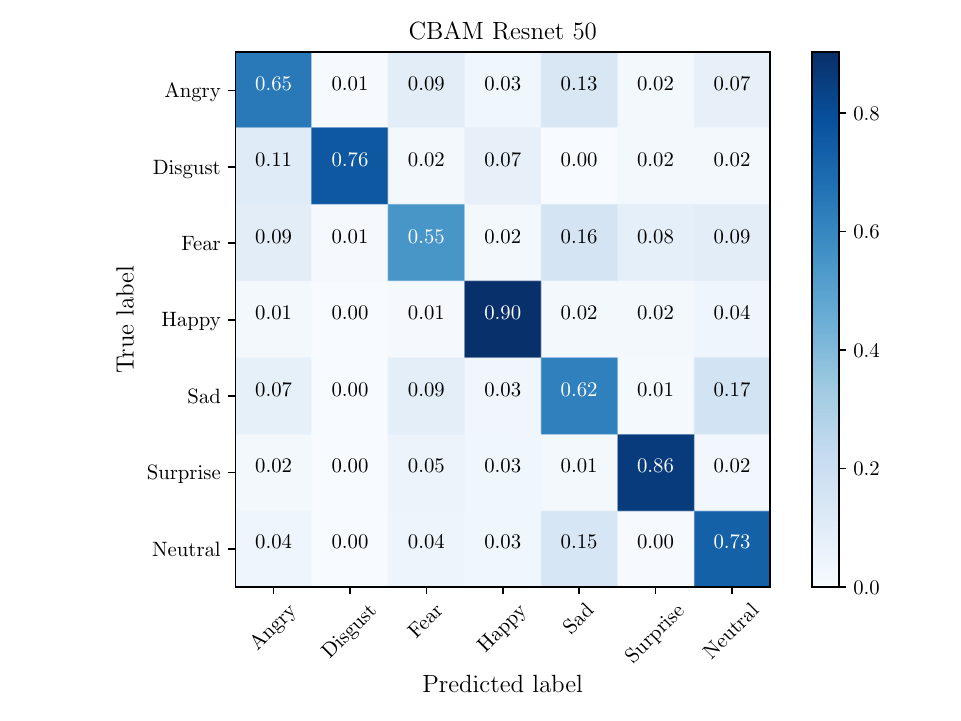

Evaluation

For students who care about the font family of the confusion matrix and would like to typeset the labels in LaTeX, below is an example for generating a striking confusion matrix.

(Read this article for more information; there will be some bugs if you blindly run the code without reading it.)

python cm_cbam.py
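Before plotting, the matrix itself has to be computed. The sketch below is a generic, dependency-free way to build a row-normalized confusion matrix from labels and predictions; it is not the code in cm_cbam.py, and the toy labels are made up.

```python
def confusion_matrix(y_true, y_pred, num_classes):
    """Count matrix where entry [t][p] is the number of samples of class t predicted as p."""
    matrix = [[0] * num_classes for _ in range(num_classes)]
    for t, p in zip(y_true, y_pred):
        matrix[t][p] += 1
    return matrix


def normalize_rows(matrix):
    """Divide each row by its sum so every row shows per-class recall fractions."""
    normalized = []
    for row in matrix:
        total = sum(row)
        normalized.append([count / total if total else 0.0 for count in row])
    return normalized


# Toy labels and predictions for 3 classes.
y_true = [0, 0, 1, 1, 2, 2]
y_pred = [0, 1, 1, 1, 2, 0]

cm = confusion_matrix(y_true, y_pred, num_classes=3)
cm_norm = normalize_rows(cm)
for row in cm_norm:
    print(" ".join(f"{value:.2f}" for value in row))
```

The diagonal of the normalized matrix is the per-class recall, which is usually what the plotted figure highlights.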

Ensemble method

I used an unweighted average ensemble method to fuse 7 different models together. To reproduce the results, follow these steps:

- Download all needed trained weights and place them in the ./saved/checkpoints/ directory. Download links can be found in the Benchmarking section.

- Edit the gen_results file and run it to generate offline results for each model.

- Run the gen_ensemble.py file to generate accuracy for example methods.
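The idea behind unweighted averaging can be sketched in a few lines: every model contributes its per-class probabilities with equal weight, and the fused prediction is the argmax of the mean. The function names and toy probabilities below are illustrative assumptions and do not reproduce gen_ensemble.py.

```python
def average_ensemble(model_probs):
    """Average class probabilities over models.

    model_probs[m][s][c] is the probability model m assigns to class c for sample s.
    """
    num_models = len(model_probs)
    num_samples = len(model_probs[0])
    num_classes = len(model_probs[0][0])
    fused = []
    for s in range(num_samples):
        fused.append(
            [sum(model[s][c] for model in model_probs) / num_models for c in range(num_classes)]
        )
    return fused


def predict(fused):
    """Pick the class with the highest averaged probability for each sample."""
    return [max(range(len(row)), key=row.__getitem__) for row in fused]


# Three toy models, two samples, three classes (made-up probabilities).
model_a = [[0.6, 0.3, 0.1], [0.2, 0.5, 0.3]]
model_b = [[0.2, 0.7, 0.1], [0.1, 0.2, 0.7]]
model_c = [[0.5, 0.4, 0.1], [0.3, 0.3, 0.4]]

fused = average_ensemble([model_a, model_b, model_c])
predictions = predict(fused)
print(predictions)
```

In this toy case two of the three models prefer class 0 for the first sample, yet the averaged probabilities favor class 1, which shows how averaging differs from majority voting.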

Dissertation and Slide

- Dissertation PDF (in Vietnamese)

- Dissertation Overleaf Source

- Presentation slide PDF (in English) with full appendix

- Presentation slide Overleaf Source

TODO

We have accumulated the following to-do list, which we hope to complete in the near future.

- Still to come:

- [x] Upload all models and training code.

- [x] Test time augmentation.

- [x] GPU-Parallel.

- [x] Pretrained model.

- [x] Demo and inference code.

- [x] Imagenet trained and pretrained weights.

- [ ] GradCAM visualization and pooling method for visualizing activations.

- [ ] Centerloss Visualizations.

Authors

Note: Unfortunately, I currently have a full-time job and am doing research on another topic, so I'll do my best to keep things up to date, but no guarantees. That being said, thanks to everyone for your continued help and feedback; it is really appreciated. I will try to address everything as soon as possible.

References

- Same as in dissertation.

Citation

@misc{luanresmaskingnet2020,
  Author = {Luan Pham and Tuan Anh Tran},
  Title = {Facial Expression Recognition using Residual Masking Network},
  url = {https://github.com/phamquiluan/ResidualMaskingNetwork},
  Year = {2020}
}