Stock-market-forecasting

Forecasting directional movements of stock prices for intraday trading using LSTM and random forests

https://arxiv.org/abs/2004.10178

Pushpendu Ghosh, Ariel Neufeld, Jajati K Sahoo

We design a highly profitable trading strategy and employ random forests and LSTM networks (more precisely CuDNNLSTM) to analyze their effectiveness in forecasting out-of-sample directional movements of constituent stocks of the S&P 500, for intraday trading, from January 1993 to December 2018.

Bibtex

@article{ghosh2021forecasting,

title={Forecasting directional movements of stock prices for intraday trading using LSTM and random forests},

author={Ghosh, Pushpendu and Neufeld, Ariel and Sahoo, Jajati Keshari},

journal={Finance Research Letters},

pages={102280},

year={2021},

publisher={Elsevier}

}

Requirements

pip install scikit-learn==0.20.4

pip install tensorflow==1.14.0

Plots

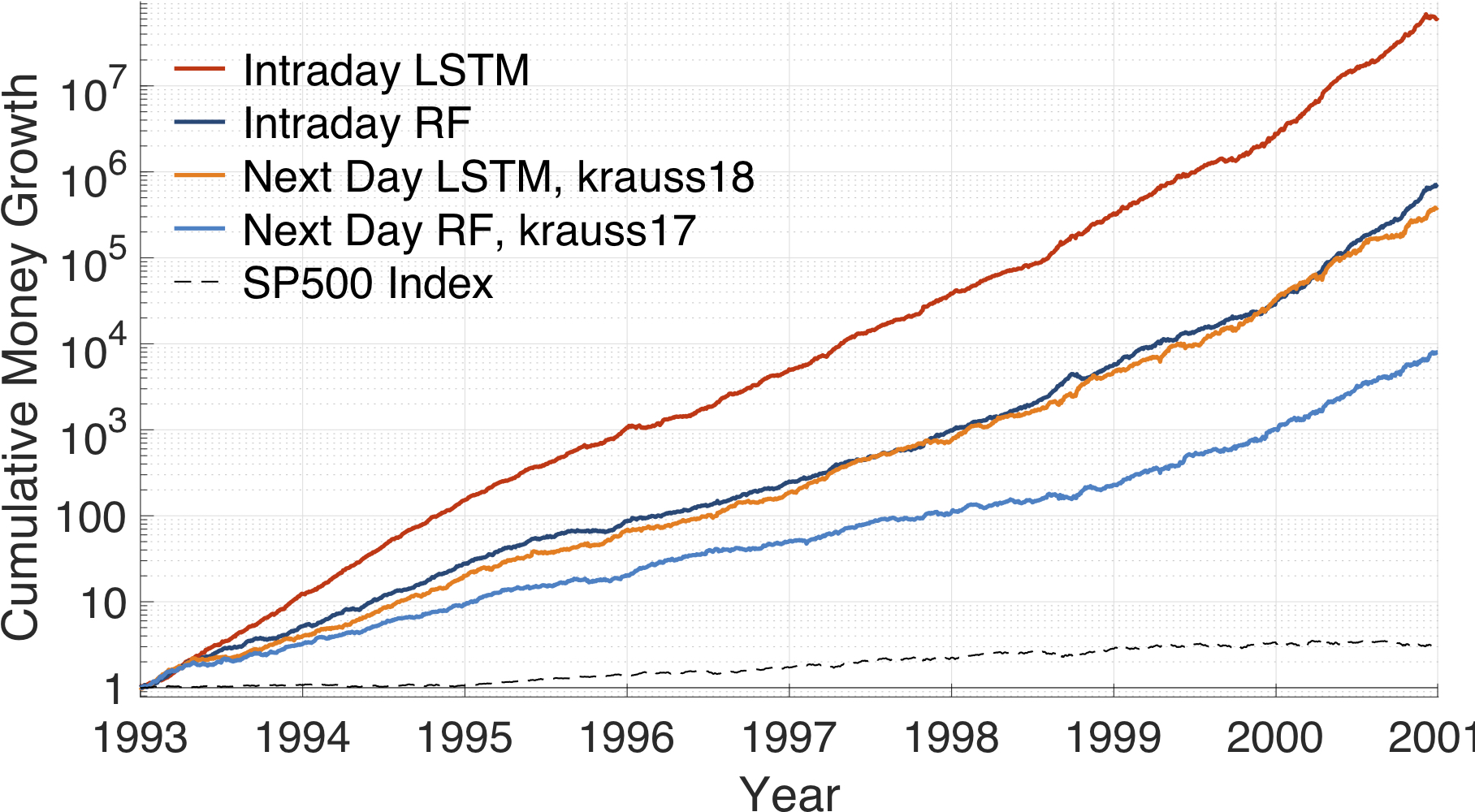

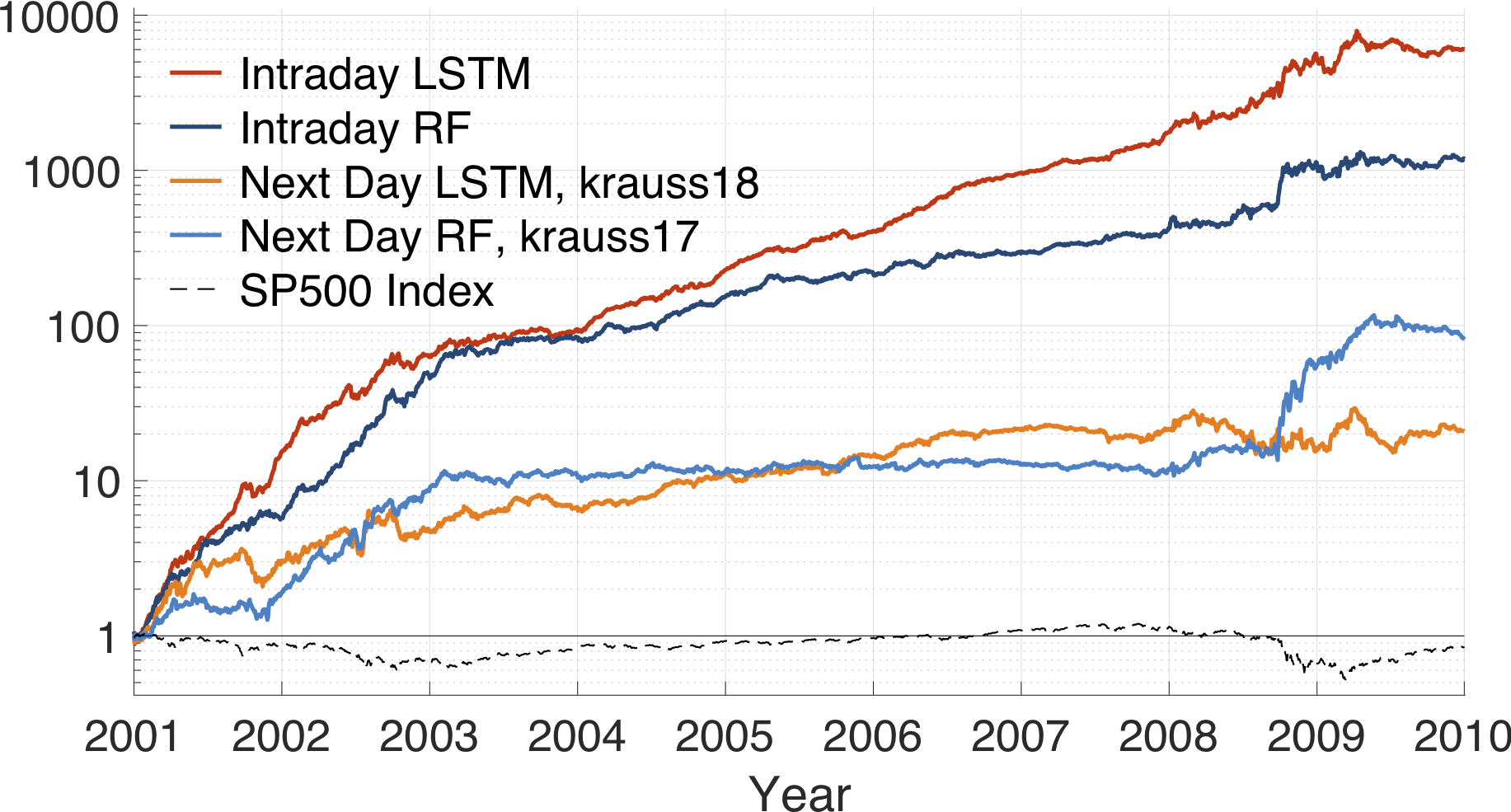

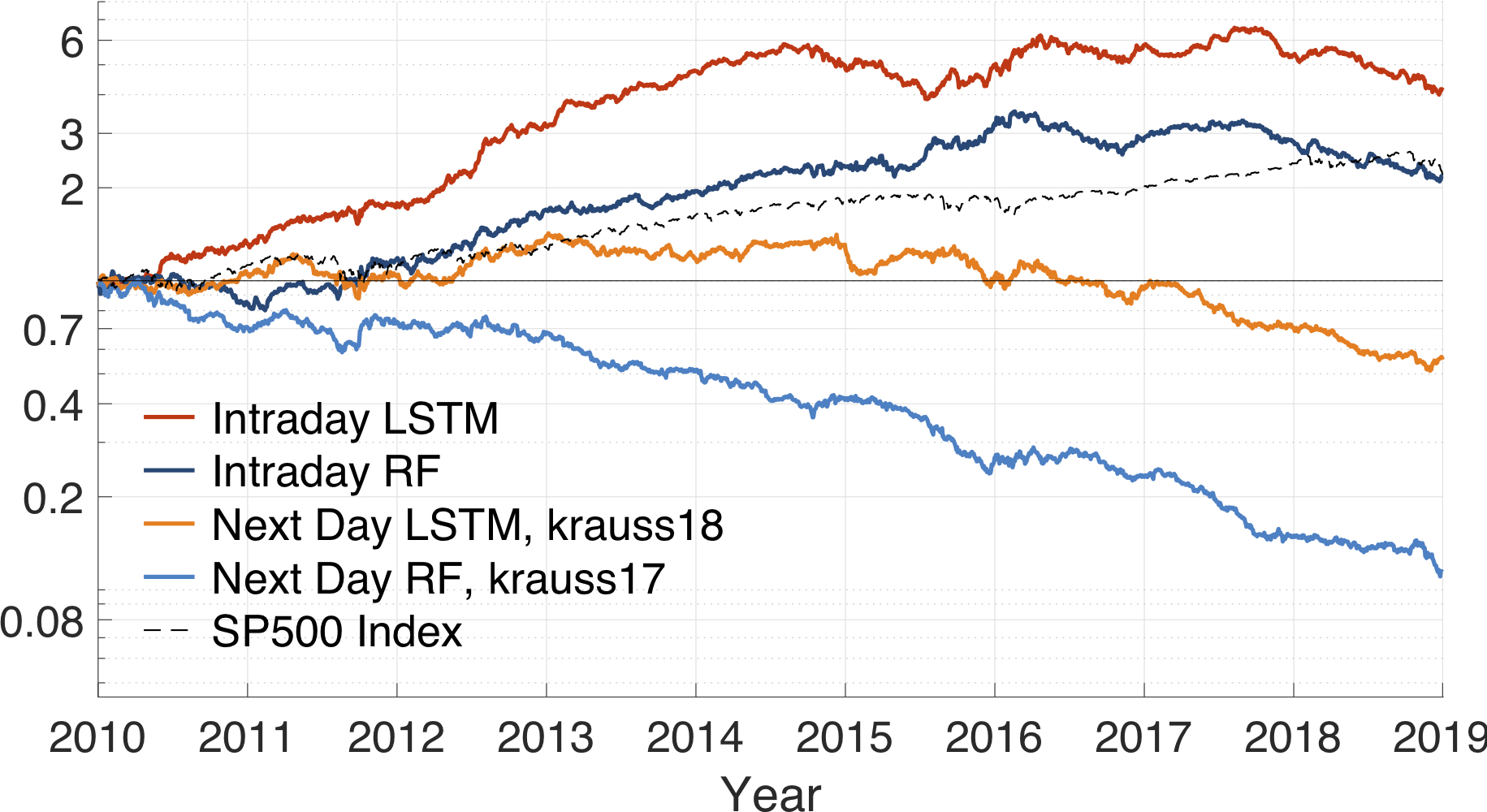

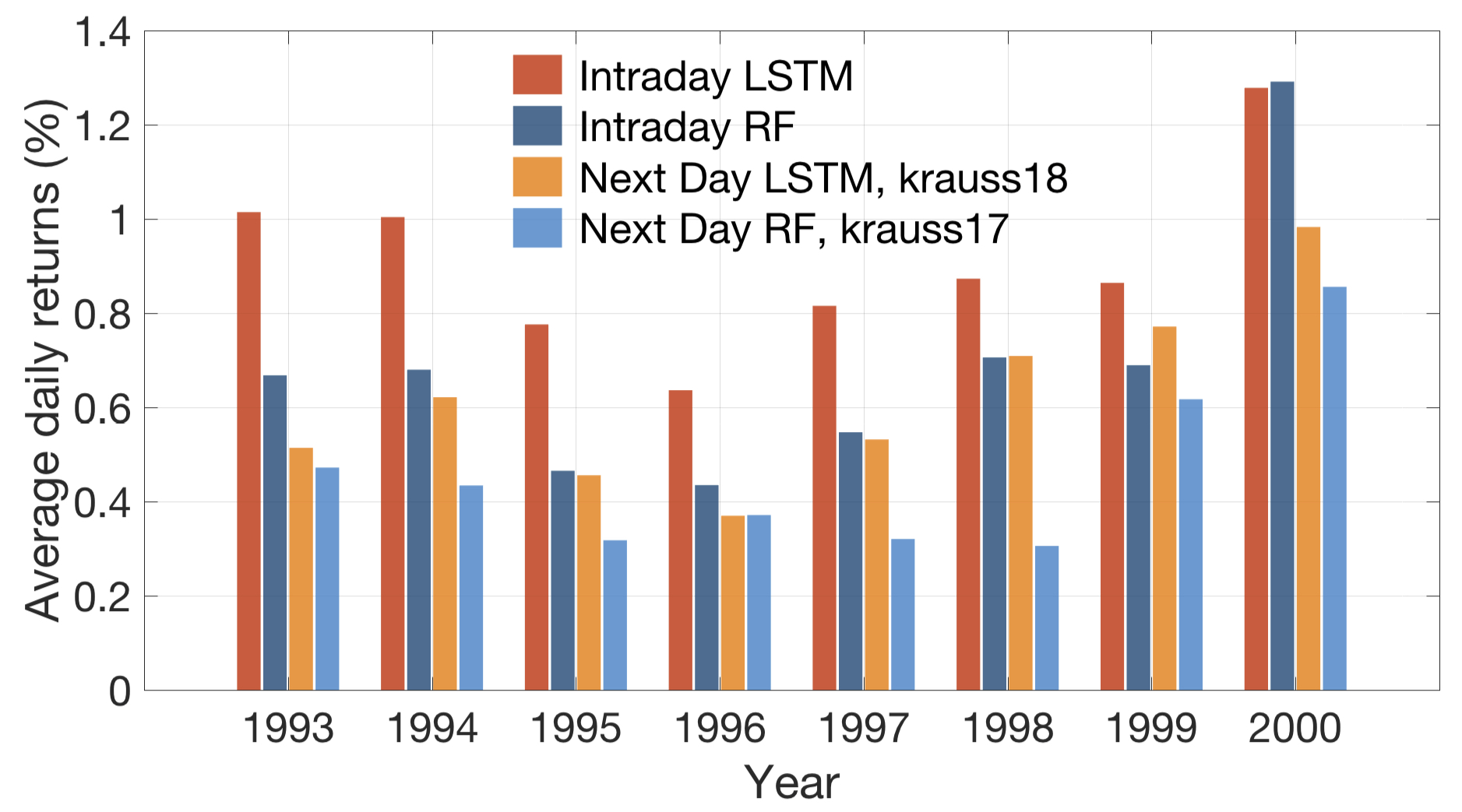

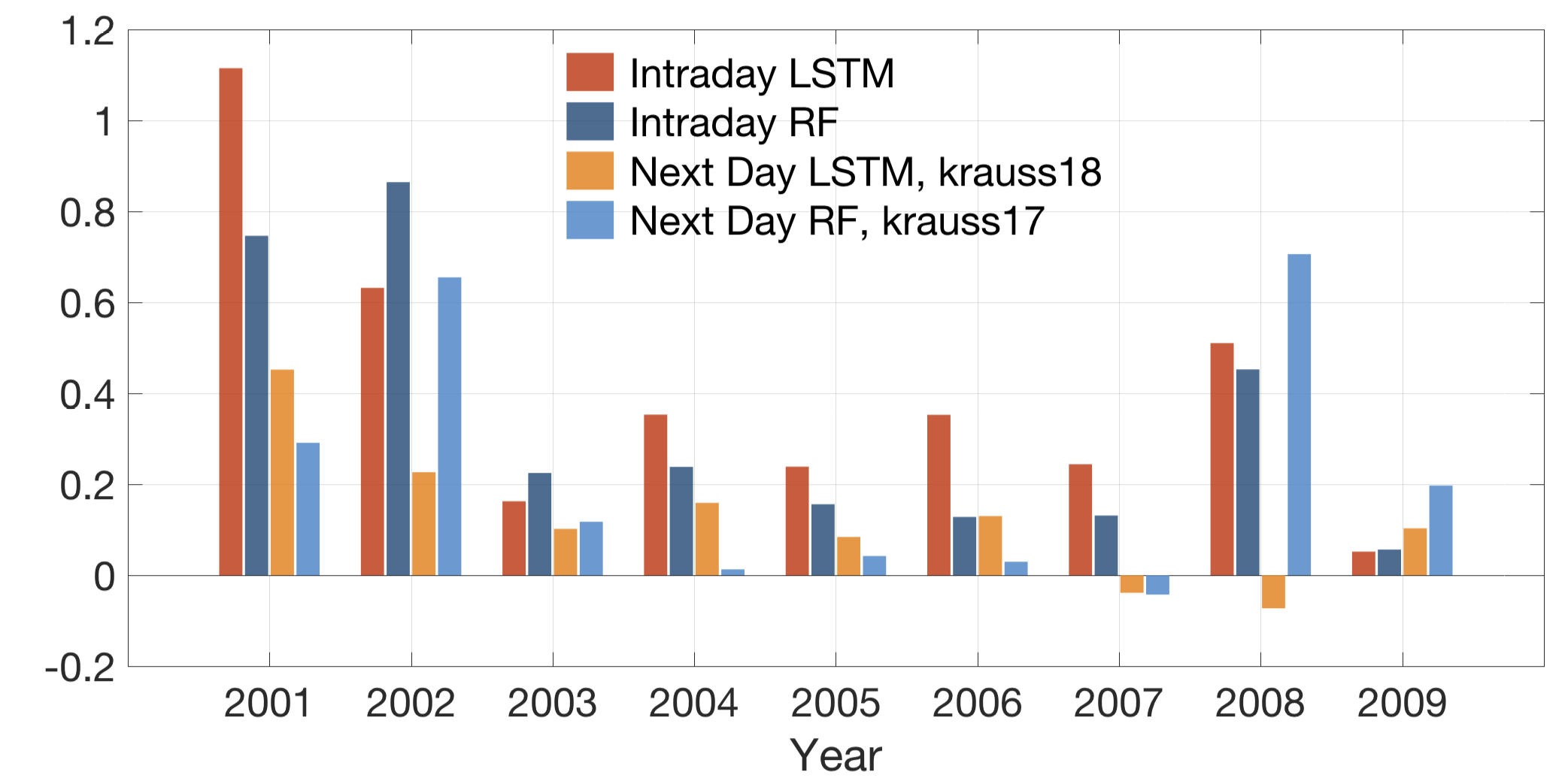

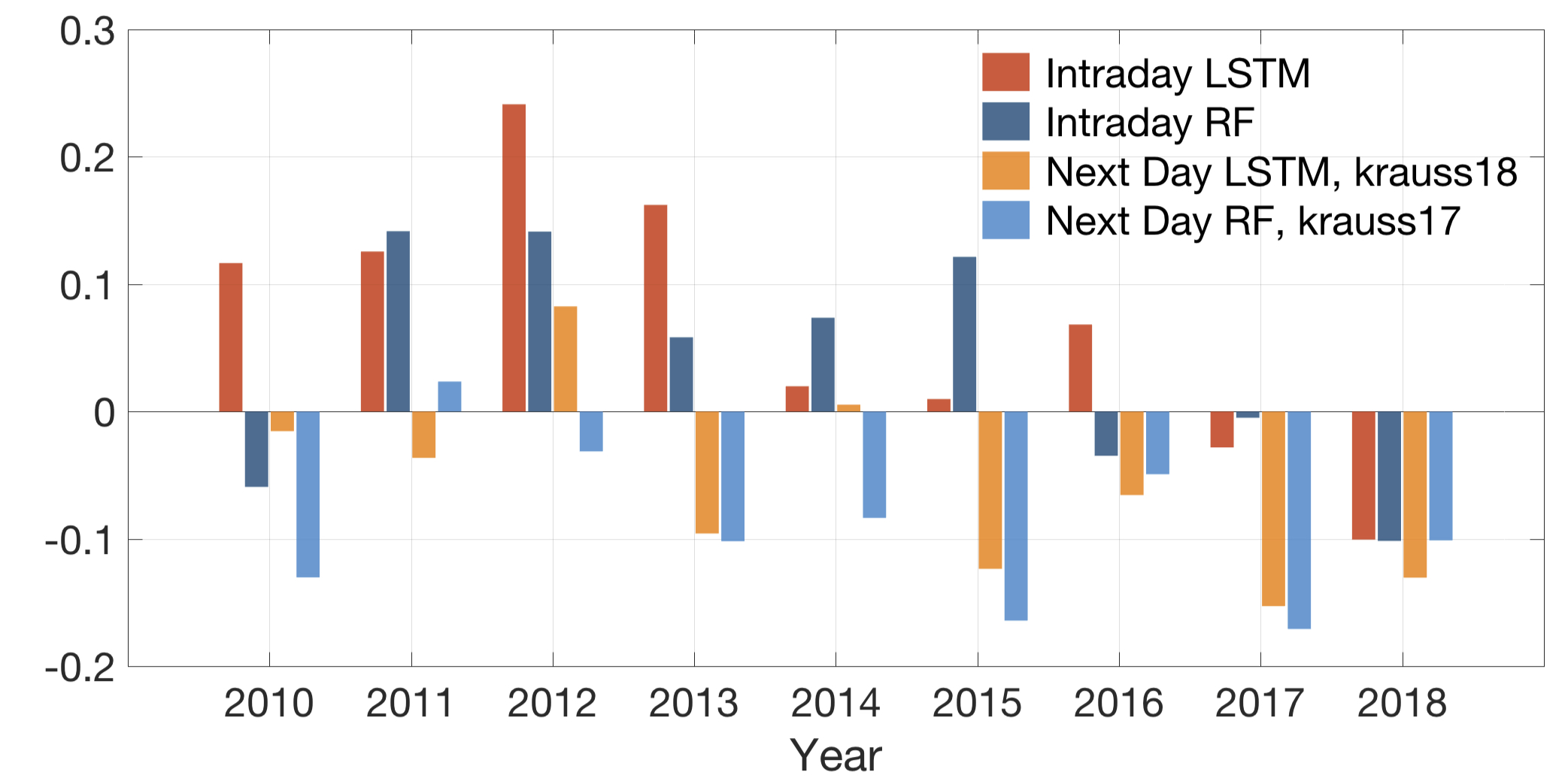

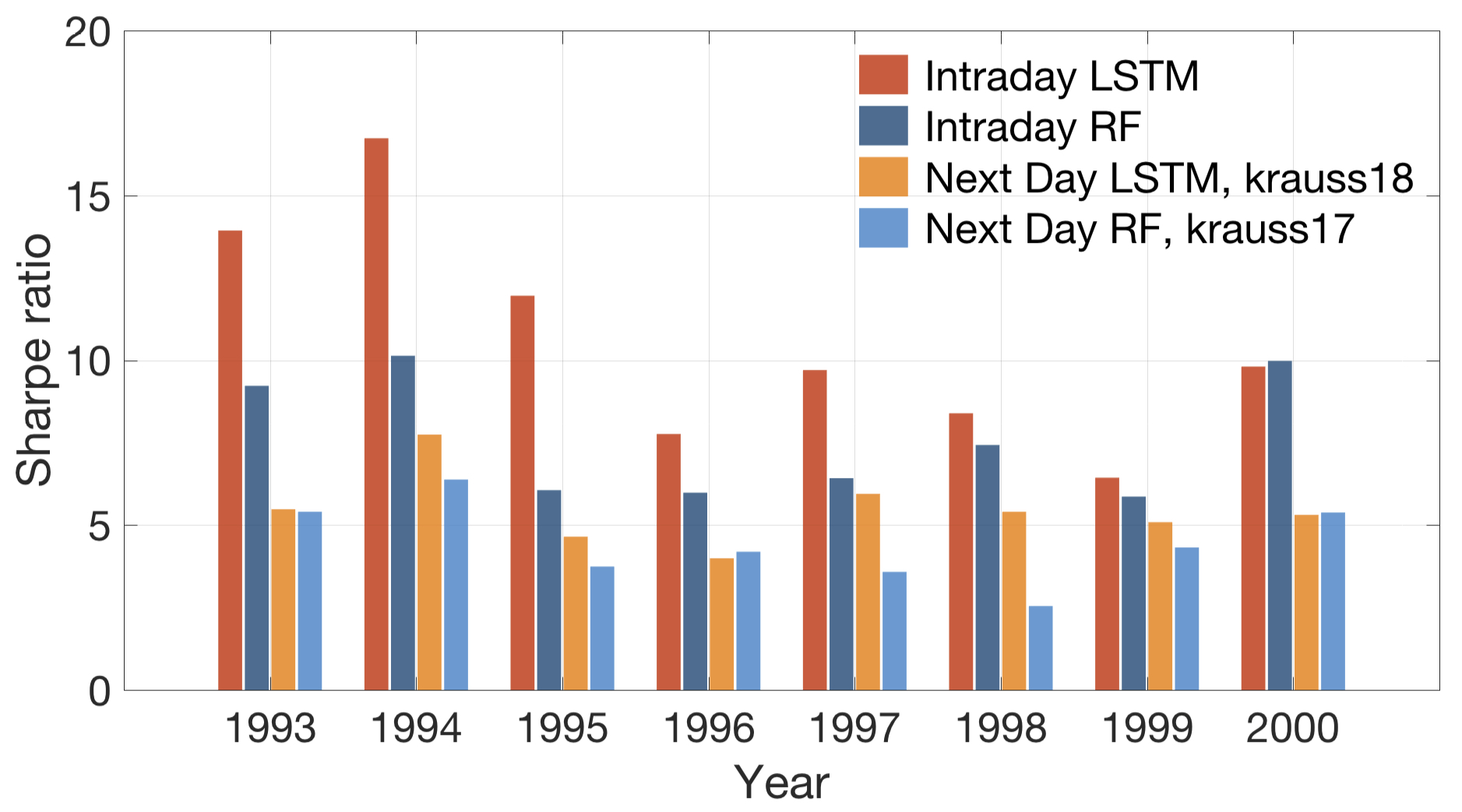

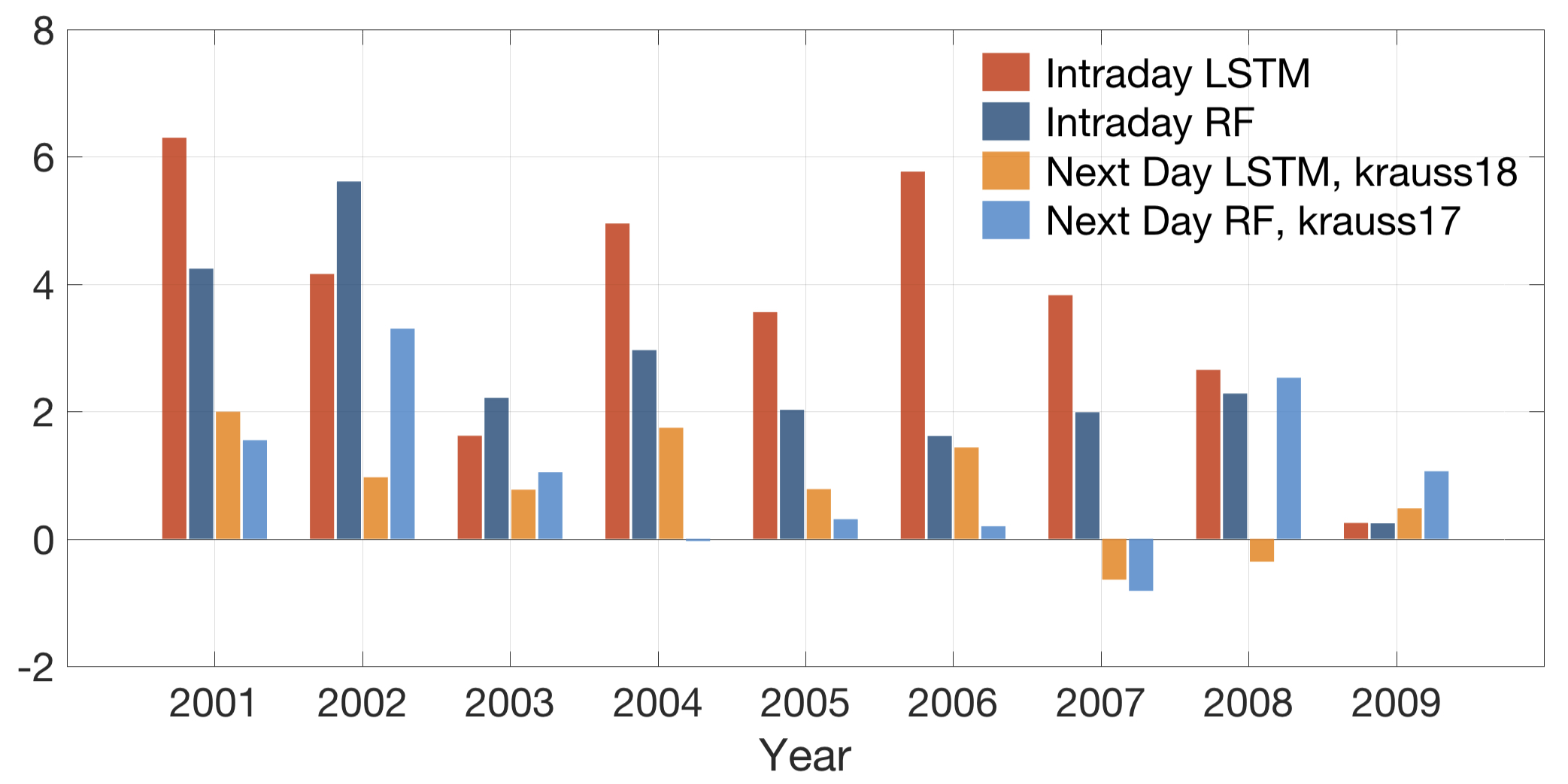

We plot three important metrics to quantify the effectiveness of our models, Intraday-240,3-LSTM.py and Intraday-240,3-RF.py, over the period January 1993 to December 2018.

Intraday LSTM: Intraday-240,3-LSTM.py

Intraday RF: Intraday-240,3-RF.py

Next Day LSTM, krauss18: NextDay-240,1-LSTM.py [1]

Next Day RF, krauss17: NextDay-240,1-RF.py [2]

Cumulative Money growth (after transaction cost)

Average daily returns (after transaction cost)

Average (Annualized) Sharpe ratio (after transaction cost)
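The three metrics above can be computed from a series of daily portfolio returns. The following is a minimal sketch, not the code used to produce the plots: the function name `performance_summary`, the flat `cost_per_day` deduction, the 252 trading days per year, and the zero risk-free rate in the Sharpe ratio are all assumptions made for illustration.

```python
import math


def performance_summary(daily_returns, cost_per_day=0.0, periods_per_year=252):
    """Summarise daily returns given as fractions (0.01 means 1%).

    Assumptions (not taken from the repository): a flat daily cost is
    subtracted from every return, and the Sharpe ratio uses a zero
    risk-free rate. At least two observations are required.
    """
    if len(daily_returns) < 2:
        raise ValueError("need at least two daily returns")

    # Returns after transaction cost
    net = [r - cost_per_day for r in daily_returns]
    n = len(net)

    # Average daily return
    mean = sum(net) / n

    # Sample standard deviation of daily returns
    variance = sum((r - mean) ** 2 for r in net) / (n - 1)
    std = math.sqrt(variance)

    # Cumulative money growth of one unit of capital, compounded daily
    growth = 1.0
    for r in net:
        growth *= 1.0 + r

    # Annualized Sharpe ratio; undefined when returns never vary
    if std > 0:
        sharpe = math.sqrt(periods_per_year) * mean / std
    else:
        sharpe = float("nan")

    return {
        "cumulative_growth": growth,
        "mean_daily_return": mean,
        "annualized_sharpe": sharpe,
    }


summary = performance_summary([0.01, -0.005, 0.02, 0.0], cost_per_day=0.001)
print(summary)
```

Compounding the net returns gives the money-growth curve's final value; applying the same loop to a growing prefix of the series would give the full curve.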

Appendix

Feature Importance

The results suggest that the feature `or` (returns from the close price to the next day's open price) has the highest importance. This is plausible because it is the only feature that uses the latest data available when the trading decision is made (the trading day's open price). We also see that our 3-feature setting achieves the highest Sharpe ratio and hence outperforms each single feature.
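To make the feature discussion concrete, here is a small sketch of how three lagged return features could be derived from daily open and close prices. Only the close-to-open feature is described in this README; the other two (an intraday open-to-close return and a close-to-close return), the function name `return_features`, and the exact lag indexing are assumptions for illustration and may differ from the scripts in this repository.

```python
def return_features(open_prices, close_prices, t, m):
    """Return three lag-m return features for trading day t.

    Hypothetical helper: prices are lists indexed by trading day,
    t is the day on which the trade is placed, m >= 1 is the lag.
    """
    if m < 1 or t - 1 - m < 0 or t >= len(open_prices):
        raise IndexError("not enough price history for this (t, m)")

    # Intraday return on day t-m: open to close of the same day
    intraday = close_prices[t - m] / open_prices[t - m] - 1.0

    # Close-to-close return over m days, ending at yesterday's close
    close_to_close = close_prices[t - 1] / close_prices[t - 1 - m] - 1.0

    # Close-to-open return: close of day t-m to today's open.
    # This is the only feature that uses a price from day t itself.
    close_to_open = open_prices[t] / close_prices[t - m] - 1.0

    return intraday, close_to_close, close_to_open


opens = [10.0, 11.0, 12.0]
closes = [10.5, 11.5, 12.5]
print(return_features(opens, closes, t=2, m=1))
```

The explicit bounds check matters in Python because a negative index would otherwise wrap around silently and read a price from the end of the list.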

LSTM hyperparameter tuning

Benchmark against other LSTM architectures

We also consider several other LSTM architectures. Note that these architectures involve many more parameters than the one we chose for our empirical study, yet they do not achieve better results in terms of Sharpe ratio. Moreover, we compare our LSTM architecture with a GRU, a simpler variant of the LSTM, and see that it achieves a reasonably good but still slightly lower Sharpe ratio than our chosen LSTM architecture.

References to the LSTM models:

- Single Layer GRU: https://arxiv.org/abs/1412.3555

- Stacked LSTM: https://www.sciencedirect.com/science/article/pii/S1877050920304865

- Stacked Residual LSTM: https://arxiv.org/abs/1610.03098
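The parameter-count comparison above can be illustrated with the standard formulas for recurrent layers. This is a rough sketch only: the layer sizes (3 input features, 25 units) are illustrative assumptions, the helper names are hypothetical, and the formulas use one bias vector per gate, so implementations that keep separate input and recurrent biases report slightly higher counts.

```python
def lstm_params(input_dim, units):
    """Trainable parameters of one LSTM layer.

    Four gate blocks, each with input weights, recurrent weights and a bias.
    """
    return 4 * (units * (input_dim + units) + units)


def gru_params(input_dim, units):
    """Trainable parameters of one GRU layer (one bias vector per gate).

    Three gate blocks instead of four, hence fewer parameters than an LSTM.
    """
    return 3 * (units * (input_dim + units) + units)


def stacked_lstm_params(input_dim, layer_units):
    """Total parameters of LSTM layers stacked on top of each other."""
    total = 0
    current_input = input_dim
    for units in layer_units:
        total += lstm_params(current_input, units)
        # The next layer receives this layer's hidden state as input
        current_input = units
    return total


# Illustrative sizes only: 3 input features, 25 hidden units
print("single LSTM :", lstm_params(3, 25))
print("single GRU  :", gru_params(3, 25))
print("stacked LSTM:", stacked_lstm_params(3, [25, 25]))
```

With equal sizes a GRU has three quarters of the recurrent parameters of an LSTM, while every additional stacked layer adds a full extra set.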

Acknowledgements

The first author gratefully acknowledges the NTU-India Connect Research Internship Programme which allowed him to carry out part of this research project while visiting the Nanyang Technological University, Singapore.

The second author gratefully acknowledges financial support by his Nanyang Assistant Professorship Grant (NAP Grant) Machine Learning based Algorithms in Finance and Insurance.