Image Classification Using Deep Learning

Learning and building convolutional neural networks using PyTorch. The models were selected in two ways. Some were chosen by the citation count of their papers, using Papers with Code. Others introduce a distinctive idea that departs from the typical CNN architecture, such as using transformers in place of convolutions.

Image classification is a fundamental computer vision task with a wide range of applications, such as self-driving cars, medical imaging and video frame prediction. Each model either introduces a new idea or builds upon an existing one. We'll capture each of these ideas, then experiment with and benchmark the models on predefined criteria.

Try Out In Google Colab

Papers With Implementation

Base Config: { epochs: 10, lr: 0.001, batch_size: 128, img_resolution: 224, optim: adam }.
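The base config can be written as a small Python dataclass. This is a sketch only, not the repository's own config loader, and the field names simply mirror the keys listed above. It also shows why a reduced batch size matters: the number of optimizer steps per epoch grows as the batch shrinks. The example assumes the 50,000-image CIFAR-10 training split.

```python
import math
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class BaseConfig:
    # Illustrative mirror of the base config; not the repository's YAML schema.
    epochs: int = 10
    lr: float = 0.001
    batch_size: int = 128
    img_resolution: int = 224
    optim: str = "adam"


def steps_per_epoch(num_samples: int, batch_size: int) -> int:
    # A loader that keeps the last partial batch yields ceil(N / B) batches per epoch.
    return math.ceil(num_samples / batch_size)


base = BaseConfig()
small = replace(base, batch_size=32)  # e.g. the reduced batch size used for ShuffleNet

CIFAR10_TRAIN = 50_000
print(steps_per_epoch(CIFAR10_TRAIN, base.batch_size))   # 391
print(steps_per_epoch(CIFAR10_TRAIN, small.batch_size))  # 1563
```

When the batch size drops but the learning rate stays at 0.001, a model takes more (and noisier) steps per epoch. Runs with a reduced batch size may therefore not be strictly comparable with the batch-size-128 runs.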

Some architectures, such as SqueezeNet, ShuffleNet, InceptionV3, EfficientNet and Darknet-53, did not run with the base config because of their increased complexity. They were therefore run on Google Colab and Kaggle with a reduced batch size.

Optimizer script: includes an approximation of the optimizer setup used in each original paper. A few of these setups led to NaN loss and were tweaked to make them work.
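We don't know which tweaks the optimizer script applies. A useful first step in any case is to find where the loss first becomes NaN or infinite. The helper below is a hypothetical sketch of that check over a recorded loss history. In a PyTorch loop you would record `loss.item()` at each step.

```python
import math
from typing import Iterable, Optional


def first_non_finite_step(losses: Iterable[float]) -> Optional[int]:
    """Return the index of the first NaN/inf loss, or None if every loss is finite."""
    for step, loss in enumerate(losses):
        if not math.isfinite(loss):
            return step
    return None


history = [2.30, 1.95, 1.71, float("nan"), float("nan")]
print(first_non_finite_step(history))  # 3
```

Once the failing step is known, common remedies include:

- lowering the learning rate;
- clipping gradients with `torch.nn.utils.clip_grad_norm_`;
- adding a warmup phase.

Which of these, if any, the optimizer script uses is not stated here.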

Google Colab provides a GPU with 12 GB of memory, while Kaggle provides one with 16 GB. In the worst case, I reduced the batch size until the model fit on the Kaggle GPU. For reference, my local GPU is an RTX 2070 with 8 GB.
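Reducing the batch size until a model fits can be automated with a simple halving search. This is a sketch under one assumption: `fits` is a hypothetical callback that, in real code, would run one forward/backward pass at the given batch size and return `False` if CUDA raises an out-of-memory error.

```python
from typing import Callable


def largest_fitting_batch_size(
    start: int, fits: Callable[[int], bool], min_size: int = 1
) -> int:
    """Halve the batch size from `start` until `fits(batch_size)` returns True."""
    batch_size = start
    while batch_size >= min_size:
        if fits(batch_size):
            return batch_size
        batch_size //= 2
    raise RuntimeError(f"no batch size >= {min_size} fits in memory")


# Stand-in for a real trial step: pretend anything above 40 runs out of memory.
print(largest_fitting_batch_size(128, lambda b: b <= 40))  # 32
```

Halving only visits powers of two times the starting size. A value such as the `B_S = 96` used for Xception needs a finer search or a manual choice. With `min_size=8`, a model that fails at every size raises an error, which corresponds to the "High Compute Required" cells in the table.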

| CNN Based | Accuracy (%) | Parameters | FLOPs | Configuration | LR-Scheduler (Accuracy) |
|---|---|---|---|---|---|
| AlexNet | 71.27 | 58.32M | 1.13 GFLOPs | - | CyclicLR (79.56) |
| VGGNet | 75.93 | 128.81M | 7.63 GFLOPs | - | - |
| Network In Network | 71.03 | 2.02M | 0.833 GFLOPs | - | - |
| ResNet | 83.39 | 11.18M | 1.82 GFLOPs | - | CyclicLR (74.9) |
| DenseNet-Depth40 | 68.25 | 0.18M | - | B_S = 8 | - |
| MobileNetV1 | 81.72 | 3.22M | 0.582 GFLOPs | - | - |
| MobileNetV2 | 83.99 | 2.24M | 0.318 GFLOPs | - | - |
| GoogLeNet | 80.28 | 5.98M | 1.59 GFLOPs | - | - |
| InceptionV3 | - | - | 209.45 GFLOPs | H_C_R | - |
| Darknet-53 | - | - | 7.14 GFLOPs | H_C_R | - |
| Xception | 85.9 | 20.83M | 4.63 GFLOPs | B_S = 96 | - |
| ResNeXt | - | - | 69.41 GFLOPs | H_C_R | - |
| SENet | 83.39 | 11.23M | 1.82 GFLOPs | - | CyclicLR (78.10) |
| SqueezeNet | 62.2 | 0.73M | 2.64 GFLOPs | B_S = 64 | - |
| ShuffleNet | - | - | 2.03 GFLOPs | B_S = 32 | - |
| EfficientNet-B0 | - | 4.02M | 0.4 GFLOPs | - | - |
| Transformer Based | | | | | |
| ViT | - | 53.59M | - | - | WarmupCosineSchedule (55.34) |
| MLP Based | | | | | |
| MLP-Mixer | 68.52 | 13.63M | - | - | WarmupLinearSchedule (69.5) |
| ResMLP | 65.5 | 14.97M | - | - | - |

B_S - Batch Size \
H_C_R - High Compute Required
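The Parameters and FLOPs columns follow from simple per-layer formulas. The worked example below covers a single convolution: the first layer of a torchvision-style AlexNet (64 filters of 11x11, stride 4, padding 2) on a 224x224 RGB input. Many PyTorch profilers report multiply-accumulates (MACs) rather than FLOPs, and one MAC is roughly two FLOPs. We don't know which convention produced the table, so this is a sketch, not a reproduction of its numbers.

```python
def conv2d_output_size(in_size: int, kernel: int, stride: int = 1, padding: int = 0) -> int:
    # Standard convolution output size: floor((H + 2P - K) / S) + 1
    return (in_size + 2 * padding - kernel) // stride + 1


def conv2d_params(in_ch: int, out_ch: int, kernel: int, bias: bool = True) -> int:
    # One K x K x C_in filter per output channel, plus one bias per output channel.
    return kernel * kernel * in_ch * out_ch + (out_ch if bias else 0)


def conv2d_macs(in_ch: int, out_ch: int, kernel: int, out_h: int, out_w: int) -> int:
    # Each output element costs K * K * C_in multiply-accumulates.
    return kernel * kernel * in_ch * out_ch * out_h * out_w


out = conv2d_output_size(224, kernel=11, stride=4, padding=2)
print(out)                                # 55
print(conv2d_params(3, 64, 11))           # 23296
print(conv2d_macs(3, 64, 11, out, out))   # 70276800
```

Summing these per-layer counts over the whole network gives totals like the ones in the table. Fully connected layers dominate AlexNet's parameter count, while convolutions dominate its compute.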

Note: A few cells are marked as high compute required because the Kaggle GPU was not enough even with batch_size = 8. The accuracy figures are relatively low because each model runs for only 10 epochs; with more epochs the models would converge further. The effect of the learning rate scheduler is likely underestimated here, so try out different schedulers to get the most out of each network.
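The three schedulers named in the table can be sketched as plain functions of the step number. Two assumptions apply:

- The cyclic function follows the standard triangular policy, which is the default mode of `torch.optim.lr_scheduler.CyclicLR`.
- The two warmup functions are common formulations that return a multiplier on the base learning rate; they are not necessarily the exact `WarmupCosineSchedule`/`WarmupLinearSchedule` code used here.

All function names are illustrative.

```python
import math


def cyclic_triangular_lr(step: int, base_lr: float, max_lr: float, step_size: int) -> float:
    # LR rises linearly from base_lr to max_lr over step_size steps, then falls back.
    cycle = math.floor(1 + step / (2 * step_size))
    x = abs(step / step_size - 2 * cycle + 1)
    return base_lr + (max_lr - base_lr) * max(0.0, 1 - x)


def warmup_cosine_factor(step: int, warmup_steps: int, total_steps: int) -> float:
    # Linear warmup to 1.0, then half a cosine wave down to 0 at total_steps.
    if step < warmup_steps:
        return step / max(1, warmup_steps)
    progress = (step - warmup_steps) / max(1, total_steps - warmup_steps)
    return max(0.0, 0.5 * (1.0 + math.cos(math.pi * progress)))


def warmup_linear_factor(step: int, warmup_steps: int, total_steps: int) -> float:
    # Linear warmup to 1.0, then linear decay to 0 at total_steps.
    if step < warmup_steps:
        return step / max(1, warmup_steps)
    return max(0.0, (total_steps - step) / max(1, total_steps - warmup_steps))


base_lr = 0.001  # learning rate from the base config
for step in (0, 50, 100, 550, 1000):
    print(step, base_lr * warmup_cosine_factor(step, 100, 1000),
          base_lr * warmup_linear_factor(step, 100, 1000))
```

In PyTorch, a multiplier function like the warmup ones would typically be passed to `torch.optim.lr_scheduler.LambdaLR` as `lr_lambda`, with `scheduler.step()` called once per optimizer step.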

Google Colab Notebook to tune hyperparameters with Weights and Biases Visualizations
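If the tuning uses a Weights & Biases sweep, the search space is a plain dictionary with `method`, `metric` and `parameters` keys. Below is a hypothetical search space around the base config. It is not taken from the notebook, and the metric name `test_accuracy` must match whatever the training code actually logs.

```python
import math

# Hypothetical sweep around the base config (keys follow the W&B sweep schema).
sweep_config = {
    "method": "random",
    "metric": {"name": "test_accuracy", "goal": "maximize"},
    "parameters": {
        "lr": {"values": [1e-2, 1e-3, 1e-4]},
        "batch_size": {"values": [32, 64, 128]},
        "optim": {"values": ["adam", "sgd"]},
    },
}


def grid_size(config: dict) -> int:
    # Number of runs a "grid" sweep would need to cover every combination.
    return math.prod(len(p["values"]) for p in config["parameters"].values())


print(grid_size(sweep_config))  # 18

# With wandb installed (project name and `train` function are placeholders):
# import wandb
# sweep_id = wandb.sweep(sweep_config, project="cnns-pytorch")
# wandb.agent(sweep_id, function=train, count=10)
```

A random sweep limited with `count=10` samples 10 of the 18 combinations. This matters when each run costs a full training job on a free GPU.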

Create Environment

python -m venv CNNs

source CNNs/bin/activate

git clone https://github.com/Mayurji/CNNs-PyTorch.git

cd CNNs-PyTorch

Installation

pip install -r requirements.txt

Run

python main.py --model=resnet

To Save Model

python main.py --model=resnet --model_save=True

To Create Checkpoint

python main.py --model=resnet --checkpoint=True
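Below is a hypothetical sketch of how a script like `main.py` could parse these flags; the repository's actual parser may differ. One design choice matters for flags written as `--model_save=True`. Using argparse's `type=bool` would turn any non-empty string, including `"False"`, into `True`, so the text is parsed explicitly. `str2bool` is an illustrative helper.

```python
import argparse


def str2bool(value: str) -> bool:
    # Parse "True"/"False"-style strings; type=bool would treat "False" as True.
    lowered = value.lower()
    if lowered in ("true", "1", "yes", "y"):
        return True
    if lowered in ("false", "0", "no", "n"):
        return False
    raise argparse.ArgumentTypeError(f"expected a boolean, got {value!r}")


def build_parser() -> argparse.ArgumentParser:
    parser = argparse.ArgumentParser(description="Train an image classifier")
    parser.add_argument("--model", required=True, help="architecture name, e.g. resnet")
    parser.add_argument("--model_save", type=str2bool, default=False)
    parser.add_argument("--checkpoint", type=str2bool, default=False)
    return parser


args = build_parser().parse_args(["--model=resnet", "--model_save=True"])
print(args.model, args.model_save, args.checkpoint)  # resnet True False
```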

Note: Parameters can be changed in the YAML config file. The module supports only two datasets, MNIST and CIFAR-10, but you can modify the dataset file to include other datasets.
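Adding a dataset mostly means recording three properties:

- its number of input channels (the first layer must accept them);
- its number of classes (the final layer must output them);
- its native image size (images are resized to `img_resolution`).

The registry below is a hypothetical sketch, not the repository's dataset file. `DatasetInfo`, `DATASETS` and `register_dataset` are illustrative names, but the MNIST, CIFAR-10 and CIFAR-100 properties are the standard ones.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DatasetInfo:
    in_channels: int   # 1 for grayscale, 3 for RGB
    native_size: int   # side length of the square images
    num_classes: int


DATASETS = {
    "mnist": DatasetInfo(in_channels=1, native_size=28, num_classes=10),
    "cifar10": DatasetInfo(in_channels=3, native_size=32, num_classes=10),
}


def register_dataset(name: str, info: DatasetInfo) -> None:
    if name in DATASETS:
        raise ValueError(f"dataset {name!r} already registered")
    DATASETS[name] = info


def upscale_factor(name: str, img_resolution: int = 224) -> float:
    # How much each image is enlarged to reach the configured resolution.
    return img_resolution / DATASETS[name].native_size


register_dataset("cifar100", DatasetInfo(in_channels=3, native_size=32, num_classes=100))
print(upscale_factor("mnist"), upscale_factor("cifar10"))  # 8.0 7.0
```

Because MNIST is grayscale, a model built for RGB input needs either a 1-channel first convolution or images repeated across three channels.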

Plotting

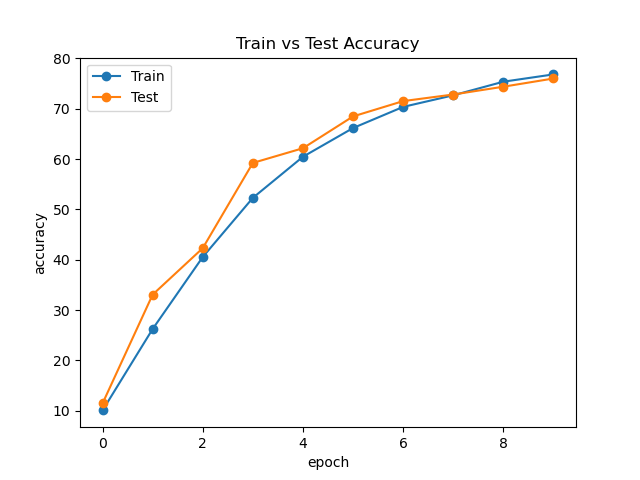

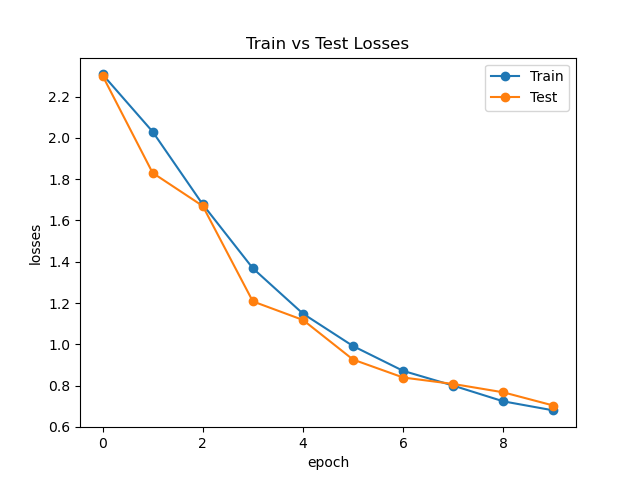

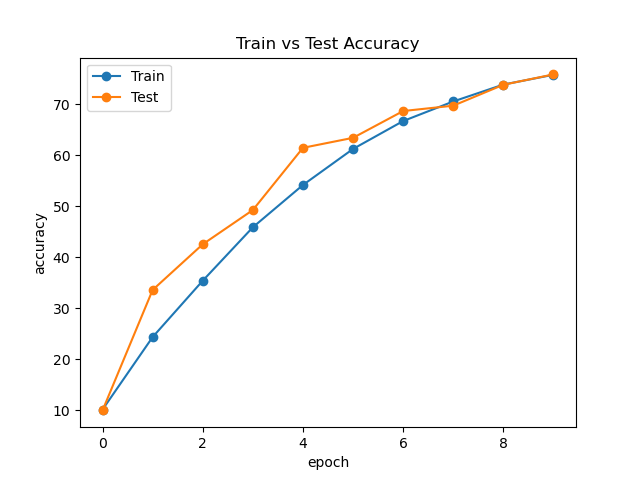

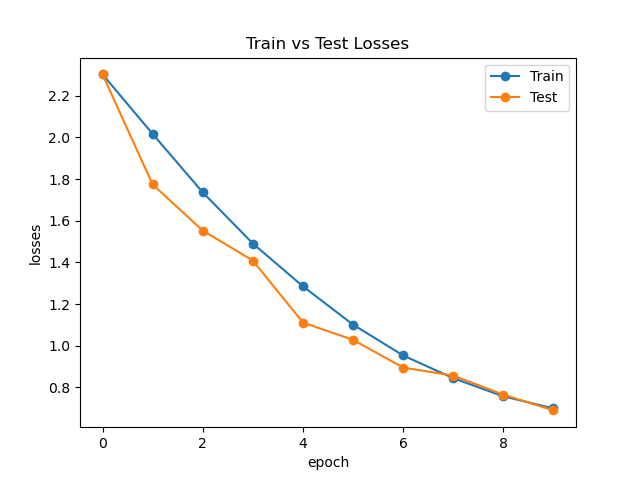

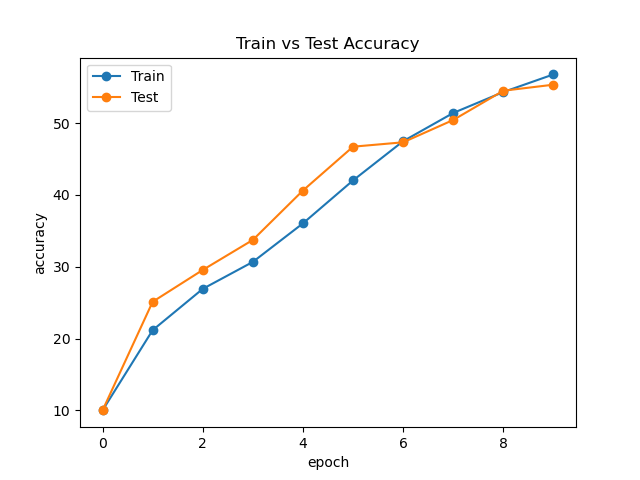

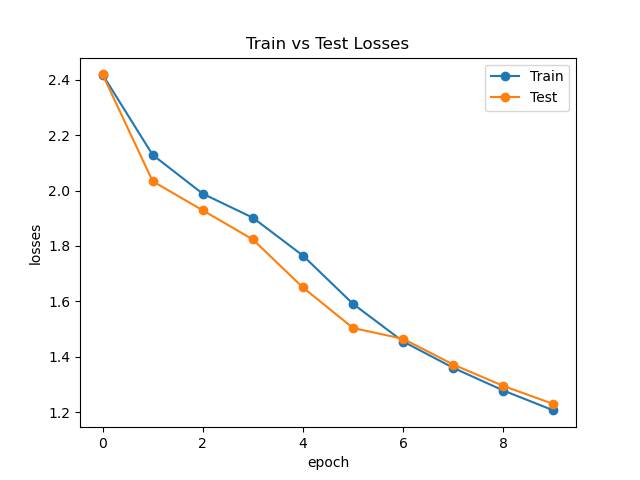

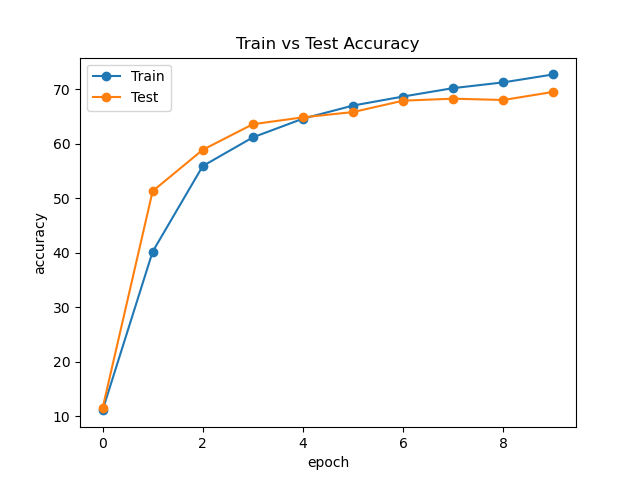

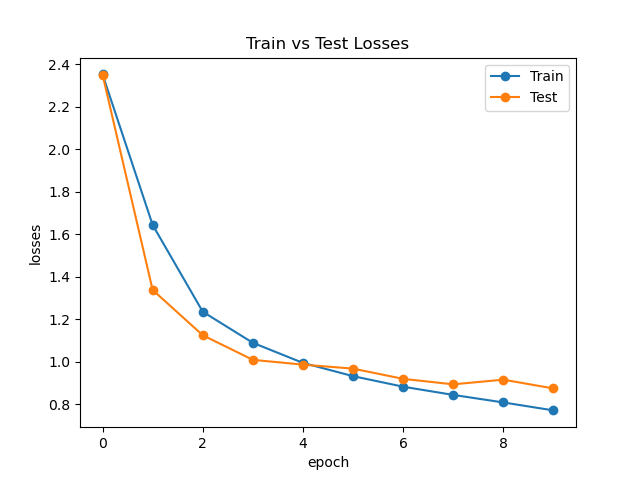

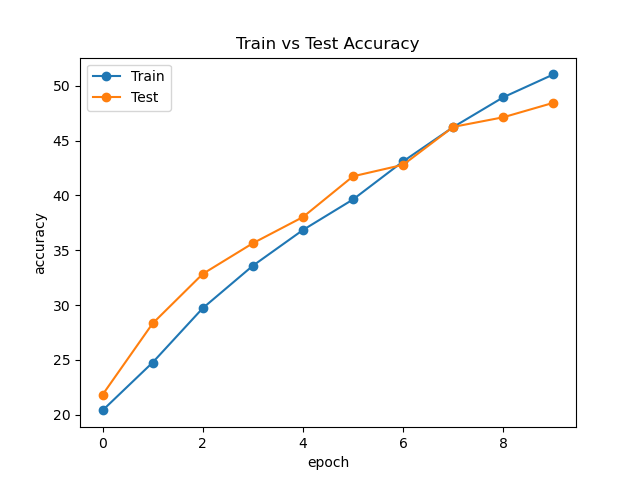

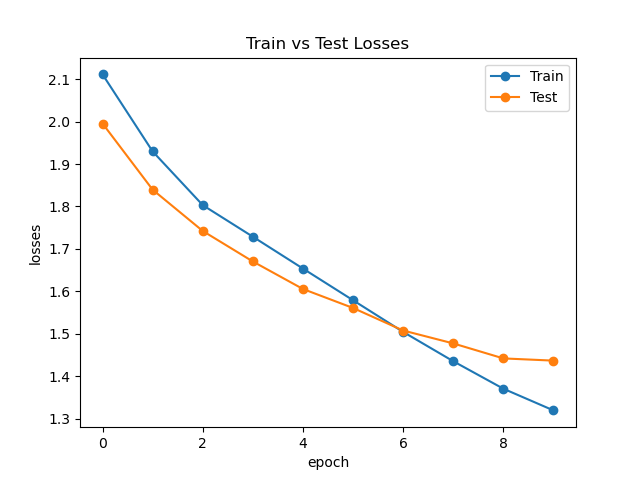

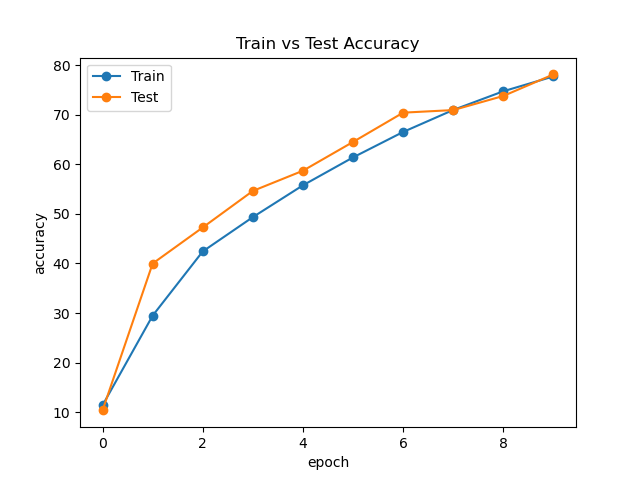

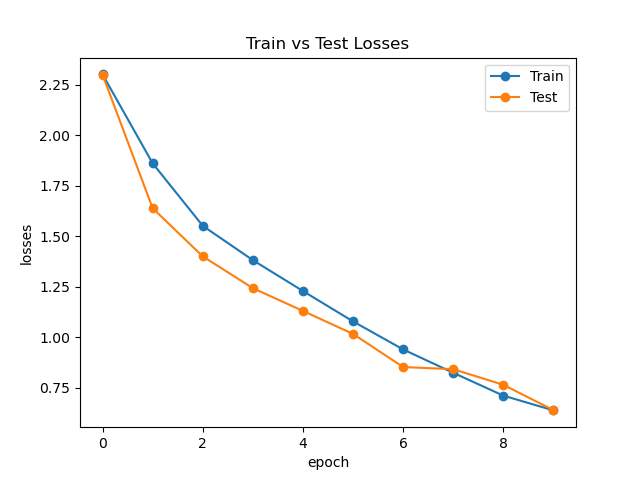

By default, the train vs. test accuracy and train vs. test loss plots for each model are stored in the plot folder.

| Model | Train vs Test Accuracy | Train vs Test Loss |
|---|---|---|
| AlexNet | | |
| ResNet | | |
| ViT | | |
| MLP-Mixer | | |
| ResMLP | | |
| SENet | | |