Net2Net

Code accompanying the NeurIPS 2020 oral paper

tl;dr Our approach distills the residual information of one model with respect to another's and thereby enables translation between fixed off-the-shelf expert models such as BERT and BigGAN without having to modify or finetune them.

Requirements

A suitable conda environment named net2net can be created

and activated with:

conda env create -f environment.yaml

conda activate net2net

Datasets

- CelebA: Create a symlink 'data/CelebA' pointing to a folder which contains the following files:

These files can be obtained here.. ├── identity_CelebA.txt ├── img_align_celeba ├── list_attr_celeba.txt └── list_eval_partition.txt - CelebA-HQ: Create a symlink

data/celebahqpointing to a folder containing

the.npyfiles of CelebA-HQ (instructions to obtain them can be found in

the PGGAN repository). - FFHQ: Create a symlink

data/ffhqpointing to theimages1024x1024folder

obtained from the FFHQ repository. - Anime Faces: First download the face images from the Anime Crop dataset and then apply

the preprocessing of FFHQ to those images. We only keep images

where the underlying dlib face recognition model recognizes

a face. Finally, create a symlinkdata/animewhich contains the processed anime face images. - Oil Portraits: Download here.

Unpack the content and place the files indata/portraits.

ML4Creativity Demo

We include a streamlit demo, which utilizes our

approach to demonstrate biases of datasets and their creative applications.

More information can be found in our paper A Note on Data Biases in Generative

Models from the Machine Learning for Creativity and Design at NeurIPS 2020. Download the models from

- 2020-11-30T23-32-28_celeba_celebahq_ffhq_256

- 2020-12-02T13-58-19_anime_photography_256

- 2020-12-02T16-19-39_portraits_photography_256

and place them into logs. Run the demo with

streamlit run ml4cad.py

Training

Our code uses Pytorch-Lightning and thus natively supports

things like 16-bit precision, multi-GPU training and gradient accumulation. Training details for any model need to be specified in a dedicated .yaml file.

In general, such a config file is structured as follows:

model:

base_learning_rate: 4.5e-6

target: <path/to/lightning/module>

params:

...

data:

target: translation.DataModuleFromConfig

params:

batch_size: ...

num_workers: ...

train:

target: <path/to/train/dataset>

params:

...

validation:

target: <path/to/validation/dataset>

params:

...

Any Pytorch-Lightning model specified under model.target is then trained on the specified data

by running the command:

python translation.py --base <path/to/yaml> -t --gpus 0,

All available Pytorch-Lightning trainer arguments can be added via the command line, e.g. run

python translation.py --base <path/to/yaml> -t --gpus 0,1,2,3 --precision 16 --accumulate_grad_batches 2

to train a model on 4 GPUs using 16-bit precision and a 2-step gradient accumulation.

More details are provided in the examples below.

Training a cINN

Training a cINN for network-to-network translation usually utilizes the Lighnting Module net2net.models.flows.flow.Net2NetFlow

and makes a few further assumptions on the configuration file and model interface:

model:

base_learning_rate: 4.5e-6

target: net2net.models.flows.flow.Net2NetFlow

params:

flow_config:

target: <path/to/cinn>

params:

...

cond_stage_config:

target: <path/to/network1>

params:

...

first_stage_config:

target: <path/to/network2>

params:

...

Here, the entries under flow_config specifies the architecture and parameters of the conditional INN;

cond_stage_config specifies the first network whose representation is to be translated into another network

specified by first_stage_config. Our model net2net.models.flows.flow.Net2NetFlow expects that the first

network has a .encode() method which produces the representation of interest, while the second network should

have an encode() and a decode() method, such that both of them applied sequentially produce the networks output. This allows for a modular combination of arbitrary models of interest. For more details, see the examples below.

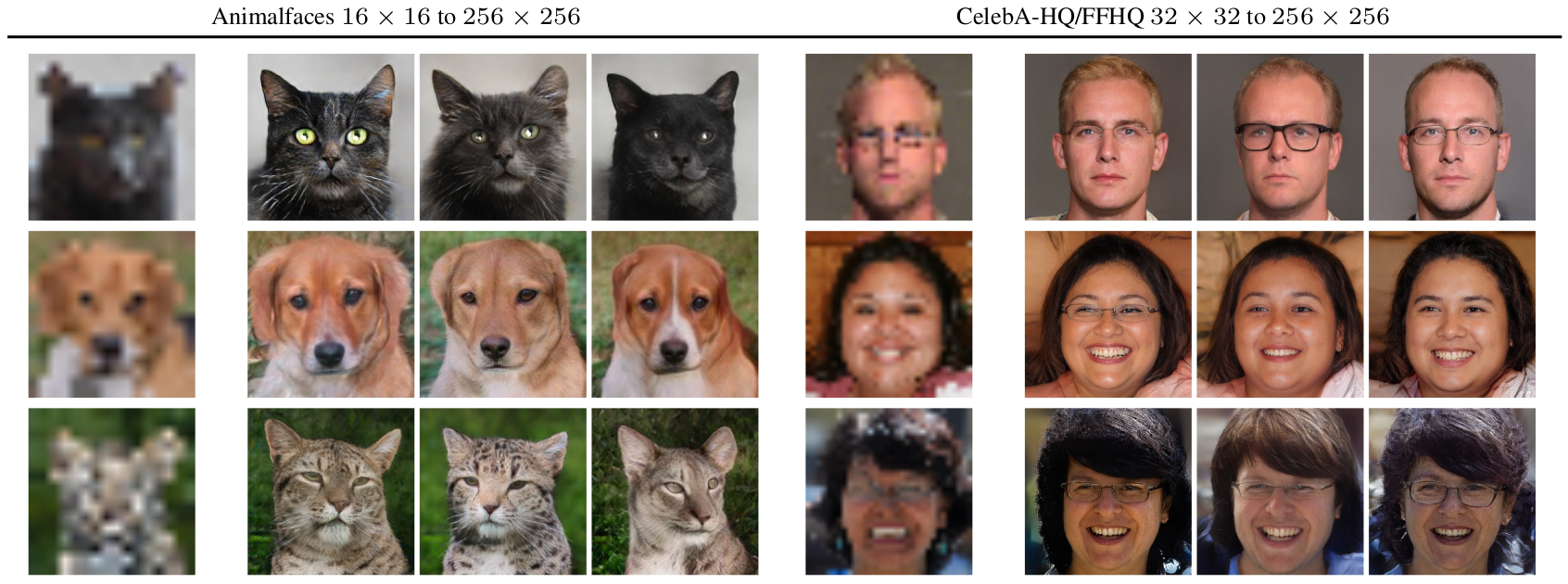

Training a cINN - Superresolution

Training details for a cINN to concatenate two autoencoders from different image scales for stochastic

superresolution are specified in configs/translation/faces32-to-256.yaml.

To train a model for translating from 32 x 32 images to 256 x 256 images on GPU 0, run

python translation.py --base configs/translation/faces32-to-faces256.yaml -t --gpus 0,

and specify any additional training commands as described above. Note that this setup requires two

pretrained autoencoder models, one on 32 x 32 images and the other on 256 x 256. If you want to

train them yourself on a combination of FFHQ and CelebA-HQ, run

python translation.py --base configs/autoencoder/faces32.yaml -t --gpus <n>,

for the 32 x 32 images; and

python translation.py --base configs/autoencoder/faces256.yaml -t --gpus <n>,

for the model on 256 x 256 images. After training, adopt the corresponding model paths in configs/translation/faces32-to-faces256.yaml. Additionally, we provide weights of pretrained autoencoders for both settings:

Weights 32x32; Weights256x256.

To run the training as described above, put them into

logs/2020-10-16T17-11-42_FacesFQ32x32/checkpoints/last.ckptand

logs/2020-09-16T16-23-39_FacesXL256z128/checkpoints/last.ckpt, respectively.

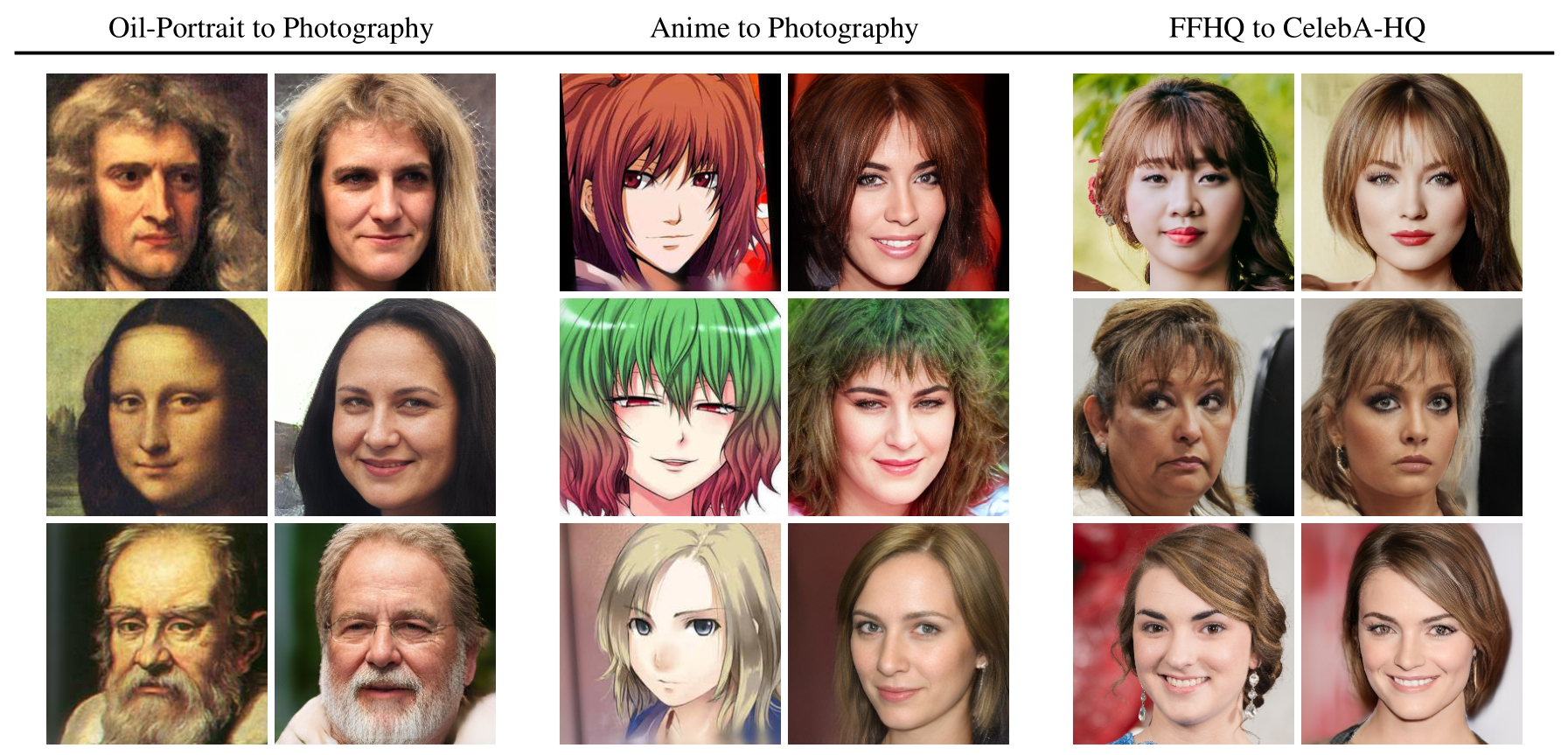

Training a cINN - Unpaired Translation

All training scenarios for unpaired translation are specified in the configs in configs/creativity.

We provide code and pretrained autoencoder models for three different translation tasks:

- Anime ⟷ Photography; see

configs/creativity/anime_photography_256.yaml.

Download autoencoder checkpoint (Download Anime+Photography) and place intologs/2020-09-30T21-40-22_AnimeAndFHQ/checkpoints/epoch=000007.ckpt. - Oil-Portrait ⟷ Photography; see

configs/creativity/portraits_photography_256.yaml

Download autoencoder checkpoint (Download Portrait+Photography) and place intologs/2020-09-29T23-47-10_PortraitsAndFFHQ/checkpoints/epoch=000004.ckpt. - FFHQ ⟷ CelebA-HQ ⟷ CelebA; see

configs/creativity/celeba_celebahq_ffhq_256.yaml

Download autoencoder checkpoint (Download FFHQ+CelebAHQ+CelebA) and place intologs/2020-09-16T16-23-39_FacesXL256z128/checkpoints/last.ckpt.

Note that this is the same autoencoder checkpoint as for the stochastic superresolution experiment.

To train a cINN on one of these unpaired transfer tasks using the first GPU, simply run

python translation.py --base configs/translation/<task-of-interest>.yaml -t --gpus 0,

where <task-of-interest>.yaml is one of portraits_photography_256.yaml, celeba_celebahq_ffhq_256.yaml

or anime_photography_256.yaml. Providing additional arguments to the pytorch-lightning

trainer object is also possible and described above.

BibTeX

@misc{rombach2020networktonetwork,

title={Network-to-Network Translation with Conditional Invertible Neural Networks},

author={Robin Rombach and Patrick Esser and Björn Ommer},

year={2020},

eprint={2005.13580},

archivePrefix={arXiv},

primaryClass={cs.CV}

}