Less is More: Pay Less Attention in Vision Transformers

Official PyTorch implementation of Less is More: Pay Less Attention in Vision Transformers.

By Zizheng Pan, Bohan Zhuang, Haoyu He, Jing Liu and Jianfei Cai.

In our paper, we present a novel Less attention vIsion Transformer (LIT), building upon the fact that convolutions, fully-connected (FC) layers, and self-attentions have almost equivalent mathematical expressions for processing image patch sequences. LIT uses pure multi-layer perceptrons (MLPs) to encode rich local patterns in the early stages while applying self-attention modules to capture longer dependencies in deeper layers. Moreover, we further propose a learned deformable token merging module to adaptively fuse informative patches in a non-uniform manner.

If you use this code for a paper please cite:

@article{pan2021less,

title={Less is More: Pay Less Attention in Vision Transformers},

author={Pan, Zizheng and Zhuang, Bohan and He, Haoyu and Liu, Jing and Cai, Jianfei},

journal={arXiv preprint arXiv:2105.14217},

year={2021}

}

Usage

First, clone this repository.

git clone https://github.com/MonashAI/LIT

Next, create a conda virtual environment.

# Make sure you have a NVIDIA GPU.

cd LIT/classification

bash setup_env.sh [conda_install_path] [env_name]

# For example

bash setup_env.sh /home/anaconda3 lit

Note: We use PyTorch 1.7.1 with CUDA 10.1 for all experiments. The setup_env.sh has illustrated all dependencies we used in our experiments. You may want to edit this file to install a different version of PyTorch or any other packages.

Image Classification on ImageNet

We provide baseline LIT models pretrained on ImageNet-1K. For training and evaluation code, please refer to classification.

| Name | Params (M) | FLOPs (G) | Top-1 Acc. (%) | Model | Log |

|---|---|---|---|---|---|

| LIT-Ti | 19 | 3.6 | 81.1 | google drive/github | log |

| LIT-S | 27 | 4.1 | 81.5 | google drive/github | log |

| LIT-M | 48 | 8.6 | 83.0 | google drive/github | log |

| LIT-B | 86 | 15.0 | 83.4 | google drive/github | log |

Object Detection on COCO

For training and evaluation code, please refer to detection.

RetinaNet

| Backbone | Params (M) | Lr schd | box mAP | Config | Model | Log |

|---|---|---|---|---|---|---|

| LIT-Ti | 30 | 1x | 41.6 | config | github | log |

| LIT-S | 39 | 1x | 41.6 | config | github | log |

Mask R-CNN

| Backbone | Params (M) | Lr schd | box mAP | mask mAP | Config | Model | Log |

|---|---|---|---|---|---|---|---|

| LIT-Ti | 40 | 1x | 42.0 | 39.1 | config | github | log |

| LIT-S | 48 | 1x | 42.9 | 39.6 | config | github | log |

Semantic Segmentation on ADE20K

For training and evaluation code, please refer to segmentation.

Semantic FPN

| Backbone | Params (M) | Iters | mIoU | Config | Model | Log |

|---|---|---|---|---|---|---|

| LIT-Ti | 24 | 8k | 41.3 | config | github | log |

| LIT-S | 32 | 8k | 41.7 | config | github | log |

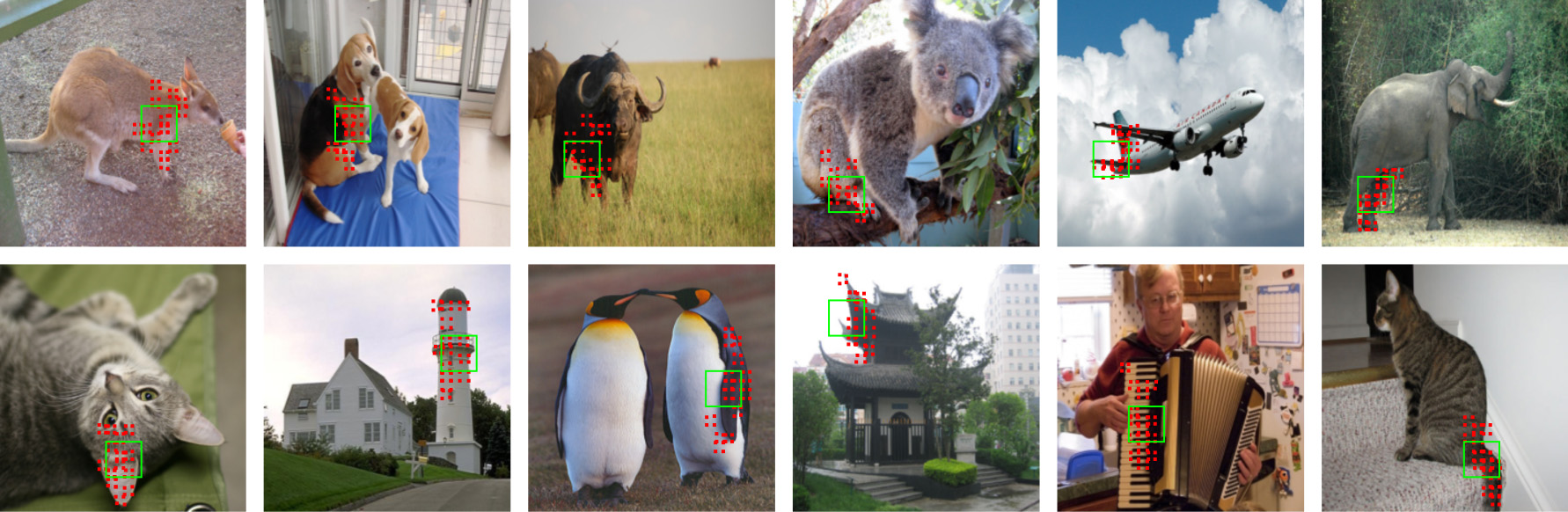

Offsets Visualisation

We provide a script for visualising the learned offsets by the proposed deformable token merging modules (DTM). For example,

# activate your virtual env

conda activate lit

cd classification/code_for_lit_ti

# visualize

python visualize_offset.py --model lit_ti --resume [path/to/lit_ti.pth] --vis_image visualization/demo.JPEG

The plots will be automatically saved under visualization/, with a folder named by the name of the example image.