Point-Spatio-Temporal-Convolution

PSTNet: Point Spatio-Temporal Convolution on Point Cloud Sequences.

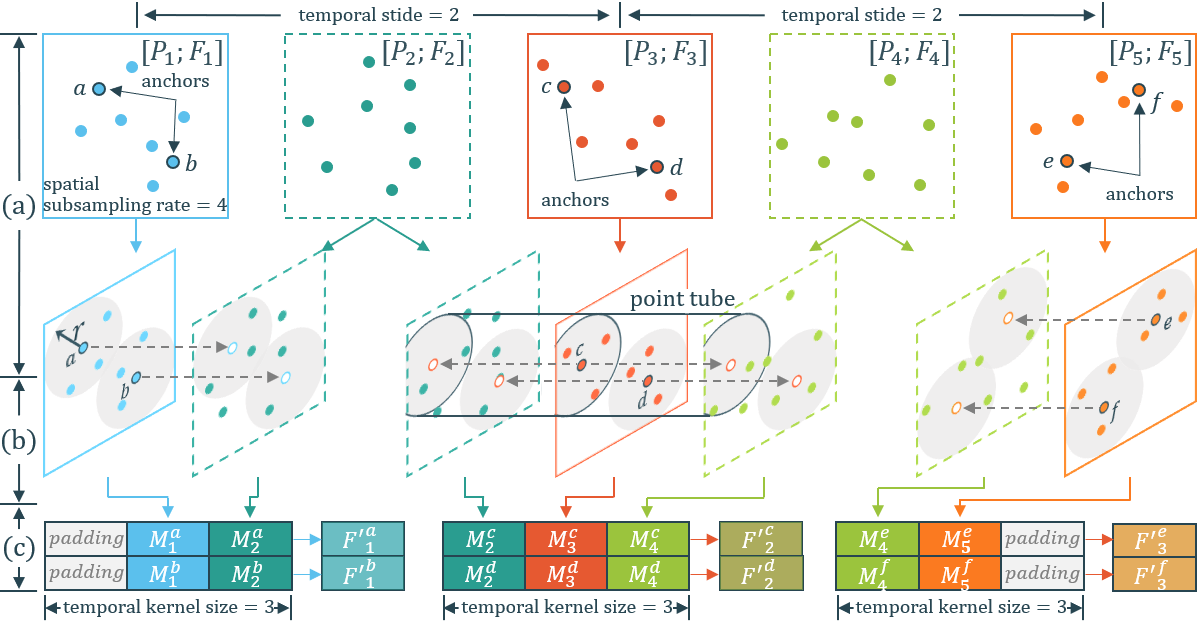

Point cloud sequences are irregular and unordered in the spatial dimension while exhibiting regularities and order in the temporal dimension. Therefore, existing grid-based convolutions for conventional video processing cannot be directly applied to spatio-temporal modeling of raw point cloud sequences. In this paper, we propose a point spatio-temporal (PST) convolution to achieve informative representations of point cloud sequences. The proposed PST convolution first disentangles space and time in point cloud sequences. Then, a spatial convolution is employed to capture the local structure of points in 3D space, and a temporal convolution is used to model the dynamics of the spatial regions along the time dimension.
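The decoupling described above can be illustrated with a toy, dependency-free sketch. It is not the implementation in this repository: the function names are hypothetical, a plain mean over neighbours stands in for the learned spatial kernel, and fixed scalar weights stand in for the learned temporal kernel.

```python
def sq_dist(a, b):
    """Squared Euclidean distance between two 3D points."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def spatial_aggregate(anchor, points, feats, radius):
    """Spatial step: mean feature of the points within `radius` of `anchor`.

    Returns a zero vector when the neighbourhood is empty.
    """
    dim = len(feats[0])
    acc = [0.0] * dim
    count = 0
    for p, f in zip(points, feats):
        if sq_dist(anchor, p) <= radius * radius:
            count += 1
            for i in range(dim):
                acc[i] += f[i]
    if count == 0:
        return acc
    return [a / count for a in acc]


def pst_conv_toy(frames, feats, anchors, radius, temporal_weights):
    """Toy space-then-time aggregation over one temporal window.

    frames: list of T frames, each a list of (x, y, z) points
    feats: list of T frames, each a list of per-point feature vectors
    anchors: anchor points shared by every frame in the window (assumption)
    temporal_weights: one scalar per frame in the window (assumption)
    """
    dim = len(feats[0][0])
    out = []
    for anchor in anchors:
        fused = [0.0] * dim
        for w, pts, fts in zip(temporal_weights, frames, feats):
            # Space first: summarise the local region in this frame.
            local = spatial_aggregate(anchor, pts, fts, radius)
            # Then time: combine the per-frame summaries.
            for i in range(dim):
                fused[i] += w * local[i]
        out.append(fused)
    return out


frames = [
    [(0.0, 0.0, 0.0), (5.0, 0.0, 0.0)],
    [(0.1, 0.0, 0.0), (5.0, 0.0, 0.0)],
    [(0.2, 0.0, 0.0), (5.0, 0.0, 0.0)],
]
feats = [
    [[1.0], [10.0]],
    [[2.0], [10.0]],
    [[3.0], [10.0]],
]
result = pst_conv_toy(frames, feats, [(0.1, 0.0, 0.0)], 0.5, [0.25, 0.5, 0.25])
```

In this example the distant point at x = 5 never enters the neighbourhood, so the output depends only on how the nearby point's feature changes across the three frames.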

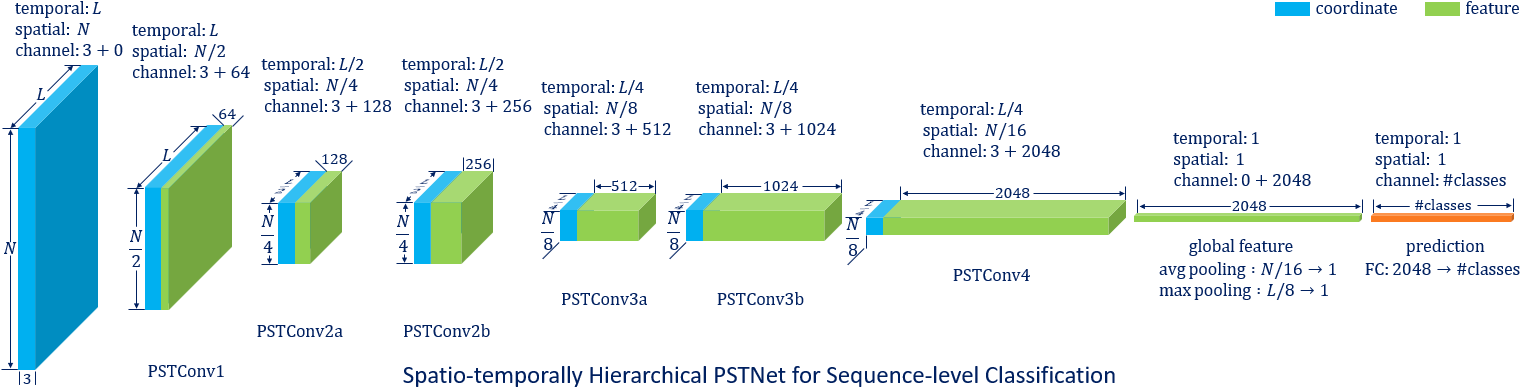

Furthermore, we incorporate the proposed PST convolution into a deep network, namely PSTNet, to extract features of 3D point cloud sequences in a spatio-temporally hierarchical manner.
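To give a feel for what a spatio-temporal hierarchy means in terms of tensor sizes, the sketch below tracks how the number of frames and points could shrink from stage to stage. All stage settings are hypothetical and are not taken from the PSTNet configuration. The frame count uses the standard strided-convolution length formula, and the point count assumes subsampling by an integer rate.

```python
def stage_output_shape(num_frames, num_points, temporal_kernel,
                       temporal_stride, temporal_padding, spatial_stride):
    """Frames and points left after one stage (illustrative assumptions only)."""
    out_frames = (num_frames + 2 * temporal_padding - temporal_kernel) // temporal_stride + 1
    out_points = num_points // spatial_stride
    return out_frames, out_points


def hierarchy_shapes(num_frames, num_points, stages):
    """Apply a list of (kernel, stride, padding, spatial_stride) stages in order."""
    shapes = []
    for kernel, stride, padding, spatial_stride in stages:
        num_frames, num_points = stage_output_shape(
            num_frames, num_points, kernel, stride, padding, spatial_stride
        )
        shapes.append((num_frames, num_points))
    return shapes


# Hypothetical three-stage network on a 24-frame clip with 2048 points per frame.
shapes = hierarchy_shapes(24, 2048, [(1, 1, 0, 2), (3, 2, 1, 2), (3, 2, 1, 2)])
for frames_left, points_left in shapes:
    print(frames_left, points_left)
```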

Installation

The code is tested with Red Hat Enterprise Linux Workstation release 7.7 (Maipo), g++ (GCC) 8.3.1, PyTorch v1.2, CUDA 10.2 and cuDNN v7.6.

Install PyTorch v1.2:

pip install torch==1.2.0 torchvision==0.4.0
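After installing, a short script such as the following can report which PyTorch, CUDA and cuDNN versions are visible to Python, for comparison with the tested configuration above. The helper name `check_environment` is hypothetical. The sketch returns empty values rather than failing when PyTorch is not installed.

```python
import importlib.util


def check_environment():
    """Collect PyTorch/CUDA/cuDNN version information, if PyTorch is installed."""
    info = {"torch": None, "cuda": None, "cudnn": None, "gpu_available": False}
    if importlib.util.find_spec("torch") is None:
        return info  # PyTorch is not installed in this environment
    import torch

    info["torch"] = torch.__version__
    info["cuda"] = torch.version.cuda  # None for CPU-only builds
    info["cudnn"] = torch.backends.cudnn.version()  # None if cuDNN is unavailable
    info["gpu_available"] = bool(torch.cuda.is_available())
    return info


if __name__ == "__main__":
    for key, value in check_environment().items():
        print(f"{key}: {value}")
```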

Compile the CUDA layers for PointNet++, which are used for furthest point sampling (FPS) and radius neighbour search:

cd modules
python setup.py install

To check that the compilation succeeded, run python modules/pst_convolutions.py and confirm that a forward pass completes without errors.
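For reference, the two operations provided by the compiled layers, furthest point sampling and radius neighbour search, can be sketched in plain Python. This is a slow, illustrative version with hypothetical function names. It does not reproduce the CUDA kernels or their interface.

```python
def sq_dist(a, b):
    """Squared Euclidean distance between two points."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def furthest_point_sampling(points, k, start=0):
    """Return indices of k points chosen greedily to be far apart."""
    if not 0 < k <= len(points):
        raise ValueError("k must be between 1 and the number of points")
    selected = [start]
    # Distance from every point to its nearest already-selected point.
    min_d = [sq_dist(p, points[start]) for p in points]
    while len(selected) < k:
        nxt = max(range(len(points)), key=lambda i: min_d[i])
        selected.append(nxt)
        for i, p in enumerate(points):
            d = sq_dist(p, points[nxt])
            if d < min_d[i]:
                min_d[i] = d
    return selected


def radius_search(points, center, radius, max_neighbours=None):
    """Return indices of points within `radius` of `center`, in input order."""
    r2 = radius * radius
    idx = [i for i, p in enumerate(points) if sq_dist(p, center) <= r2]
    return idx if max_neighbours is None else idx[:max_neighbours]


points = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (10.0, 0.0, 0.0), (0.5, 0.0, 0.0)]
sampled = furthest_point_sampling(points, 3)
neighbours = radius_search(points, (0.0, 0.0, 0.0), 1.0)
```

The sampled indices can serve as anchor points, and the radius search then gathers the local neighbourhood around each anchor.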

Install Mayavi for point cloud visualization (optional). A desktop environment is required.

Citation

If you find our work useful in your research, please consider citing:

@inproceedings{fan2021pstnet,
  title={PSTNet: Point Spatio-Temporal Convolution on Point Cloud Sequences},
  author={Hehe Fan and Xin Yu and Yuhang Ding and Yi Yang and Mohan Kankanhalli},
  booktitle={International Conference on Learning Representations},
  year={2021}
}

GitHub

https://github.com/hehefan/Point-Spatio-Temporal-Convolution