pvcnn

[NeurIPS 2019, Spotlight] Point-Voxel CNN for Efficient 3D Deep Learning.

@inproceedings{liu2019pvcnn,

title={Point-Voxel CNN for Efficient 3D Deep Learning},

author={Liu, Zhijian and Tang, Haotian and Lin, Yujun and Han, Song},

booktitle={Advances in Neural Information Processing Systems},

year={2019}

}

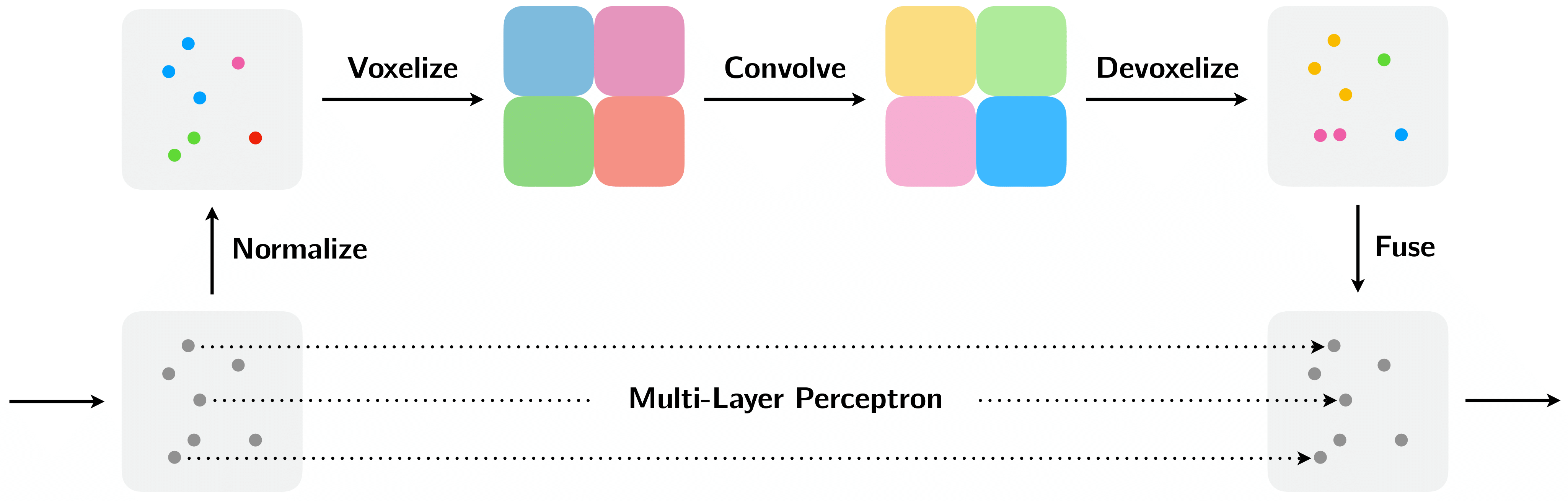

Overview

We release the PyTorch code of the Point-Voxel CNN.

Prerequisites

The code is built with the following libraries:

- Python >= 3.6

- PyTorch >= 1.3

- tensorboardX >= 1.2

- h5py >= 2.9.0

- numba

- tqdm

For point data pre-processing, you may need plyfile.

Data Preparation

S3DIS

We follow the data pre-processing used in PointCNN.

The code for preprocessing the S3DIS dataset is located in scripts/s3dis/.

You should first download the dataset from here, then run

python scripts/s3dis/prepare_data.py -d [path to unzipped dataset dir]

Code

This code is based on PointCNN and Pointnet2_PyTorch.

We modified the code to use a PyTorch-style data layout.

The core implementation of PVConv is in modules/pvconv.py. Its key idea takes only a few lines of code:

voxel_features, voxel_coords = voxelize(features, coords)

voxel_features = voxel_layers(voxel_features)

voxel_features = trilinear_devoxelize(voxel_features, voxel_coords, resolution)

fused_features = voxel_features + point_layers(features)
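The four lines above are pseudocode. To make the data flow concrete, here is a rough, self-contained NumPy sketch (NumPy rather than PyTorch to keep it dependency-light). It is not the code in modules/pvconv.py. The uniform-scale coordinate normalization, the average-pooling voxelization, and the stand-ins for voxel_layers and point_layers (plain channel-mixing matrices `w_voxel` and `w_point` instead of 3D convolutions and MLPs) are all assumptions. Features use a channels-first (C, N) layout.

```python
import numpy as np


def voxelize(features, coords, resolution):
    """Average per-point features (C, N) into a (C, R, R, R) grid.

    Returns the grid and each point's continuous grid coordinates (3, N).
    Assumption: coords are rescaled into [0, R - 1] with one uniform scale.
    """
    mins = coords.min(axis=1, keepdims=True)
    span = (coords.max(axis=1) - coords.min(axis=1)).max()
    voxel_coords = (coords - mins) / (span + 1e-8) * (resolution - 1)
    # Nearest voxel index per point, flattened to one integer (C order).
    idx = np.clip(np.round(voxel_coords).astype(int), 0, resolution - 1)
    flat = (idx[0] * resolution + idx[1]) * resolution + idx[2]
    c = features.shape[0]
    sums = np.zeros((resolution ** 3, c))
    np.add.at(sums, flat, features.T)  # unbuffered scatter-add into voxels
    counts = np.bincount(flat, minlength=resolution ** 3)
    means = sums / np.maximum(counts, 1)[:, None]  # empty voxels stay 0
    return means.T.reshape(c, resolution, resolution, resolution), voxel_coords


def trilinear_devoxelize(voxel_features, voxel_coords, resolution):
    """Interpolate (C, R, R, R) voxel features back to points (requires R >= 2)."""
    x = np.clip(voxel_coords, 0, resolution - 1)
    lo = np.clip(np.floor(x).astype(int), 0, resolution - 2)
    frac = x - lo  # offset inside the cell, in [0, 1]
    out = np.zeros((voxel_features.shape[0], x.shape[1]))
    for dx in (0, 1):
        for dy in (0, 1):
            for dz in (0, 1):
                # Weight of corner (dx, dy, dz) is the product of 1D linear weights.
                wx = frac[0] if dx else 1 - frac[0]
                wy = frac[1] if dy else 1 - frac[1]
                wz = frac[2] if dz else 1 - frac[2]
                corner = voxel_features[:, lo[0] + dx, lo[1] + dy, lo[2] + dz]
                out += corner * (wx * wy * wz)
    return out


def pvconv(features, coords, resolution, w_voxel, w_point):
    voxel_features, voxel_coords = voxelize(features, coords, resolution)
    # Hypothetical stand-in for voxel_layers: a per-voxel channel mix
    # (a 1x1x1 conv), not the 3D convolutions used in the paper.
    voxel_features = np.einsum("oc,cxyz->oxyz", w_voxel, voxel_features)
    voxel_features = trilinear_devoxelize(voxel_features, voxel_coords, resolution)
    # Hypothetical stand-in for point_layers: one shared linear layer per point.
    return voxel_features + w_point @ features


rng = np.random.default_rng(0)
feats, xyz = rng.normal(size=(4, 1000)), rng.uniform(size=(3, 1000))
out = pvconv(feats, xyz, 8, rng.normal(size=(16, 4)), rng.normal(size=(16, 4)))
print(out.shape)  # (16, 1000)
```

The voxel branch sees neighborhoods through a coarse grid, while the point branch keeps per-point detail; the final addition fuses the two, mirroring the last line of the pseudocode.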

Pretrained Models

Here we provide some of the pretrained models. The accuracy may differ slightly from the numbers reported in the paper, since we retrained some of the models for reproducibility.

S3DIS

The following table compares PVCNN and PVCNN++ with the reported performance of 3D-UNet and PointCNN.

The accuracy is evaluated following the protocol described here. This list is continually updated.

| Model | Overall Acc (%) | mIoU (%) |
|---|---|---|
| 3D-UNet | 85.12 | 54.93 |
| PVCNN | 86.16 | 56.17 |
| PointCNN | 85.91 | 57.26 |
| PVCNN++ | 87.14 | 58.33 |
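For reference, the two columns use the standard segmentation metrics: overall accuracy is the fraction of correctly labeled points, and mIoU averages, over classes, TP / (TP + FP + FN). The NumPy sketch below computes both from a confusion matrix. It is an illustration, not the repo's evaluation script: the helper names are hypothetical, and skipping classes that appear in neither predictions nor labels is our assumption. For S3DIS you would pass num_classes=13.

```python
import numpy as np


def confusion_matrix(pred, target, num_classes):
    """Rows are ground-truth classes, columns are predicted classes."""
    pred, target = np.asarray(pred), np.asarray(target)
    idx = target * num_classes + pred
    return np.bincount(idx, minlength=num_classes ** 2).reshape(num_classes, num_classes)


def overall_accuracy(cm):
    # Fraction of all points whose predicted label matches the ground truth.
    return np.trace(cm) / cm.sum()


def mean_iou(cm):
    tp = np.diag(cm).astype(float)
    fp = cm.sum(axis=0) - tp  # predicted as class k but labeled otherwise
    fn = cm.sum(axis=1) - tp  # labeled class k but predicted otherwise
    union = tp + fp + fn
    # Assumption: classes absent from both predictions and labels are skipped.
    valid = union > 0
    return (tp[valid] / union[valid]).mean()


cm = confusion_matrix([0, 1, 1, 1, 2], [0, 0, 1, 1, 2], num_classes=3)
print(overall_accuracy(cm))  # 0.8
print(mean_iou(cm))  # about 0.7222 (= 13/18)
```

Because mIoU weights every class equally, a model can rank higher on overall accuracy but lower on mIoU, as PointCNN and PVCNN do in the table.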

Testing Pretrained Models

To test the downloaded pretrained models on S3DIS, run

python train.py [config-file] --devices [gpu-ids] --evaluate --configs.train.best_checkpoint_path [path to your models]

For instance, to evaluate PVCNN on GPUs 0 and 1 (with 4096 points, trained on Areas 1-4 and 6), run

python train.py configs/s3dis/pvcnn/area5.py --devices 0,1 --evaluate --configs.train.best_checkpoint_path s3dis.pvcnn.area5.pth.tar
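The parenthetical in the evaluation example above combines two conventions: a leave-one-area-out split (the area5 config trains on Areas 1-4 and 6 and tests on Area 5) and a fixed number of points per input. The sketch below illustrates both. We read "4096 points" as a fixed per-block input size, as in PointNet-style S3DIS pipelines; that reading is an assumption, and `split_areas` and `sample_fixed` are hypothetical helpers, not functions from this repo.

```python
import numpy as np

S3DIS_AREAS = (1, 2, 3, 4, 5, 6)


def split_areas(holdout):
    """Leave-one-area-out split: train on every other area, test on `holdout`."""
    if holdout not in S3DIS_AREAS:
        raise ValueError(f"unknown S3DIS area: {holdout}")
    return [a for a in S3DIS_AREAS if a != holdout], [holdout]


def sample_fixed(points, num_points=4096, rng=None):
    """Sample exactly `num_points` columns from a channels-first (C, N) block.

    Samples without replacement when the block has enough points and with
    replacement otherwise, so every block yields a fixed-size input.
    """
    rng = np.random.default_rng() if rng is None else rng
    n = points.shape[1]
    idx = rng.choice(n, size=num_points, replace=n < num_points)
    return points[:, idx]


train, test = split_areas(5)
print(train, test)  # [1, 2, 3, 4, 6] [5]
block = np.random.default_rng(0).normal(size=(9, 1500))  # dummy block
print(sample_fixed(block).shape)  # (9, 4096)
```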

Training

We provide several examples of training PVCNN with this repo:

- To train PVCNN on S3DIS holding out Area 5, you can run

python train.py configs/s3dis/pvcnn/area5.py --devices 0,1

- To train PVCNN++ on S3DIS holding out Area 5, you can run

python train.py configs/s3dis/pvcnnpp/area5.py --devices 0,1

In general, to train a model, you can run

python train.py [config-file] --devices [gpu-ids]

To evaluate a trained model, run inference with:

python train.py [config-file] --devices [gpu-ids] --evaluate
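Since every run goes through train.py with the same few flags, a small helper can assemble the command for scripted or repeated runs. This is a hypothetical convenience wrapper, not part of the repo; it uses only the flags that appear in this README (`--devices`, `--evaluate`, `--configs.train.best_checkpoint_path`).

```python
import shlex


def train_command(config, devices, evaluate=False, checkpoint=None):
    """Build the train.py argument list from the flags documented in this README."""
    cmd = ["python", "train.py", config, "--devices", ",".join(str(d) for d in devices)]
    if evaluate:
        cmd.append("--evaluate")
    if checkpoint is not None:
        # Overrides the checkpoint path used for evaluation.
        cmd += ["--configs.train.best_checkpoint_path", checkpoint]
    return cmd


print(shlex.join(train_command("configs/s3dis/pvcnn/area5.py", [0, 1], evaluate=True,
                               checkpoint="s3dis.pvcnn.area5.pth.tar")))
# python train.py configs/s3dis/pvcnn/area5.py --devices 0,1 --evaluate --configs.train.best_checkpoint_path s3dis.pvcnn.area5.pth.tar
```

To actually launch a run, pass the returned list to `subprocess.run(cmd, check=True)`; keeping it as a list avoids shell quoting issues with paths.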