PhoBERT

Pre-trained PhoBERT models are the state-of-the-art language models for Vietnamese (Pho, i.e. "Phở", is a popular food in Vietnam):

- Two versions of PhoBERT, "base" and "large", are the first public large-scale monolingual language models pre-trained for Vietnamese. The PhoBERT pre-training approach is based on RoBERTa, which optimizes the BERT pre-training procedure for more robust performance.

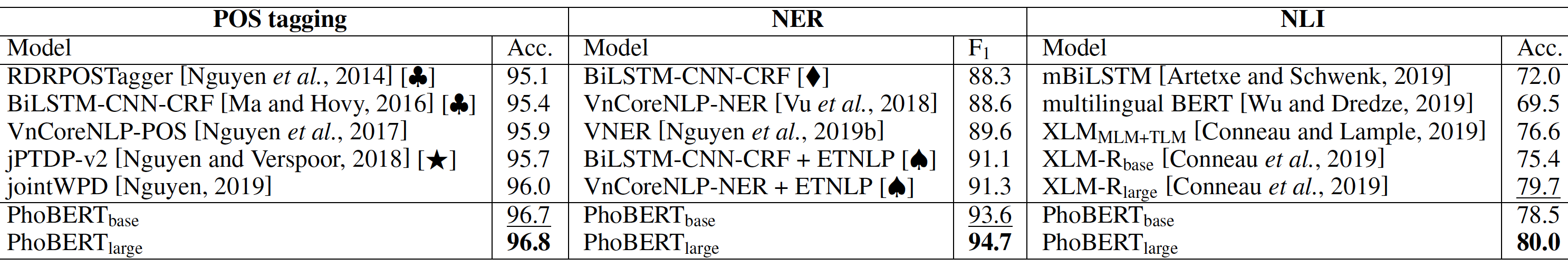

- PhoBERT outperforms previous monolingual and multilingual approaches, obtaining new state-of-the-art results on three downstream Vietnamese NLP tasks: part-of-speech tagging, named-entity recognition and natural language inference.

The general architecture and experimental results of PhoBERT can be found in the following paper:

@article{phobert,

title = {{PhoBERT: Pre-trained language models for Vietnamese}},

author = {Dat Quoc Nguyen and Anh Tuan Nguyen},

journal = {arXiv preprint},

volume = {arXiv:2003.00744},

year = {2020}

}

Please cite our paper when PhoBERT is used to help produce published results or incorporated into other software.

Experimental results

Experiments show that a straightforward fine-tuning setup (i.e. AdamW with a fixed learning rate of 1e-5 and a batch size of 32), as we use for PhoBERT, can lead to state-of-the-art results. Downstream task performance might be improved further with more careful hyper-parameter tuning.

Using VnCoreNLP's word segmenter to pre-process input raw texts

If the input texts are raw, i.e. not word-segmented, a word segmenter must be applied to produce word-segmented texts before feeding them to PhoBERT. Because PhoBERT's pre-training data was pre-processed with the RDRSegmenter from VnCoreNLP, it is recommended to use the same VnCoreNLP RDRSegmenter on raw input texts in PhoBERT-based downstream applications.

Installation

# Install the vncorenlp python wrapper

pip3 install vncorenlp

# Download VnCoreNLP-1.1.1.jar & its word segmentation component (i.e. RDRSegmenter)

mkdir -p vncorenlp/models/wordsegmenter

wget https://github.com/vncorenlp/VnCoreNLP/raw/master/VnCoreNLP-1.1.1.jar

wget https://github.com/vncorenlp/VnCoreNLP/raw/master/models/wordsegmenter/vi-vocab

wget https://github.com/vncorenlp/VnCoreNLP/raw/master/models/wordsegmenter/wordsegmenter.rdr

mv VnCoreNLP-1.1.1.jar vncorenlp/

mv vi-vocab vncorenlp/models/wordsegmenter/

mv wordsegmenter.rdr vncorenlp/models/wordsegmenter/

VnCoreNLP-1.1.1.jar (27MB) and the models folder must be placed in the same working folder, which here is vncorenlp.
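A quick way to confirm this layout before starting the segmenter is to check that the three downloaded files are where they are expected. The sketch below is not part of VnCoreNLP: the helper name `missing_vncorenlp_files` is hypothetical, and the file list simply mirrors the download commands above.

```python
from pathlib import Path

# Files expected under the working folder, relative to it
# (taken from the download commands above).
EXPECTED_FILES = (
    "VnCoreNLP-1.1.1.jar",
    "models/wordsegmenter/vi-vocab",
    "models/wordsegmenter/wordsegmenter.rdr",
)


def missing_vncorenlp_files(root):
    """Return the expected files that are absent under `root` (hypothetical helper)."""
    root = Path(root)
    return [name for name in EXPECTED_FILES if not (root / name).is_file()]


if __name__ == "__main__":
    missing = missing_vncorenlp_files("vncorenlp")
    if missing:
        print("Missing files:", ", ".join(missing))
    else:
        print("VnCoreNLP layout looks complete.")
```

An empty list means the jar and the models folder sit side by side as required.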

Example usage

# See more details at: https://github.com/vncorenlp/VnCoreNLP

# Load rdrsegmenter from VnCoreNLP

from vncorenlp import VnCoreNLP

rdrsegmenter = VnCoreNLP("/Absolute-path-to/vncorenlp/VnCoreNLP-1.1.1.jar", annotators="wseg", max_heap_size='-Xmx500m')

# Input

text = "Ông Nguyễn Khắc Chúc đang làm việc tại Đại học Quốc gia Hà Nội. Bà Lan, vợ ông Chúc, cũng làm việc tại đây."

# To perform word segmentation only

word_segmented_text = rdrsegmenter.tokenize(text)

print(word_segmented_text)

[['Ông', 'Nguyễn_Khắc_Chúc', 'đang', 'làm_việc', 'tại', 'Đại_học', 'Quốc_gia', 'Hà_Nội', '.'], ['Bà', 'Lan', ',', 'vợ', 'ông', 'Chúc', ',', 'cũng', 'làm_việc', 'tại', 'đây', '.']]
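`tokenize` returns one list of tokens per sentence, whereas the PhoBERT examples in this README pass each sentence as a single string with tokens separated by spaces. A minimal sketch of that conversion follows; it uses the output above as literal data so that it runs without VnCoreNLP, and the helper name `to_phobert_lines` is hypothetical.

```python
def to_phobert_lines(segmented_sentences):
    """Join each token list into one space-separated, word-segmented string."""
    return [" ".join(tokens) for tokens in segmented_sentences]


# Output of rdrsegmenter.tokenize(text) from the example above.
word_segmented_text = [
    ['Ông', 'Nguyễn_Khắc_Chúc', 'đang', 'làm_việc', 'tại', 'Đại_học', 'Quốc_gia', 'Hà_Nội', '.'],
    ['Bà', 'Lan', ',', 'vợ', 'ông', 'Chúc', ',', 'cũng', 'làm_việc', 'tại', 'đây', '.'],
]

lines = to_phobert_lines(word_segmented_text)
for line in lines:
    print(line)
# Ông Nguyễn_Khắc_Chúc đang làm_việc tại Đại_học Quốc_gia Hà_Nội .
# Bà Lan , vợ ông Chúc , cũng làm_việc tại đây .
```

Each resulting string keeps the underscores that mark multi-syllable words, which is the form PhoBERT was pre-trained on.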

Using PhoBERT in fairseq

Installation

Pre-trained models

| Model | #params | size | Download |
|---|---|---|---|
| PhoBERT-base | 135M | 1.2GB | PhoBERT_base_fairseq.tar.gz |
| PhoBERT-large | 370M | 3.2GB | PhoBERT_large_fairseq.tar.gz |

PhoBERT-base:

wget https://public.vinai.io/PhoBERT_base_fairseq.tar.gz

tar -xzvf PhoBERT_base_fairseq.tar.gz

PhoBERT-large:

wget https://public.vinai.io/PhoBERT_large_fairseq.tar.gz

tar -xzvf PhoBERT_large_fairseq.tar.gz

Example usage

Assume that the input texts are already word-segmented!

# Load PhoBERT-base in fairseq

import torch  # needed for the torch.Size and torch.all checks below

from fairseq.models.roberta import RobertaModel

phobert = RobertaModel.from_pretrained('/Absolute-path-to/PhoBERT_base_fairseq', checkpoint_file='model.pt')

phobert.eval() # disable dropout (or leave in train mode to finetune)

# Incorporate the BPE encoder into PhoBERT-base

from fairseq.data.encoders.fastbpe import fastBPE

from fairseq import options

parser = options.get_preprocessing_parser()

parser.add_argument('--bpe-codes', type=str, help='path to fastBPE BPE', default="/Absolute-path-to/PhoBERT_base_fairseq/bpe.codes")

args = parser.parse_args()

phobert.bpe = fastBPE(args) #Incorporate the BPE encoder into PhoBERT

# Extract the last layer's features

line = "Tôi là sinh_viên trường đại_học Công_nghệ ." # INPUT TEXT IS WORD-SEGMENTED!

subwords = phobert.encode(line)

last_layer_features = phobert.extract_features(subwords)

assert last_layer_features.size() == torch.Size([1, 9, 768])

# Extract all layer's features (layer 0 is the embedding layer)

all_layers = phobert.extract_features(subwords, return_all_hiddens=True)

assert len(all_layers) == 13

assert torch.all(all_layers[-1] == last_layer_features)

# Extract features aligned to words

words = phobert.extract_features_aligned_to_words(line)

for word in words:

    print('{:10}{} (...)'.format(str(word), word.vector[:5]))

# Filling masks

masked_line = 'Tôi là <mask> trường đại_học Công_nghệ .'

topk_filled_outputs = phobert.fill_mask(masked_line, topk=5)

print(topk_filled_outputs)

Using VnCoreNLP's RDRSegmenter with PhoBERT in fairseq

text = "Tôi là sinh viên trường đại học Công nghệ."

sentences = rdrsegmenter.tokenize(text)

# Extract the last layer's features

for sentence in sentences:

    # tokenize() returns a list of tokens per sentence; encode() expects a single string

    subwords = phobert.encode(" ".join(sentence))

    last_layer_features = phobert.extract_features(subwords)

Using PhoBERT in HuggingFace transformers

Installation

- Prerequisites: installation of fairseq, and of VnCoreNLP-RDRSegmenter if processing raw texts

- transformers: `pip3 install transformers`

Pre-trained models

| Model | #params | size | Download |
|---|---|---|---|
| PhoBERT-base | 135M | 307MB | PhoBERT_base_transformers.tar.gz |
| PhoBERT-large | 370M | 834MB | PhoBERT_large_transformers.tar.gz |

PhoBERT-base:

wget https://public.vinai.io/PhoBERT_base_transformers.tar.gz

tar -xzvf PhoBERT_base_transformers.tar.gz

PhoBERT-large:

wget https://public.vinai.io/PhoBERT_large_transformers.tar.gz

tar -xzvf PhoBERT_large_transformers.tar.gz

Example usage

import torch

import argparse

from transformers import RobertaConfig

from transformers import RobertaModel

from fairseq.data.encoders.fastbpe import fastBPE

from fairseq.data import Dictionary

# Load model

config = RobertaConfig.from_pretrained(

    "/Absolute-path-to/PhoBERT_base_transformers/config.json"

)

phobert = RobertaModel.from_pretrained(

    "/Absolute-path-to/PhoBERT_base_transformers/model.bin",

    config=config

)

# Load BPE encoder

parser = argparse.ArgumentParser()

parser.add_argument('--bpe-codes',

    default="/Absolute-path-to/PhoBERT_base_transformers/bpe.codes",

    required=False,

    type=str,

    help='path to fastBPE BPE'

)

args = parser.parse_args()

bpe = fastBPE(args)

# INPUT TEXT IS WORD-SEGMENTED!

line = "Tôi là sinh_viên trường đại_học Công_nghệ ."

# Load the dictionary

vocab = Dictionary()

vocab.add_from_file("/Absolute-path-to/PhoBERT_base_transformers/dict.txt")

# Encode the line using fast BPE & Add prefix <s> and suffix </s>

subwords = '<s> ' + bpe.encode(line) + ' </s>'

# Map subword tokens to corresponding indices in the dictionary

input_ids = vocab.encode_line(subwords, append_eos=False, add_if_not_exist=False).long().tolist()

# Convert into torch tensor

all_input_ids = torch.tensor([input_ids], dtype=torch.long)

# Extract features

features = phobert(all_input_ids)
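The encoding steps above (wrap the BPE output in `<s>` and `</s>`, then look each subword up in the dictionary) can be illustrated without fairseq. The sketch below uses a toy vocabulary rather than PhoBERT's `dict.txt`: the entries and their indices are made up for illustration, the helper names are hypothetical, and only the four leading special symbols follow the default ordering of fairseq's `Dictionary`.

```python
# Special symbols in the order fairseq's Dictionary adds them by default.
SPECIALS = ["<s>", "<pad>", "</s>", "<unk>"]


def build_toy_vocab(tokens):
    """Map the special symbols, then the given tokens, to consecutive indices."""
    vocab = {}
    for token in SPECIALS + list(tokens):
        vocab.setdefault(token, len(vocab))
    return vocab


def encode_subwords(bpe_line, vocab):
    """Wrap a BPE-encoded line in <s> ... </s> and map each subword to an index.

    Subwords missing from the vocabulary map to <unk>, which mirrors
    add_if_not_exist=False in the example above.
    """
    subwords = ["<s>"] + bpe_line.split() + ["</s>"]
    return [vocab.get(subword, vocab["<unk>"]) for subword in subwords]


# Toy vocabulary: these entries and indices are illustrative only.
toy_vocab = build_toy_vocab(["Tôi", "là", "sinh_viên", "."])

# "trường" is deliberately absent from the toy vocabulary.
input_ids = encode_subwords("Tôi là sinh_viên trường .", toy_vocab)
print(input_ids)  # [0, 4, 5, 6, 3, 7, 2]
```

The list starts with the index of `<s>` and ends with the index of `</s>`, and the out-of-vocabulary word is replaced by the `<unk>` index rather than extending the vocabulary.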