DA_detection

Progressive Domain Adaptation for Object Detection

Implementation of our paper Progressive Domain Adaptation for Object Detection, based on pytorch-faster-rcnn and PyTorch-CycleGAN.

Paper

Progressive Domain Adaptation for Object Detection

Han-Kai Hsu, Chun-Han Yao, Yi-Hsuan Tsai, Wei-Chih Hung, Hung-Yu Tseng, Maneesh Singh and Ming-Hsuan Yang

IEEE Winter Conference on Applications of Computer Vision (WACV), 2020.

Please cite our paper if you find it useful for your research.

@inproceedings{hsu2020progressivedet,

author = {Han-Kai Hsu and Chun-Han Yao and Yi-Hsuan Tsai and Wei-Chih Hung and Hung-Yu Tseng and Maneesh Singh and Ming-Hsuan Yang},

booktitle = {IEEE Winter Conference on Applications of Computer Vision (WACV)},

title = {Progressive Domain Adaptation for Object Detection},

year = {2020}

}

Dependencies

This code is tested with PyTorch 0.4.1 and CUDA 9.0.

# PyTorch via pip: download and install the PyTorch 0.4.1 wheel for CUDA 9.0

# from https://download.pytorch.org/whl/cu90/torch_stable.html

# PyTorch via conda:

conda install pytorch=0.4.1 cuda90 -c pytorch

# Other dependencies:

pip install -r requirements.txt

sh ./lib/make.sh

Data Preparation

KITTI

- Download the data from here.

- Extract the files under

data/KITTI/

Cityscapes

- Download the data from here.

- Extract the files under

data/CityScapes/

Foggy Cityscapes

- Follow the instructions here to request the dataset download.

- Place the data under

data/CityScapes/leftImg8bit/ as foggytrain and foggyval.

BDD100k

- Download the data from here.

- Extract the files under

data/bdd100k/
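Before moving on, it can help to confirm that the datasets ended up where the steps above say they should. The following is a minimal sketch, not part of the repository: `EXPECTED_DIRS` and `missing_data_dirs` are hypothetical names, and the `foggytrain` / `foggyval` folder names reflect how this sketch reads the Foggy Cityscapes step.

```python
from pathlib import Path

# Directories named in the Data Preparation steps, relative to the repo root.
# Assumption: Foggy Cityscapes lives in foggytrain/ and foggyval/ under leftImg8bit/.
EXPECTED_DIRS = [
    "data/KITTI",
    "data/CityScapes",
    "data/CityScapes/leftImg8bit/foggytrain",
    "data/CityScapes/leftImg8bit/foggyval",
    "data/bdd100k",
]


def missing_data_dirs(repo_root="."):
    """Return the expected dataset directories that do not exist under repo_root."""
    root = Path(repo_root)
    return [d for d in EXPECTED_DIRS if not (root / d).is_dir()]
```

Calling `missing_data_dirs()` from the repository root returns an empty list when every directory is present; only the directories for the adaptation mode you plan to run actually need to exist.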

Generate synthetic data with CycleGAN

Generate the synthetic data with the PyTorch-CycleGAN implementation.

git clone https://github.com/aitorzip/PyTorch-CycleGAN

Dataset loader code

Import the dataset loader code in ./cycleGAN_dataset_loader/ to train/test the CycleGAN on the corresponding image translation task.

Generate from pre-trained weight:

Follow the testing instructions on PyTorch-CycleGAN and download the weights below to generate synthetic images. (Remember to set the corresponding output image size.)

- KITTI with Cityscapes style (KITTI->Cityscapes): size=(376,1244)

Place the generated data under data/KITTI/training/synthCity_image_2/ with the same naming and folder structure as the original KITTI data.

- Cityscapes with FoggyCityscapes style (Cityscapes->FoggyCityscapes): size=(1024,2048)

Place the generated data under data/CityScapes/leftImg8bit/synthFoggytrain with the same naming and folder structure as the original Cityscapes data.

- Cityscapes with BDD style (Cityscapes->BDD100k): size=(1024,1280)

Place the generated data under data/CityScapes/leftImg8bit/synthBDDdaytrain and data/CityScapes/leftImg8bit/synthBDDdayval with the same naming and folder structure as the original Cityscapes data.
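Since the synthetic images must mirror the naming and folder structure of the originals, a quick comparison of the two directory trees can catch mismatches early. This is a minimal sketch under that assumption only; `relative_files` and `compare_structure` are hypothetical helpers, not functions from this repository or from PyTorch-CycleGAN.

```python
from pathlib import Path


def relative_files(folder):
    """Collect the paths of all files under folder, relative to folder."""
    base = Path(folder)
    return {p.relative_to(base).as_posix() for p in base.rglob("*") if p.is_file()}


def compare_structure(original_dir, synthetic_dir):
    """Return (missing, extra).

    missing: original files with no synthetic counterpart of the same relative path.
    extra:   synthetic files that do not correspond to any original file.
    """
    original = relative_files(original_dir)
    synthetic = relative_files(synthetic_dir)
    return sorted(original - synthetic), sorted(synthetic - original)
```

For the KITTI->Cityscapes case this might be called as `compare_structure("data/KITTI/training/image_2", "data/KITTI/training/synthCity_image_2")`, assuming the original KITTI images sit in `image_2`; both returned lists should be empty when the structures match.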

Train your own CycleGAN:

Please follow the training instructions on PyTorch-CycleGAN.

Test the adaptation model

Download the following adapted weights to ./trained_weights/adapt_weight/

./experiments/scripts/test_adapt_faster_rcnn_stage1.sh [GPU_ID] [Adapt_mode] vgg16

# Specify the GPU_ID you want to use

# Adapt_mode selection:

# 'K2C': KITTI->Cityscapes

# 'C2F': Cityscapes->Foggy Cityscapes

# 'C2BDD': Cityscapes->BDD100k_day

# Example:

./experiments/scripts/test_adapt_faster_rcnn_stage2.sh 0 K2C vgg16

Train your own model

Stage one

./experiments/scripts/train_adapt_faster_rcnn_stage1.sh [GPU_ID] [Adapt_mode] vgg16

# Specify the GPU_ID you want to use

# Adapt_mode selection:

# 'K2C': KITTI->Cityscapes

# 'C2F': Cityscapes->Foggy Cityscapes

# 'C2BDD': Cityscapes->BDD100k_day

# Example:

./experiments/scripts/train_adapt_faster_rcnn_stage1.sh 0 K2C vgg16

Download the following pretrained detector weights to ./trained_weights/pretrained_detector/

Stage two

./experiments/scripts/train_adapt_faster_rcnn_stage2.sh 0 K2C vgg16

Discriminator score files:

- netD_synthC_score.json

- netD_CsynthFoggyC_score.json

- netD_CsynthBDDday_score.json

Extract the pretrained CycleGAN discriminator scores to ./trained_weights/

or

Save your own dictionary of CycleGAN discriminator scores, with the image name as key and the score as value.

Example: {'jena_000074_000019_leftImg8bit.png': 0.64}
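If you compute the discriminator scores yourself, the dictionary described above can be written out as JSON along these lines. This is only a sketch: `save_scores` and `load_scores` are hypothetical helpers, the scores are assumed to have been computed elsewhere, and keying by the bare file name follows the example above rather than anything verified against the training code.

```python
import json
import os


def save_scores(scored_images, path):
    """Write {image_name: score} to a JSON file.

    scored_images: iterable of (image_path, score) pairs; only the file name
    of each path is kept as the key, as in the example entry above.
    """
    scores = {os.path.basename(p): float(s) for p, s in scored_images}
    with open(path, "w") as f:
        json.dump(scores, f, indent=2)
    return scores


def load_scores(path):
    """Read a score dictionary back from a JSON file."""
    with open(path) as f:
        return json.load(f)
```

For instance, `save_scores([("some/dir/jena_000074_000019_leftImg8bit.png", 0.64)], "my_netD_score.json")` produces a file containing the single entry shown in the example; the output file name here is a placeholder, so use whichever name the stage-two script expects for your adaptation mode.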

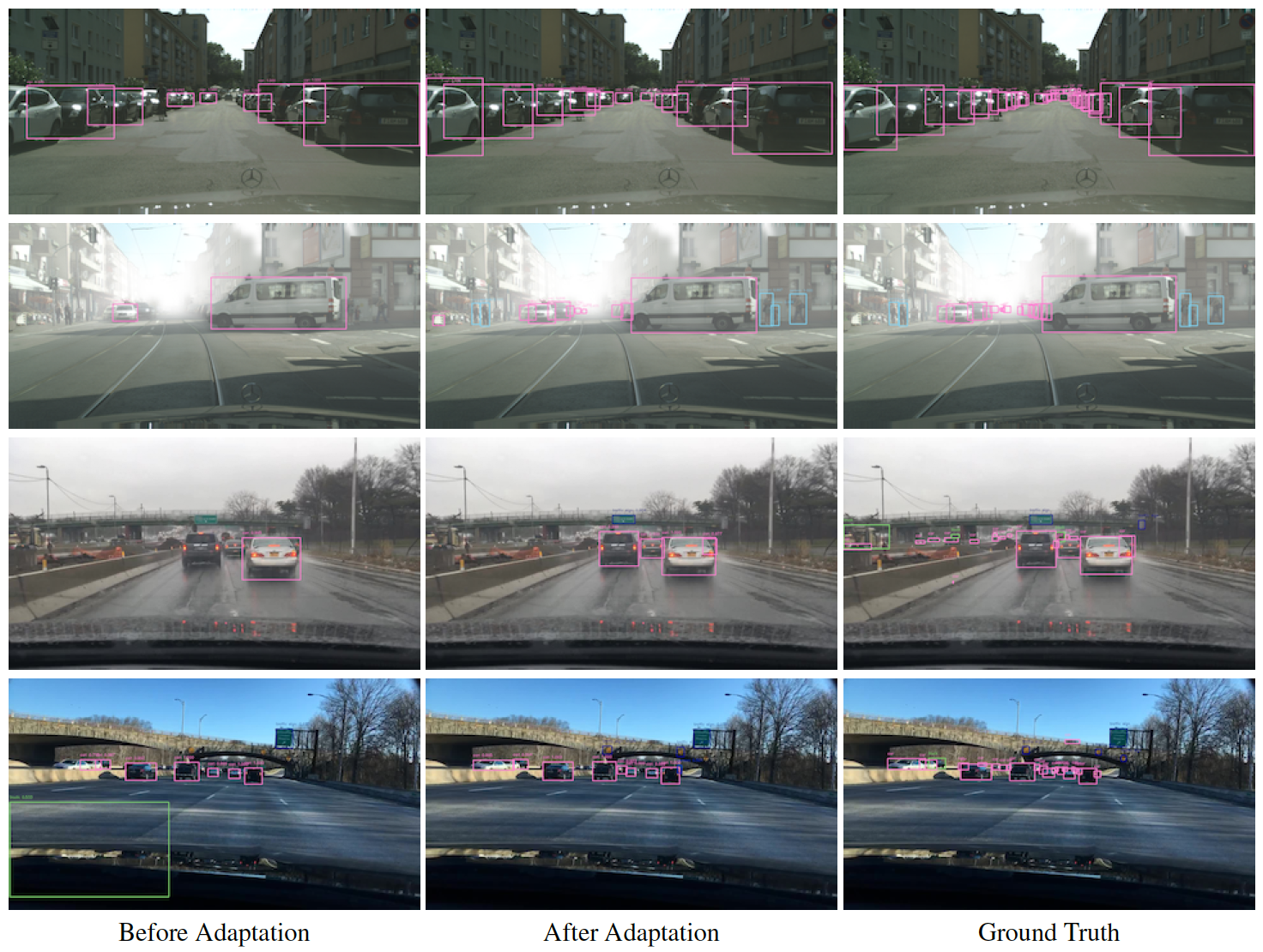

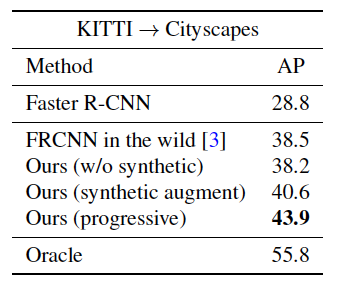

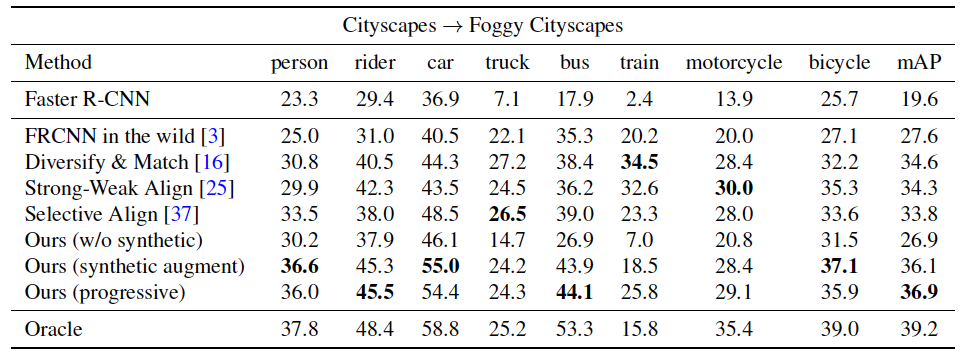

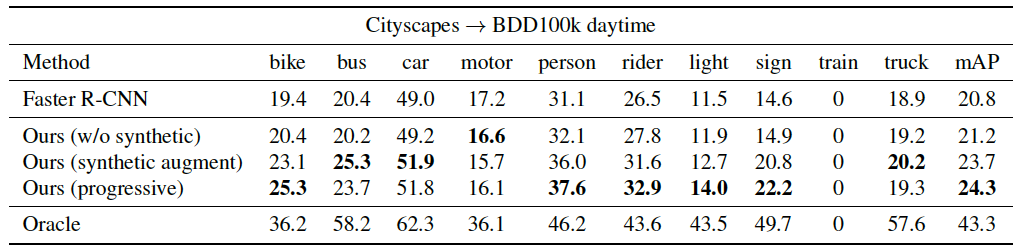

Detection results

Adaptation results

Acknowledgement

Thanks to the awesome implementations from pytorch-faster-rcnn and PyTorch-CycleGAN.

GitHub

https://github.com/kevinhkhsu/DA_detection#progressive-domain-adaptation-for-object-detection