Hierarchically-nested Adversarial Network (PyTorch implementation)

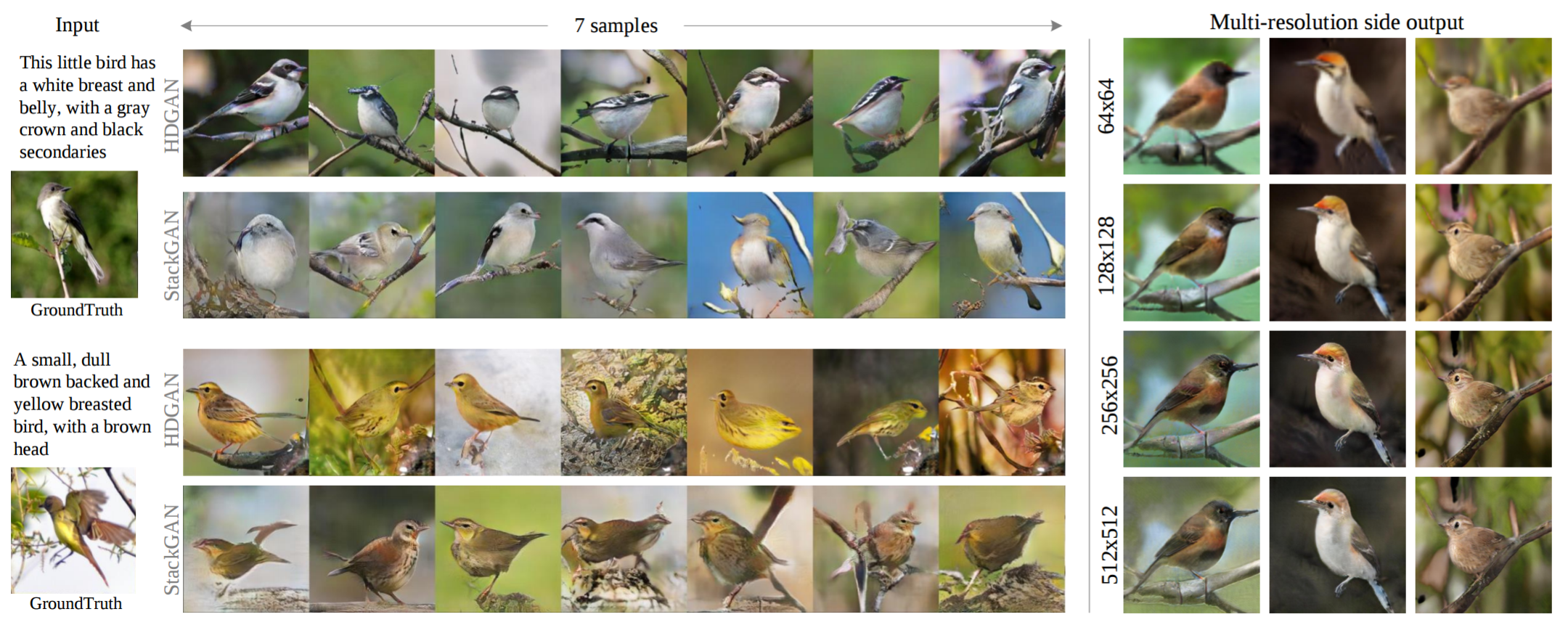

We call our method HDGAN, referring to High-Definition results and the idea of Hierarchically-nested Discriminators.

Dependencies

- Python 3

- PyTorch 0.3.1

- Anaconda 3.6

- TensorFlow 1.4.1 (for evaluation only)

Data

Download the preprocessed data into the Data/ folder:

- Download birds to Data/birds

- Download flowers to Data/flowers

- Download coco to Data/coco. Also download the COCO images to Data/coco/coco_official
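Before training, it can help to confirm that the downloads landed in the folders listed above. The following is a minimal sketch, not part of the repository: the helper name `missing_data_dirs` and the `EXPECTED_DIRS` list are hypothetical, and the check only looks at the folder names given in this section, not at the files inside them.

```python
from pathlib import Path
from typing import List

# Folder names taken from the list above, relative to the data root.
EXPECTED_DIRS = ["birds", "flowers", "coco", "coco/coco_official"]


def missing_data_dirs(data_root: str) -> List[str]:
    """Return the expected sub-folders that do not exist under data_root."""
    root = Path(data_root)
    return [d for d in EXPECTED_DIRS if not (root / d).is_dir()]


if __name__ == "__main__":
    # "Data" is assumed to be relative to the repository root.
    missing = missing_data_dirs("Data")
    if missing:
        print("Missing data folders:", ", ".join(missing))
    else:
        print("All expected data folders are present.")
```

If you only plan to train on one dataset, the other folders can be ignored or removed from the hypothetical `EXPECTED_DIRS` list.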

Training

- For birds, go to train/train_gan and run:

device=0 sh train_birds.sh

- For flowers, go to train/train_gan and run:

device=0 sh train_flower.sh

- For coco, go to train/train_gan and run:

device=0,1 sh train_coco.sh

To use multiple GPUs, set device to a comma-separated list of GPU ids, for example device='0,1'.
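How the training scripts consume the device variable is not shown in this README, so the following is only an illustrative sketch of turning a value such as '0,1' into a list of integer GPU ids. The function name `parse_device_ids` is hypothetical and is not taken from the repository.

```python
import os
from typing import List


def parse_device_ids(spec: str) -> List[int]:
    """Parse a comma-separated GPU id string such as '0,1' into [0, 1]."""
    ids = [int(token) for token in spec.split(",") if token.strip()]
    if not ids:
        raise ValueError("no GPU ids given")
    if len(set(ids)) != len(ids):
        raise ValueError("duplicate GPU ids in %r" % spec)
    return ids


if __name__ == "__main__":
    # Reads the same variable name used on the command line above;
    # falling back to GPU 0 is an assumption made for this sketch.
    device_ids = parse_device_ids(os.environ.get("device", "0"))
    print("Using GPUs:", device_ids)
    # A list like this could then be handed to a multi-GPU wrapper,
    # e.g. torch.nn.DataParallel(model, device_ids=device_ids).
```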

Monitor your training in two ways:

- Launch Visdom:

python -m visdom.server -port 43426

Use the same port as _port defined in plot_utils.py, then open http://localhost:43426 in a browser.

- Check the fixed sample results saved per epoch in the checkpoint folder.

Testing

- Go to test/test_gan and run the script for your dataset:

sh test_birds.sh (for birds)

sh test_flowers.sh (for flowers)

sh test_coco.sh (for coco)

Evaluation

We provide several evaluation tools. Evaluation requires the sampled results produced in Testing, which are saved in ./Results.

- Go to /Evaluation

Inception score

- Download the inception models to the Evaluation/inception_score/inception_finetuned_models folder; they are needed to compute inception scores.

- Compute the inception score:

sh compute_inception_score.sh
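For orientation, the Inception score is commonly defined as exp(E_x[KL(p(y|x) || p(y))]), where p(y|x) is the classifier's class distribution for a generated image and p(y) is the average of those distributions over all images. The sketch below computes that quantity from precomputed probability vectors in plain Python. It is not the code run by compute_inception_score.sh; the function name `inception_score` and the input format are assumptions made for illustration.

```python
import math
from typing import Sequence


def inception_score(probs: Sequence[Sequence[float]]) -> float:
    """Compute exp(mean KL(p(y|x) || p(y))) from per-image class probabilities.

    probs[i][k] is the predicted probability of class k for image i;
    each row is assumed to sum to 1.
    """
    n = len(probs)
    if n == 0:
        raise ValueError("need at least one probability vector")
    num_classes = len(probs[0])
    # Marginal class distribution p(y), averaged over all images.
    marginal = [sum(p[k] for p in probs) / n for k in range(num_classes)]
    total_kl = 0.0
    for p in probs:
        for k in range(num_classes):
            # Terms with p[k] == 0 contribute nothing to the KL divergence.
            if p[k] > 0.0:
                total_kl += p[k] * (math.log(p[k]) - math.log(marginal[k]))
    return math.exp(total_kl / n)
```

Under this definition, identical predictions for every image give a score of 1, while confident predictions spread evenly over all classes give a score equal to the number of classes. Published scores are often averaged over several splits of the samples, which this sketch does not do.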

MS-SSIM

- Compute the MS-SSIM score:

sh compute_ms_ssim.sh

VS-Similarity

- Download models to the Evaluation/neudist/neudist_[dataset] folder.

- Evaluate the VS-Similarity score:

sh compute_neudist_score.sh

Pretrained Models

We provide pretrained models for birds, flowers, and coco.

- Download the pretrained models. Save them to the Models/ folder.

- The download contains HDGAN models for birds and flowers, and visual similarity models for birds and flowers.

Acknowledgements

- StackGAN TensorFlow implementation

- MS-SSIM Python implementation

- Inception score TensorFlow implementation

- Process COCO data Torch implementation

Citation

If you find HDGAN useful in your research, please cite:

@inproceedings{zhang2018hdgan,
  Author = {Zizhao Zhang and Yuanpu Xie and Lin Yang},
  Title = {Photographic Text-to-Image Synthesis with a Hierarchically-nested Adversarial Network},
  Year = {2018},
  booktitle = {CVPR},
}