RankSortLoss

Official PyTorch Implementation of Rank & Sort Loss [ICCV2021]

Rank & Sort Loss for Object Detection and Instance Segmentation

The official implementation of Rank & Sort Loss. Our implementation is based on mmdetection.

Rank & Sort Loss for Object Detection and Instance Segmentation,

Kemal Oksuz, Baris Can Cam, Emre Akbas, Sinan Kalkan, ICCV 2021 (Oral Presentation). (arXiv pre-print)

Summary

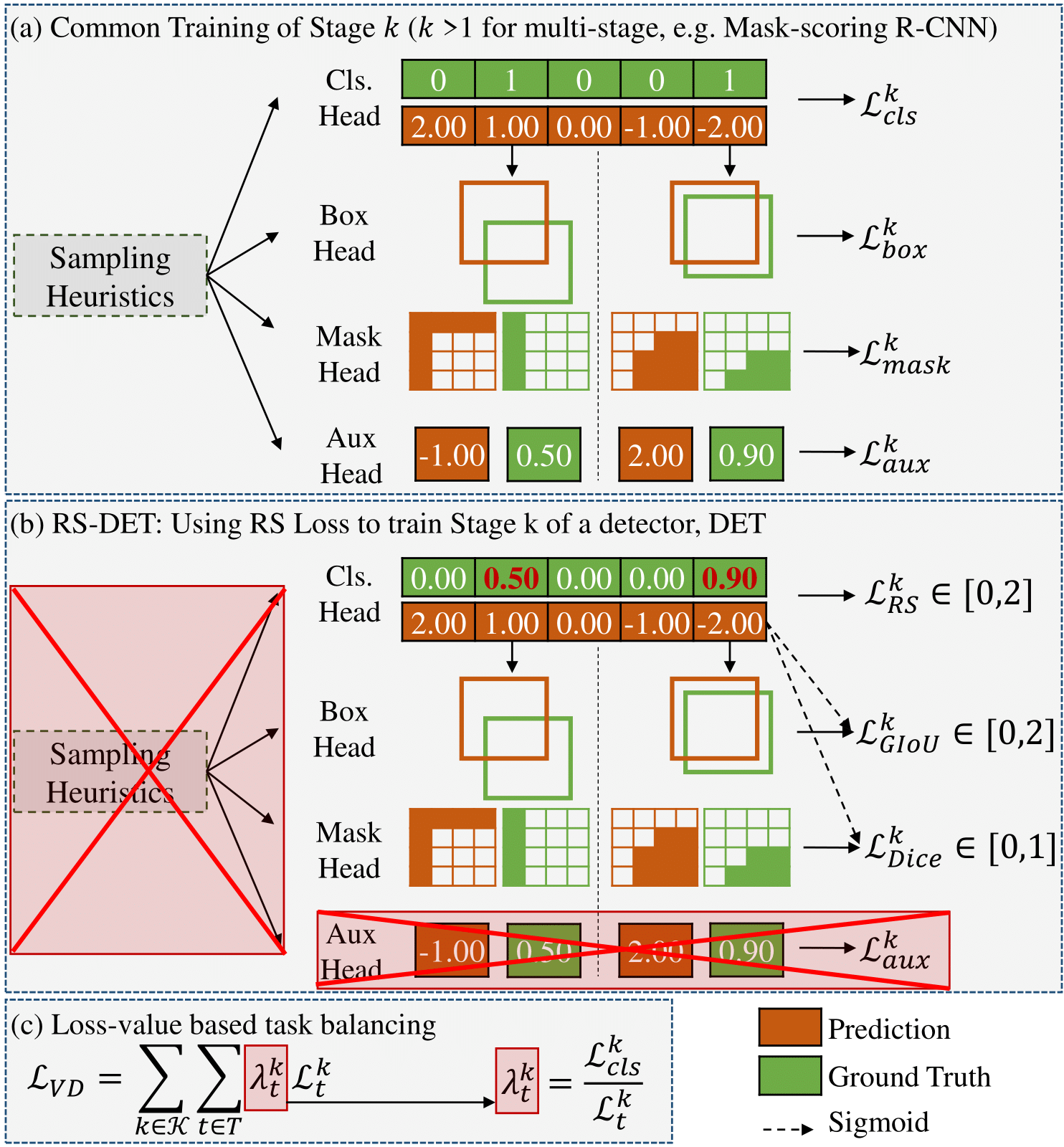

What is Rank & Sort (RS) Loss? Rank & Sort (RS) Loss supervises object detectors and instance segmentation methods to (i) rank the scores of the positive anchors above those of negative anchors, and at the same time (ii) sort the scores of the positive anchors with respect to their localisation qualities.
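To make the two objectives concrete, the sketch below counts how often a set of classification scores violates each of them. It is only an illustration of the ranking and sorting criteria described above, not the RS Loss formulation from the paper nor the code in this repository. The function name `rank_sort_violations` and the toy inputs are hypothetical, and IoU is assumed as the localisation quality.

```python
import numpy as np

def rank_sort_violations(scores, labels, ious):
    """Count pairwise violations of the ranking and sorting objectives.

    scores: classification scores, one per anchor
    labels: 1 for positive anchors, 0 for negative anchors
    ious:   localisation quality per anchor (only used for positives)
    """
    scores = np.asarray(scores, dtype=float)
    labels = np.asarray(labels, dtype=bool)
    ious = np.asarray(ious, dtype=float)

    pos_scores, neg_scores = scores[labels], scores[~labels]
    pos_ious = ious[labels]

    # (i) Ranking: every positive should score above every negative.
    rank_viol = int((neg_scores[None, :] >= pos_scores[:, None]).sum())

    # (ii) Sorting: among positives, a better-localised anchor
    # should not receive a lower score than a worse-localised one.
    better_iou = pos_ious[:, None] > pos_ious[None, :]
    lower_score = pos_scores[:, None] < pos_scores[None, :]
    sort_viol = int((better_iou & lower_score).sum())

    return rank_viol, sort_viol

# Two positives (IoU 0.9 and 0.6) followed by two negatives.
ideal = rank_sort_violations([0.9, 0.8, 0.3, 0.1], [1, 1, 0, 0], [0.9, 0.6, 0.0, 0.0])
flawed = rank_sort_violations([0.5, 0.8, 0.6, 0.1], [1, 1, 0, 0], [0.9, 0.6, 0.0, 0.0])
```

A detector that satisfies both objectives yields zero violations of either kind; RS Loss itself optimises a differentiable ranking-based objective rather than these raw counts.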

Benefits of RS Loss on Simplification of Training. With RS Loss, we significantly simplify training: (i) Thanks to our sorting objective, the positives are prioritized by the classifier without an additional auxiliary head (e.g. for centerness, IoU, mask-IoU), (ii) due to its ranking-based nature, RS Loss is robust to class imbalance, and thus, no sampling heuristic is required, and (iii) we address the multi-task nature of visual detectors using tuning-free task-balancing coefficients.

Benefits of RS Loss on Improving Performance. Using RS Loss, we train seven diverse visual detectors only by tuning the learning rate, and show that it consistently outperforms the baselines: e.g. RS Loss improves (i) Faster R-CNN by ~3 box AP and aLRP Loss (a ranking-based baseline) by ~2 box AP on the COCO dataset, and (ii) Mask R-CNN with repeat factor sampling by 3.5 mask AP (~7 AP for rare classes) on the LVIS dataset.

How to Cite

Please cite the paper if you benefit from our paper or the repository:

    @inproceedings{RSLoss,
      title = {Rank \& Sort Loss for Object Detection and Instance Segmentation},
      author = {Kemal Oksuz and Baris Can Cam and Emre Akbas and Sinan Kalkan},
      booktitle = {International Conference on Computer Vision (ICCV)},
      year = {2021}
    }

Specification of Dependencies and Preparation

- Please see get_started.md for requirements and installation of mmdetection.

- Please refer to introduction.md for dataset preparation and basic usage of mmdetection.

Trained Models

Here, we report minival results in terms of AP and oLRP.

Multi-stage Object Detection

RS-R-CNN

| Backbone | Epoch | Carafe | MS train | box AP | box oLRP | Log | Config | Model |
|---|---|---|---|---|---|---|---|---|
| ResNet-50 | 12 | | | 39.6 | 67.9 | log | config | model |
| ResNet-50 | 12 | + | | 40.8 | 66.9 | log | config | model |
| ResNet-101-DCN | 36 | | [480,960] | 47.6 | 61.1 | log | config | model |
| ResNet-101-DCN | 36 | + | [480,960] | 47.7 | 60.9 | log | config | model |

RS-Cascade R-CNN

| Backbone | Epoch | box AP | box oLRP | Log | Config | Model |
|---|---|---|---|---|---|---|
| ResNet-50 | 12 | 41.3 | 66.6 | Coming soon | Coming soon | Coming soon |

One-stage Object Detection

| Method | Backbone | Epoch | box AP | box oLRP | Log | Config | Model |
|---|---|---|---|---|---|---|---|
| RS-ATSS | ResNet-50 | 12 | 39.9 | 67.9 | log | config | model |
| RS-PAA | ResNet-50 | 12 | 41.0 | 67.3 | log | config | model |

Multi-stage Instance Segmentation

RS-Mask R-CNN on COCO Dataset

| Backbone | Epoch | Carafe | MS train | mask AP | box AP | mask oLRP | box oLRP | Log | Config | Model |
|---|---|---|---|---|---|---|---|---|---|---|
| ResNet-50 | 12 | | | 36.4 | 40.0 | 70.1 | 67.5 | log | config | model |
| ResNet-50 | 12 | + | | 37.3 | 41.1 | 69.4 | 66.6 | log | config | model |
| ResNet-101 | 36 | | [640,800] | 40.3 | 44.7 | 66.9 | 63.7 | log | config | model |
| ResNet-101 | 36 | + | [480,960] | 41.5 | 46.2 | 65.9 | 62.6 | log | config | model |
| ResNet-101-DCN | 36 | + | [480,960] | 43.6 | 48.8 | 64.0 | 60.2 | log | config | model |
| ResNeXt-101-DCN | 36 | + | [480,960] | 44.4 | 49.9 | 63.1 | 59.1 | Coming soon | config | model |

RS-Mask R-CNN on LVIS Dataset

| Backbone | Epoch | MS train | mask AP | box AP | mask oLRP | box oLRP | Log | Config | Model |
|---|---|---|---|---|---|---|---|---|---|
| ResNet-50 | 12 | [640,800] | 25.2 | 25.9 | Coming soon | Coming soon | Coming soon | Coming soon | Coming soon |

One-stage Instance Segmentation

RS-YOLACT

| Backbone | Epoch | mask AP | box AP | mask oLRP | box oLRP | Log | Config | Model |
|---|---|---|---|---|---|---|---|---|
| ResNet-50 | 55 | 29.9 | 33.8 | 74.7 | 71.8 | log | config | model |

RS-SOLOv2

| Backbone | Epoch | mask AP | mask oLRP | Log | Config | Model |
|---|---|---|---|---|---|---|
| ResNet-34 | 36 | 32.6 | 72.7 | Coming soon | Coming soon | Coming soon |
| ResNet-101 | 36 | 39.7 | 66.9 | Coming soon | Coming soon | Coming soon |

Running the Code

Training Code

The configuration files of all models listed above can be found in the configs/ranksort_loss folder. You can follow get_started.md for training code. As an example, to train Faster R-CNN with our RS Loss on 4 GPUs as we did, use the following command:

    ./tools/dist_train.sh configs/ranksort_loss/ranksort_faster_rcnn_r50_fpn_1x_coco.py 4

Test Code

The configuration files of all models listed above can be found in the configs/ranksort_loss folder. You can follow get_started.md for test code. As an example, first download a trained model using the links provided in the tables above, or train a model yourself, then run the following command to test an object detection model on multiple GPUs:

    ./tools/dist_test.sh configs/ranksort_loss/ranksort_faster_rcnn_r50_fpn_1x_coco.py ${CHECKPOINT_FILE} 4 --eval bbox

and use the following command to test an instance segmentation model on multiple GPUs:

    ./tools/dist_test.sh configs/ranksort_loss/ranksort_mask_rcnn_r50_fpn_1x_coco.py ${CHECKPOINT_FILE} 4 --eval bbox segm

You can also test a model on a single GPU with the following example command:

    python tools/test.py configs/ranksort_loss/ranksort_faster_rcnn_r50_fpn_1x_coco.py ${CHECKPOINT_FILE} --eval bbox
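If you launch evaluations from a script, the commands above can be assembled programmatically. The following is a minimal sketch, assuming it is run from the repository root; the helper name `build_test_command` is hypothetical and not part of this repository or mmdetection. It only uses the entry points and the `--eval` metrics shown above, and it builds the argument list without executing anything.

```python
def build_test_command(config, checkpoint, gpus=1, metrics=("bbox",)):
    """Return the argument list for evaluating a checkpoint.

    Uses tools/test.py for a single GPU and tools/dist_test.sh
    (which takes the GPU count as its third argument) otherwise.
    """
    if gpus < 1:
        raise ValueError("gpus must be at least 1")
    if not metrics:
        raise ValueError("at least one evaluation metric is required")

    if gpus == 1:
        # Single-GPU testing does not take a GPU count.
        cmd = ["python", "tools/test.py", config, checkpoint]
    else:
        cmd = ["./tools/dist_test.sh", config, checkpoint, str(gpus)]
    return cmd + ["--eval", *metrics]

# Example: instance segmentation model on 4 GPUs (checkpoint path is a placeholder).
cmd = build_test_command(
    "configs/ranksort_loss/ranksort_mask_rcnn_r50_fpn_1x_coco.py",
    "checkpoint.pth",
    gpus=4,
    metrics=("bbox", "segm"),
)
```

The returned list can be passed to `subprocess.run(cmd, check=True)` to start the evaluation.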