Banana Cloud

This repository contains our project: deploying a Deep Learning model that classifies the ripeness state of bananas as a Function-as-a-Service using OpenFaaS. Keep reading to find out how to do it.

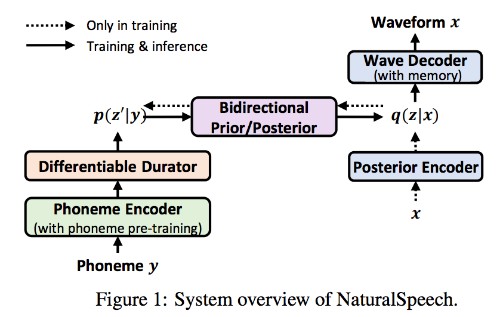

About The Project

In this work we took a pre-trained Convolutional Neural Network that classifies the ripeness state of bananas and made it serverless as a Function-as-a-Service using OpenFaaS. One advantage of this approach is that the images to classify are never saved to disk: the function handler only accepts images sent in an HTTP request, so they are kept in memory.
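As a minimal sketch of the in-memory handling described above, the following Python snippet accepts the raw request body, recognises the image format from its leading bytes, and wraps the payload in a file-like object without touching the disk. The `handle(req)` entry point follows a common OpenFaaS Python template convention, while `detect_format`, `classify` and the JSON response shape are hypothetical names chosen for illustration; the actual handler in this repository may differ.

```python
import io
import json

# Leading bytes used to recognise two common image formats (assumed set).
_SIGNATURES = {
    b"\xff\xd8\xff": "jpeg",
    b"\x89PNG\r\n\x1a\n": "png",
}


def detect_format(data: bytes):
    """Return the image format of the payload, or None if unrecognised."""
    for prefix, name in _SIGNATURES.items():
        if data.startswith(prefix):
            return name
    return None


def classify(stream: io.BytesIO) -> str:
    """Hypothetical placeholder for the CNN inference step."""
    # A real handler would decode the image and run the trained model here.
    return "unknown"


def handle(req: bytes) -> str:
    """Classify an image received as the raw body of an HTTP request."""
    fmt = detect_format(req)
    if fmt is None:
        return json.dumps({"error": "unsupported or missing image"})
    # BytesIO gives a file-like view of the payload; nothing is written to disk.
    stream = io.BytesIO(req)
    return json.dumps({"format": fmt, "ripeness": classify(stream)})
```

Replacing the `classify` stub with the model's inference call would keep the same in-memory flow.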

We deployed our function on a Cloud machine provided by the Italian “Gestione Ampliamento Rete Ricerca” (GARR) institute. To invoke it we set up a small Flask web app: clients send images through a browser, and the web server forwards each request to the instance on the GARR machine, as shown in the following figure:

To learn more about the project see the Project Report

An example of use:

Built With

Getting Started

Before starting, keep in mind that the provided model does not include the weights files, so if you want to use our model you have to train it from scratch.

To run the project on a remote machine rather than locally, you have to provide the WebApp with the URL where the serverless function is running and waiting for HTTP requests.

Prerequisites

- Install Docker

- Install kubectl

- Install k3sup ver. 0.11.3

- Install the faas-cli

- Use arkade to install all the above

- Set this environment variable; we’ll need it for the next commands

export IP="YOUR CLOUD MACHINE'S PUBLIC IP"

- Using k3sup, install k3s on the cloud machine at the IP we’ve set before

k3sup install --ip $IP --user ubuntu --ssh-key $HOME/key.pem

Note that the username is the one on our machine, and the key.pem file is the key used to access our cluster via SSH. Replace both with your own.

- Check if all went well using the following commands

export KUBECONFIG=/home/<username>/kubeconfig
kubectl config set-context linode-openfaas
kubectl get nodes -o wide

- Install OpenFaaS using arkade

arkade install openfaas

- Check that OpenFaaS was successfully installed

kubectl get deploy --namespace openfaas

- Forward all requests made to http://localhost:8080 to the pod running the gateway service.

kubectl port-forward -n openfaas svc/gateway 8080:8080 &
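Before running the commands above, it can help to confirm that the prerequisite command-line tools are available on the PATH. The snippet below is a small sketch using only the Python standard library; the tool list mirrors the prerequisites in this section, and the helper name `missing_tools` is an assumption made for illustration.

```python
import shutil

# Executable names taken from the prerequisites listed in this section.
REQUIRED_TOOLS = ("docker", "kubectl", "k3sup", "faas-cli", "arkade")


def missing_tools(tools=REQUIRED_TOOLS):
    """Return the tools from `tools` that cannot be found on the PATH."""
    return [tool for tool in tools if shutil.which(tool) is None]


if __name__ == "__main__":
    missing = missing_tools()
    if missing:
        print("Missing tools:", ", ".join(missing))
    else:
        print("All prerequisite tools were found.")
```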

Creation and Configuration of the function

- Clone the repository

git clone https://github.com/BananaCloud-CC2022-Parthenope/BananaCloud.git

- Move to the OpenFaaS_function folder

- Edit the banana-cloud.yml updating the “<docker_account>” line in the image field with your DockerHub username

- Login to your docker account in the shell

- Build the function

faas-cli build -f banana-cloud.yml

- Push the built image to DockerHub

faas-cli push -f banana-cloud.yml

- Deploy the DockerHub image to the Kubernetes cluster on the cloud machine

faas-cli deploy -f banana-cloud.yml

- Set the gateway URL to make the function accessible

export OPENFAAS_URL=http://CLOUD_MACHINE_IP:PORT

Now the function is running and you can send the image you want to classify via an HTTP request to http://YOUR_CLOUD_MACHINE_IP:PORT/function/banana-cloud
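The request described above can be sketched with the Python standard library. This example assumes the function accepts the raw image bytes as the POST body; the `Content-Type` value, the helper names `build_request` and `classify_image`, and the 30-second timeout are illustrative choices, so adjust them to match the handler you deployed.

```python
import urllib.request


def build_request(image_bytes: bytes, gateway: str,
                  function: str = "banana-cloud") -> urllib.request.Request:
    """Build a POST request carrying the image to <gateway>/function/<function>."""
    url = f"{gateway.rstrip('/')}/function/{function}"
    return urllib.request.Request(
        url,
        data=image_bytes,
        method="POST",
        # Assumption: the handler reads the raw body, not a multipart form.
        headers={"Content-Type": "application/octet-stream"},
    )


def classify_image(path: str, gateway: str) -> str:
    """Send the image stored at `path` to the function and return the reply text."""
    with open(path, "rb") as fh:
        request = build_request(fh.read(), gateway)
    with urllib.request.urlopen(request, timeout=30) as response:
        return response.read().decode("utf-8")
```

For example, `classify_image("banana.jpg", "http://YOUR_CLOUD_MACHINE_IP:PORT")` would post a local file named `banana.jpg` (a hypothetical file name) to the deployed function.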

Metrics

To check the state of the function, we monitor some metrics using Prometheus and Grafana.

- Expose the OpenFaaS Prometheus service

kubectl expose deployment prometheus -n openfaas --type=NodePort --name=prometheus-ui

- Forward all the requests made to http://localhost:9090 to the pod running the prometheus-ui service:

kubectl port-forward -n openfaas svc/prometheus-ui 9090:9090 &

- To run Grafana, deploy this image

kubectl run grafana -n openfaas --image=stefanprodan/faas-grafana:4.6.3 --port=3000

- Expose the Grafana Pod, making it an active service

kubectl expose pods grafana -n openfaas --type=NodePort --name=grafana

- Forward all the requests made to http://localhost:3000 to the pod running the grafana service:

kubectl port-forward -n openfaas svc/grafana 3000:3000 &

- Go to the Grafana dashboard by visiting http://localhost:3000 (username/password are admin/admin), then select Dashboard->Import

- On this page there is a text field labeled “Grafana.com Dashboard”; paste this link there to attach Grafana to the Prometheus metrics: https://grafana.com/grafana/dashboards/3434

- After clicking Load, in the “faas” field of the next window select the “faas” attribute.

Now you should be monitoring all the metrics of the running function on the Cloud Machine.
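The same metrics can also be read programmatically through the Prometheus HTTP API. The sketch below assumes Prometheus is reachable at http://localhost:9090 through the port-forward set up above and queries the OpenFaaS gateway counter `gateway_function_invocation_total`; the helper names are hypothetical, and the label layout should be checked against your own Prometheus instance.

```python
import json
import urllib.parse
import urllib.request


def query_url(base: str, promql: str) -> str:
    """Build an instant-query URL for the Prometheus HTTP API."""
    query = urllib.parse.urlencode({"query": promql})
    return f"{base.rstrip('/')}/api/v1/query?{query}"


def parse_vector(payload: dict) -> dict:
    """Sum the current sample values per function_name label."""
    if payload.get("status") != "success":
        raise ValueError("Prometheus query did not succeed")
    totals = {}
    for series in payload["data"]["result"]:
        name = series["metric"].get("function_name", "")
        # Each instant-vector sample is [timestamp, "value-as-string"].
        totals[name] = totals.get(name, 0.0) + float(series["value"][1])
    return totals


def invocation_counts(base: str = "http://localhost:9090") -> dict:
    """Fetch invocation totals per function from a running Prometheus."""
    url = query_url(base, "gateway_function_invocation_total")
    with urllib.request.urlopen(url, timeout=10) as response:
        return parse_vector(json.load(response))
```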

Autoscaling

To enable scaling of the function, we used the Kubernetes Horizontal Pod Autoscaler, which relies on the Metrics Server.

You can find the installation and configuration guide for this autoscaler here
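To give an idea of how the Horizontal Pod Autoscaler picks a replica count, here is a sketch of the scaling rule described in the Kubernetes documentation: the desired count is the current count scaled by the ratio between the observed and the target metric, rounded up. The function name, the replica bounds and the example values are assumptions for illustration; the 0.1 tolerance matches the documented Kubernetes default.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float,
                     target_metric: float, min_replicas: int = 1,
                     max_replicas: int = 10, tolerance: float = 0.1) -> int:
    """Approximate the HPA rule: ceil(current * observed / target), clamped."""
    ratio = current_metric / target_metric
    # Within the tolerance band the autoscaler leaves the replica count alone.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))
```

For instance, with 2 replicas at 90% average CPU and a 50% target, the ratio is 1.8 and the rule asks for 4 replicas.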

Below is an example of a Grafana dashboard with metrics and autoscaling.

Web App

To easily see the responses of the function, we made a simple Flask-based web application. To run it, open another shell, go to the WebApp folder and, after installing Flask, follow these steps.

- Set these environment variables

export FLASK_APP=server
export FLASK_ENV=deployment

- In the server.py file of the WebApp, don’t forget to set the url variable to “http://CLOUD_MACHINE_IP:PORT/function/banana-cloud”

- Run the Flask app with

flask run

- Now you can visit http://localhost:5000 in your browser to upload banana images and send requests to the function!

Contributing

Contributions are what make the open source community such an amazing place to learn, inspire, and create. Any contributions you make are greatly appreciated.

If you have a suggestion that would make this better, please fork the repo and create a pull request. You can also simply open an issue with the tag “enhancement”. Don’t forget to give the project a star! Thanks again!

- Fork the Project

- Create your Feature Branch (git checkout -b feature/AmazingFeature)

- Commit your Changes (git commit -m 'Add some AmazingFeature')

- Push to the Branch (git push origin feature/AmazingFeature)

- Open a Pull Request

License

Distributed under the MIT License. See LICENSE.txt for more information.

Contacts

Antonio Di Marino – University of Naples Parthenope – email – LinkedIn

Vincenzo Bevilacqua – University of Naples Parthenope – email

Michele Zito – University of Naples Parthenope – email

Acknowledgments

- We thank the Italian “Gestione Ampliamento Rete Ricerca” (GARR) institute for letting us use one of their Cloud machines as a cluster for this project – Learn More