RoRD

Rotation-Robust Descriptors and Orthographic Views for Local Feature Matching

Evaluation and Datasets

- MMA (mean matching accuracy): trained on PhotoTourism; tested on HPatches and the proposed Rotated HPatches.

- Pose Estimation: trained on the same PhotoTourism datasets as used for MMA; tested on the proposed DiverseView dataset.

- Visual Place Recognition: trained and tested on Oxford RobotCar training and testing sequences, respectively.
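MMA on HPatches is commonly computed as the fraction of matches whose reprojection error under the ground-truth homography falls below a pixel threshold, averaged over all image pairs. The sketch below assumes that definition with thresholds of 1 to 10 px; the function names are hypothetical and this is not the repository's evaluation code.

```python
import numpy as np

def project(H, pts):
    """Apply a 3x3 homography H to an (N, 2) array of pixel coordinates."""
    homog = np.hstack([pts, np.ones((pts.shape[0], 1))])
    warped = homog @ H.T
    return warped[:, :2] / warped[:, 2:3]

def matching_accuracy(H_gt, kps1, kps2, thresholds=range(1, 11)):
    """Fraction of matches whose reprojection error is at most each pixel threshold.

    Assumes kps1[i] (image 1) is matched to kps2[i] (image 2).
    """
    errors = np.linalg.norm(project(H_gt, kps1) - kps2, axis=1)
    return np.array([(errors <= t).mean() if len(errors) else 0.0 for t in thresholds])

def mean_matching_accuracy(pairs, thresholds=range(1, 11)):
    """Average the per-pair accuracies over (H_gt, kps1, kps2) tuples: one value per threshold."""
    thresholds = list(thresholds)
    return np.mean([matching_accuracy(H, k1, k2, thresholds) for H, k1, k2 in pairs], axis=0)
```

Plotting the returned array against the thresholds gives the usual MMA curve.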

Pretrained Models

Download the models from Google Drive (73.9 MB) into the base directory.

Evaluating RoRD

You can evaluate RoRD on the demo images or replace them with your own images.

- Dependencies can be installed in a conda or virtualenv environment by running:

pip install -r requirements.txt

python extractMatch.py <rgb_image1> <rgb_image2> --model_file <path to the model file RoRD>- Example:

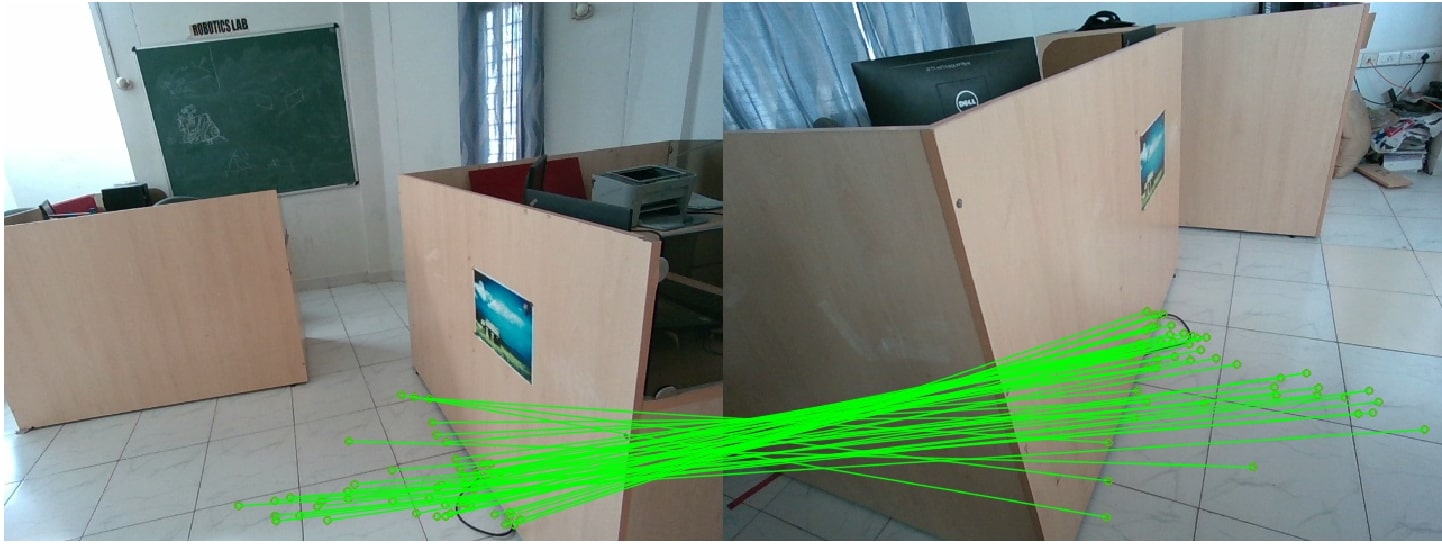

python extractMatch.py demo/rgb/rgb1_1.jpg demo/rgb/rgb1_2.jpg --model_file models/rord.pth - This should give you output like this:

RoRD

SIFT
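D2-Net-style pipelines typically match descriptors by mutual nearest neighbours: a pair is kept only if each descriptor is the other's best match. The sketch below illustrates that idea with NumPy. Whether extractMatch.py uses exactly this strategy (or adds a ratio test or RANSAC) is an assumption, and mutual_nn_match is a hypothetical name.

```python
import numpy as np

def mutual_nn_match(desc1, desc2):
    """Return (K, 2) index pairs (i, j) where desc1[i] and desc2[j] are mutual nearest neighbours.

    desc1: (N, D) and desc2: (M, D) arrays, assumed L2-normalised.
    """
    # For unit-norm vectors, the largest dot product corresponds to the smallest L2 distance.
    sim = desc1 @ desc2.T
    nn12 = sim.argmax(axis=1)  # best match in image 2 for each descriptor of image 1
    nn21 = sim.argmax(axis=0)  # best match in image 1 for each descriptor of image 2
    idx1 = np.arange(desc1.shape[0])
    mutual = nn21[nn12] == idx1  # keep only pairs that agree in both directions
    return np.stack([idx1[mutual], nn12[mutual]], axis=1)
```

The cross-check discards many ambiguous matches at the cost of some recall, which is usually a good trade before a geometric verification step.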

DiverseView Dataset

Download the dataset from Google Drive (97.8 MB) into the base directory. This is only needed if you want to evaluate on the DiverseView dataset.

Evaluation on DiverseView Dataset

The DiverseView dataset is a custom dataset of 4 scenes whose images exhibit large camera rotations and viewpoint changes.

- Pose estimation on a single image pair of the DiverseView dataset:

cd demo
python register.py --rgb1 <path to rgb image 1> --rgb2 <path to rgb image 2> --depth1 <path to depth image 1> --depth2 <path to depth image 2> --model_rord <path to the RoRD model file>

- Example:

python register.py --rgb1 rgb/rgb2_1.jpg --rgb2 rgb/rgb2_2.jpg --depth1 depth/depth2_1.png --depth2 depth/depth2_2.png --model_rord ../models/rord.pth

- This should produce output like the following:

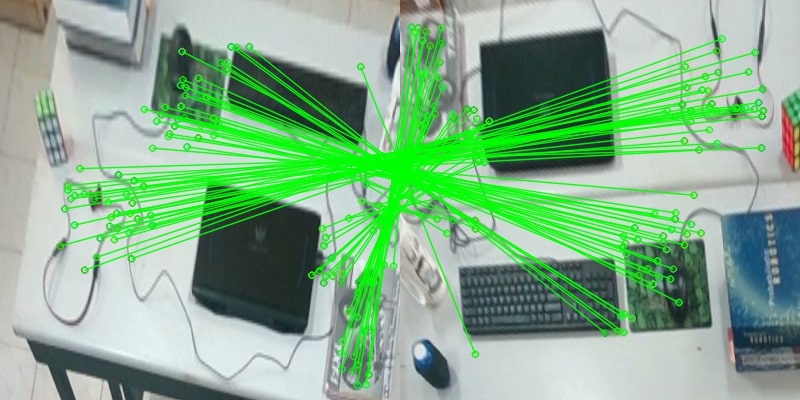

RoRD matches in perspective view

RoRD matches in orthographic view

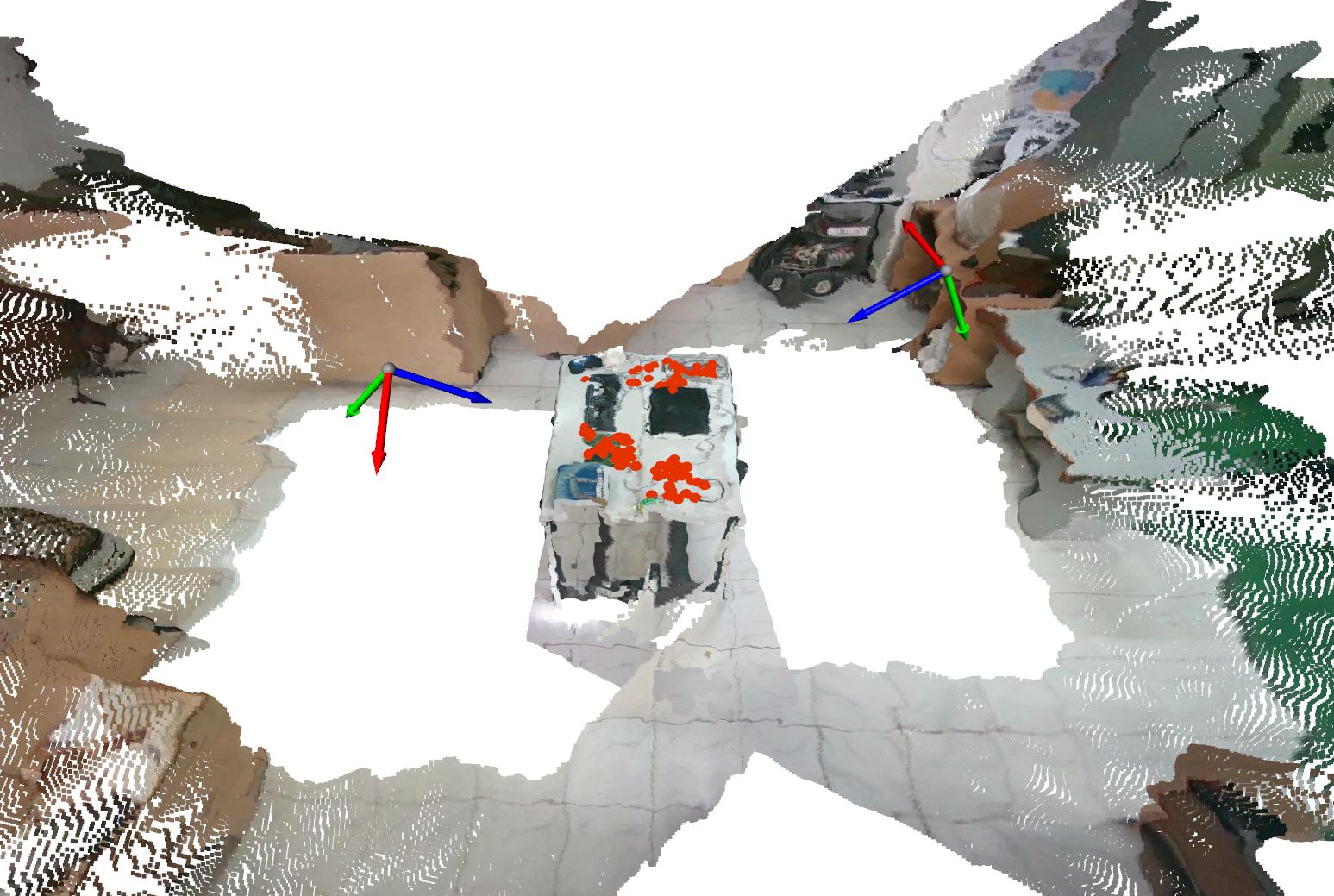

- To visualize the registered point cloud, add the --viz3d flag:

python register.py --rgb1 rgb/rgb2_1.jpg --rgb2 rgb/rgb2_2.jpg --depth1 depth/depth2_1.png --depth2 depth/depth2_2.png --model_rord ../models/rord.pth --viz3d

PointCloud registration using correspondences
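Registering an RGB-D pair from 2D correspondences generally means lifting the matched pixels to 3D with the depth images and then fitting a rigid transform. The sketch below shows one standard way to do this: pinhole back-projection followed by the SVD-based Kabsch solution. The intrinsics fx, fy, cx, cy, the assumption that depth is already in metric units, and the function names are hypothetical; register.py may differ, for example by wrapping the fit in RANSAC or using a point cloud library.

```python
import numpy as np

def backproject(uv, depth, fx, fy, cx, cy):
    """Lift (N, 2) pixel coordinates to (N, 3) camera-frame points with a pinhole model.

    depth: (N,) depth at each pixel; any depth-image scale factor is assumed already applied.
    """
    x = (uv[:, 0] - cx) * depth / fx
    y = (uv[:, 1] - cy) * depth / fy
    return np.stack([x, y, depth], axis=1)

def kabsch(src, dst):
    """Least-squares rotation R and translation t such that dst ~= src @ R.T + t."""
    src_c, dst_c = src.mean(axis=0), dst.mean(axis=0)
    H = (src - src_c).T @ (dst - dst_c)     # 3x3 cross-covariance of the centred point sets
    U, _, Vt = np.linalg.svd(H)
    d = np.sign(np.linalg.det(Vt.T @ U.T))  # flip one axis if SVD would yield a reflection
    D = np.diag([1.0, 1.0, d])
    R = Vt.T @ D @ U.T
    t = dst_c - R @ src_c
    return R, t
```

Because a few wrong matches can skew a plain least-squares fit, this estimate is usually run inside a RANSAC loop over random minimal subsets of three correspondences.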

- Pose estimation on a sequence of the DiverseView dataset:

cd evaluation/DiverseView/
python evalRT.py --dataset <path to DiverseView dataset> --sequence <sequence name> --model_rord <path to RoRD model> --output_dir <name of output dir>

- Example:

python evalRT.py --dataset /path/to/preprocessed/ --sequence data1 --model_rord ../../models/rord.pth --output_dir out

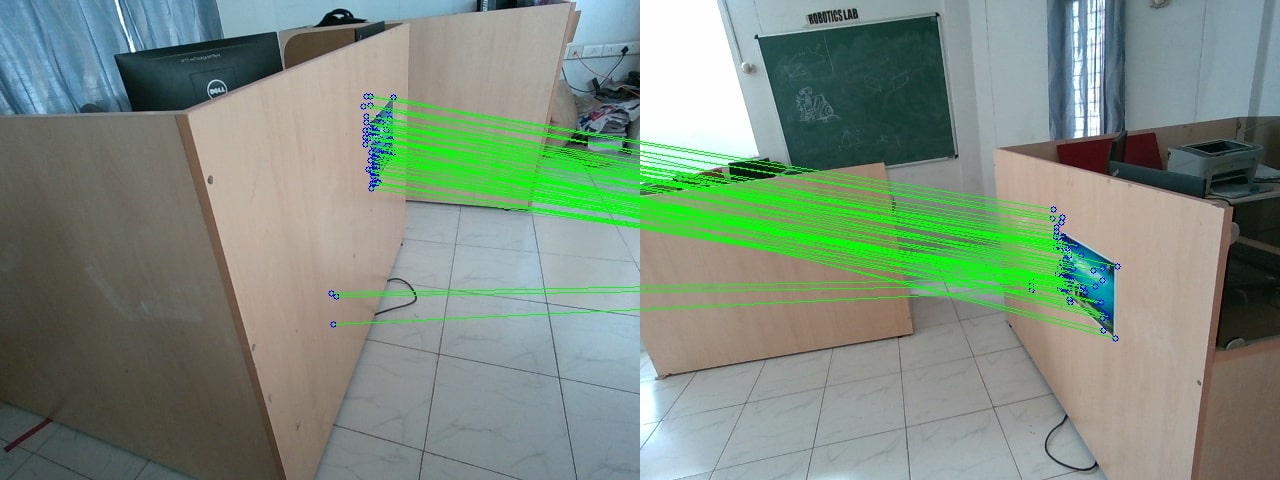

- This would generate

outfolder containing predicted transformations and matching results inout/visfolder, containing images like below:

RoRD
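The repository does not yet include code for computing errors on DiverseView (see TO-DO). As an illustration only, a common choice is the geodesic rotation error and the Euclidean translation error between predicted and ground-truth 4x4 transforms. The sketch assumes both transforms are available as NumPy arrays; pose_errors is a hypothetical helper, not the authors' evaluation protocol.

```python
import numpy as np

def pose_errors(T_pred, T_gt):
    """Rotation error (degrees) and translation error between two 4x4 rigid transforms."""
    R_rel = T_pred[:3, :3].T @ T_gt[:3, :3]
    # The rotation angle satisfies trace(R) = 1 + 2cos(angle); clip guards arccos against noise.
    cos_angle = np.clip((np.trace(R_rel) - 1.0) / 2.0, -1.0, 1.0)
    rot_err = np.degrees(np.arccos(cos_angle))
    trans_err = np.linalg.norm(T_pred[:3, 3] - T_gt[:3, 3])
    return rot_err, trans_err
```

The translation error is in the same units as the transforms, so it depends on how the depth images were scaled.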

Training RoRD on PhotoTourism Images

- Training uses rotation homographies and is initialized from D2-Net weights (download the base models as described in Pretrained Models).

- Download the brandenburg_gate dataset used in configs/train_scenes_small.txt (5.3 GB) into the phototourism folder.

- The folder structure should be:
  - phototourism/
    - brandenburg_gate
      - dense
        - images
        - stereo
        - sparse

- Run training:

python trainPT_ipr.py --dataset_path <path_to_phototourism_folder> --init_model models/d2net.pth --plot
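An in-plane rotation of an image can be expressed as a 3x3 homography. A minimal sketch, assuming rotation about the image centre: the range of angles sampled during training and how trainPT_ipr.py combines this with other warps are not specified here, and rotation_homography is a hypothetical helper.

```python
import numpy as np

def rotation_homography(theta_deg, width, height):
    """3x3 homography rotating an image by theta_deg about its centre.

    Built as T(c) @ R(theta) @ T(-c): shift the centre to the origin, rotate, shift back.
    The centre is taken as (width / 2, height / 2); other pixel-centre conventions differ slightly.
    """
    cx, cy = width / 2.0, height / 2.0
    a = np.deg2rad(theta_deg)
    to_origin = np.array([[1, 0, -cx], [0, 1, -cy], [0, 0, 1]], dtype=float)
    rotate = np.array([[np.cos(a), -np.sin(a), 0.0], [np.sin(a), np.cos(a), 0.0], [0.0, 0.0, 1.0]])
    back = np.array([[1, 0, cx], [0, 1, cy], [0, 0, 1]], dtype=float)
    return back @ rotate @ to_origin
```

The resulting matrix can be passed to an image-warping routine such as OpenCV's cv2.warpPerspective(img, H, (width, height)), and the same H maps keypoints between the original and rotated views, which is what makes it usable as ground truth for correspondences.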

TO-DO

- [ ] Provide VPR code

- [ ] Provide combined training of RoRD + D2-Net

- [ ] Provide code for calculating errors on the DiverseView dataset

Credits

Our base model is borrowed from D2-Net.

BibTeX

If you use this code in your project, please cite the following paper:

@misc{rord2021,
  title={RoRD: Rotation-Robust Descriptors and Orthographic Views for Local Feature Matching},
  author={Udit Singh Parihar and Aniket Gujarathi and Kinal Mehta and Satyajit Tourani and Sourav Garg and Michael Milford and K. Madhava Krishna},
  year={2021},
  eprint={2103.08573},
  archivePrefix={arXiv},
  primaryClass={cs.CV}
}