CameraTraps

Tools for training and running detectors and classifiers for wildlife images collected from motion-triggered cameras.

This repo contains the tools for training, running, and evaluating detectors and classifiers for images collected from motion-triggered camera traps. The core functionality provided is:

- Data parsing from frequently-used camera trap metadata formats into a common format

- Training and evaluation of detectors, particularly our “MegaDetector”, which does a pretty good job finding terrestrial animals in a variety of ecosystems

- Training and evaluation of species-level classifiers for specific data sets

- A Web-based demo that runs our models via a REST API hosted on a Web endpoint

- Miscellaneous useful tools for manipulating camera trap data

- Research experiments we’re doing around camera trap data (i.e., some directories are highly experimental and you should take them with a grain of salt)

Classifiers and detectors are trained using TensorFlow.

This repo is maintained by folks in the Microsoft AI for Earth program who like looking at pictures of animals. I mean, we want to use machine learning to support conservation too, but we also really like looking at pictures of animals.

Data

This repo does not directly host camera trap data, but we work with our collaborators to make data and annotations available whenever possible on lila.science.

Models

This repo does not extensively host models, though we will release models when they are general enough that they might be useful to other people.

MegaDetector

Speaking of models that might be useful to other people, we have trained a one-class animal detector on several hundred thousand bounding boxes from a variety of ecosystems. Lots more information, including download links, is on the MegaDetector page.

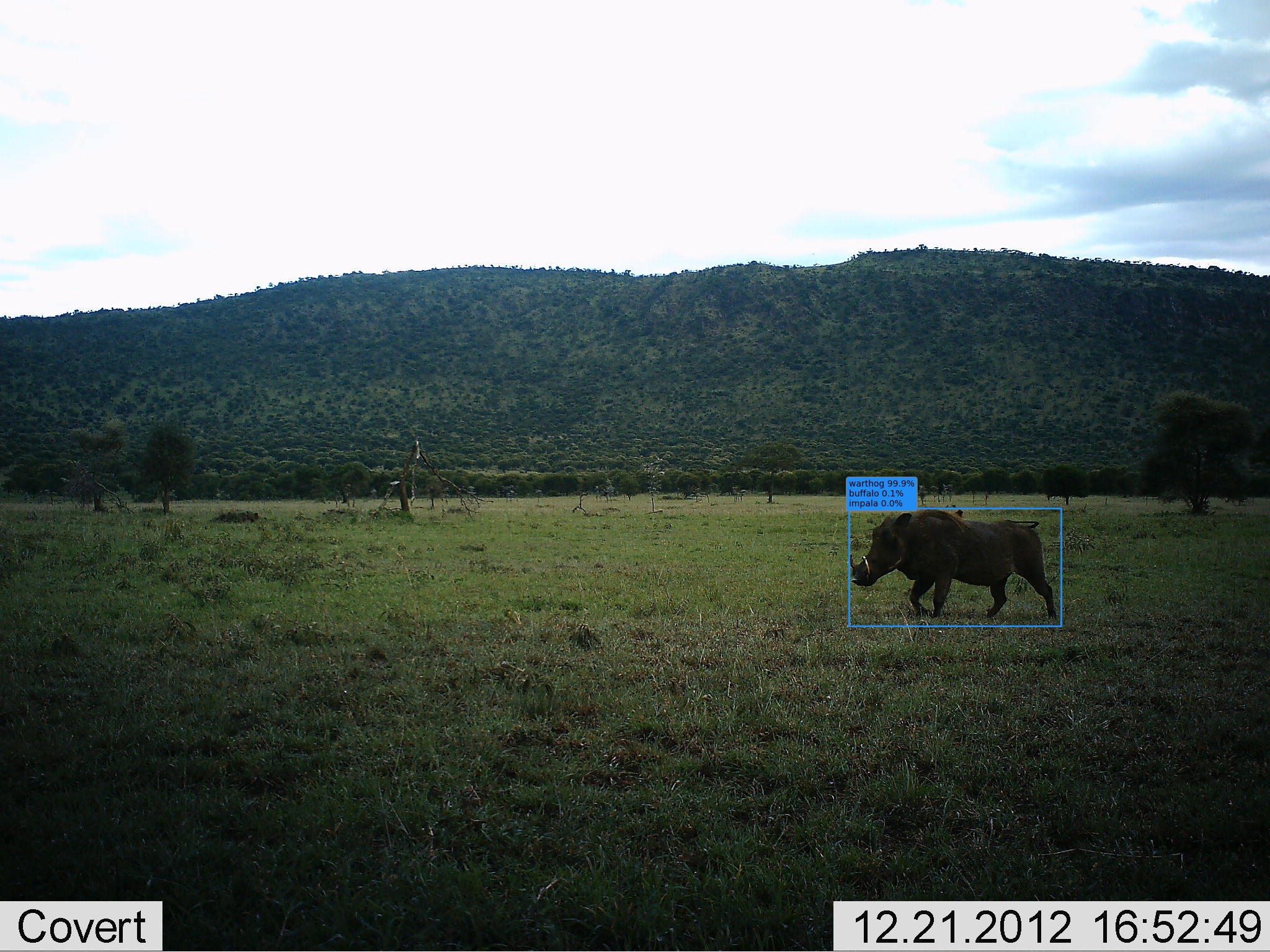

Here’s a “teaser” image of what detector output looks like:

Image credit University of Washington.
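A common first step when consuming detector output is to discard low-confidence detections. The sketch below is a minimal illustration under stated assumptions: it assumes a hypothetical per-image structure in which each detection is a dict with a "conf" score in [0, 1] and a "bbox" in normalized [x_min, y_min, width, height] form. Check the MegaDetector page for the real output format. The 0.8 threshold is an arbitrary illustrative value, not a recommended setting.

```python
def filter_detections(detections, threshold=0.8):
    """Keep detections whose confidence is at or above threshold.

    Assumes (hypothetically) that each detection is a dict with a 'conf'
    score in [0, 1] and a 'bbox' list of normalized
    [x_min, y_min, width, height] values.
    """
    return [d for d in detections if d["conf"] >= threshold]


if __name__ == "__main__":
    # Hypothetical detections for a single image; not real MegaDetector output.
    detections = [
        {"conf": 0.97, "bbox": [0.10, 0.20, 0.30, 0.25]},
        {"conf": 0.35, "bbox": [0.60, 0.55, 0.10, 0.10]},
    ]
    kept = filter_detections(detections, threshold=0.8)
    print("{} of {} detections kept".format(len(kept), len(detections)))
```

The code avoids f-strings so it also runs on Python 3.5, the version used in the installation instructions.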

Contact

For questions about this repo, contact [email protected].

Contents

This repo is organized into the following folders...

api

Code for hosting our models as an API, either for synchronous operation (e.g. for real-time inference or for our Web-based demo) or as a batch process (for large biodiversity surveys).

classification

Code for training species classifiers on new data sets, generally trained on crops generated via an existing detector. We’ll release some classifiers soon, but more importantly, here’s a tutorial on training your own classifier using our detector and our training pipeline.
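Training a classifier on detector crops means converting each detected box into pixel coordinates and cutting that region out of the image. The sketch below covers only the coordinate step. It assumes boxes in normalized [x_min, y_min, width, height] form, which is an assumption you should check against the detector’s documented output. It returns a (left, upper, right, lower) tuple, the form that Pillow’s Image.crop accepts. The function name is hypothetical and not part of this repo’s pipeline.

```python
def normalized_to_pixel_box(bbox, image_width, image_height):
    """Convert a normalized [x_min, y_min, width, height] box into a
    (left, upper, right, lower) pixel tuple, clamped to the image bounds.
    """
    x_min, y_min, w, h = bbox
    # Scale to pixels and round to the nearest integer coordinate.
    left = max(0, int(round(x_min * image_width)))
    upper = max(0, int(round(y_min * image_height)))
    # Clamp the far edges so boxes extending past the image stay valid.
    right = min(image_width, int(round((x_min + w) * image_width)))
    lower = min(image_height, int(round((y_min + h) * image_height)))
    return (left, upper, right, lower)


if __name__ == "__main__":
    # A hypothetical detection on a 2048 x 1536 image.
    print(normalized_to_pixel_box([0.1, 0.2, 0.3, 0.25], 2048, 1536))
```

With Pillow installed, a crop would then be `image.crop(normalized_to_pixel_box(bbox, *image.size))`.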

Oh, and here’s another “teaser image” of what you get at the end of training a classifier:

data_management

Code for:

- Converting frequently-used metadata formats to COCO Camera Traps format

- Creating, visualizing, and editing COCO Camera Traps .json databases

- Generating TFRecords
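COCO Camera Traps is a JSON database modeled on the COCO format, with lists of images, categories, and annotations that refer to each other by id. The sketch below builds a tiny database of that shape and checks it for dangling references. It uses only a handful of fields; see data_management for the authoritative list of required and optional fields. The input record keys ("file_name", "location", "label") and the id scheme are hypothetical.

```python
import json


def build_cct_database(records, category_names):
    """Build a minimal COCO-style camera trap database from simple records.

    records: list of dicts with hypothetical keys 'file_name', 'location',
    and 'label' (a category name, or None for an unlabeled image).
    category_names: list of category names; integer ids are assigned in order.
    """
    categories = [{"id": i, "name": name} for i, name in enumerate(category_names)]
    name_to_id = {c["name"]: c["id"] for c in categories}
    images, annotations = [], []
    for i, rec in enumerate(records):
        image_id = "img_{:06d}".format(i)
        images.append({"id": image_id, "file_name": rec["file_name"],
                       "location": rec["location"]})
        # One image-level annotation per labeled image.
        if rec.get("label") is not None:
            annotations.append({"id": "ann_{:06d}".format(i),
                                "image_id": image_id,
                                "category_id": name_to_id[rec["label"]]})
    return {"info": {"version": "1.0", "description": "example"},
            "images": images, "categories": categories,
            "annotations": annotations}


def check_references(db):
    """Return annotations whose image_id or category_id is not defined."""
    image_ids = {im["id"] for im in db["images"]}
    category_ids = {c["id"] for c in db["categories"]}
    return [a for a in db["annotations"]
            if a["image_id"] not in image_ids
            or a["category_id"] not in category_ids]


if __name__ == "__main__":
    db = build_cct_database(
        [{"file_name": "site1/IMG_0001.JPG", "location": "site1", "label": "deer"},
         {"file_name": "site1/IMG_0002.JPG", "location": "site1", "label": "empty"}],
        ["empty", "deer"])
    assert not check_references(db)
    print(json.dumps(db, indent=1))
```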

demo

Source for the Web-based demo of our MegaDetector model (we’ll release the demo soon!).

detection

Code for training and evaluating detectors.
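Detector evaluation usually matches predicted boxes to ground-truth boxes by intersection-over-union (IoU). A prediction counts as correct when its IoU with a ground-truth box exceeds a threshold, and 0.5 is a common choice (e.g. in PASCAL VOC). The sketch below computes IoU for [x_min, y_min, width, height] boxes. It uses the standard definition and is not necessarily the code in the detection folder.

```python
def iou(box_a, box_b):
    """Intersection-over-union of two [x_min, y_min, width, height] boxes."""
    ax, ay, aw, ah = box_a
    bx, by, bw, bh = box_b
    # Overlap along each axis; zero when the boxes do not intersect.
    inter_w = max(0.0, min(ax + aw, bx + bw) - max(ax, bx))
    inter_h = max(0.0, min(ay + ah, by + bh) - max(ay, by))
    intersection = inter_w * inter_h
    union = aw * ah + bw * bh - intersection
    # Guard against degenerate zero-area boxes.
    return intersection / union if union > 0 else 0.0


if __name__ == "__main__":
    # Two 2x2 boxes overlapping in a 1x1 square: IoU = 1 / (4 + 4 - 1).
    print(iou([0, 0, 2, 2], [1, 1, 2, 2]))
```

The same function works for pixel or normalized coordinates, as long as both boxes use the same convention.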

research

Ongoing research projects that use this repository in one way or another; as of the time I’m editing this README, there are projects in this folder around active learning and the use of simulated environments for training data augmentation.

sandbox

Random things that don’t fit in any other directory. Currently contains a single file, a not-super-useful but super-duper-satisfying and mostly-successful attempt to use OCR to pull metadata out of image pixels in a fairly generic way, to handle those pesky cases when image metadata is lost.

Installation

The required Python packages for running utility scripts in this repo are listed in requirements.txt. Scripts in some folders, including api, detection, and classification, may require additional setup.

If you are not experienced in managing Python environments, we suggest that you start a conda virtual environment and use our utility scripts within that environment. Conda is a package manager for Python (among other things). You can install a lightweight distribution of conda (Miniconda) for your OS via the installers at https://docs.conda.io/en/latest/miniconda.html.

At the terminal, issue the following commands to create a virtual environment via conda called cameratraps, activate it, upgrade the Python package manager pip, and install the required packages:

conda create -n cameratraps python=3.5

conda activate cameratraps

pip install --upgrade pip

pip install -r requirements.txt

Python >= 3.5 should work.

When conda prompts you during environment creation, press 'y' to proceed with installing the packages and their dependencies.

If you run into an error (e.g. 'ValueError... cannot be negative') while creating the environment, make sure to update conda to version 4.5.11 or above. Check the version of conda using conda --version; upgrade it using conda update conda.

To exit the conda environment, issue conda deactivate (the command takes no environment name).

In some scripts, we also assume that you have the AI for Earth utilities repo cloned and its path appended to PYTHONPATH.
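Setting PYTHONPATH in your shell is one option. Alternatively, a script can add the utilities clone to sys.path at startup. The sketch below is a minimal version of that approach. The environment variable name AI4EUTILS_PATH and the default path ~/git/ai4eutils are hypothetical placeholders, not names this repo defines, so point them at wherever you cloned the AI for Earth utilities repo.

```python
import os
import sys


def add_repo_to_path(env_var="AI4EUTILS_PATH", default="~/git/ai4eutils"):
    """Prepend a local clone's directory to sys.path if it is not already there.

    env_var and default are hypothetical placeholders; set them to the
    location of your clone of the AI for Earth utilities repo.
    """
    repo_dir = os.path.abspath(
        os.path.expanduser(os.environ.get(env_var, default)))
    # Avoid adding duplicate entries if called more than once.
    if repo_dir not in sys.path:
        sys.path.insert(0, repo_dir)
    return repo_dir


if __name__ == "__main__":
    print("Using utilities from", add_repo_to_path())
```

Prepending (rather than appending) means the clone takes precedence over any installed package with the same module names. Append instead if you want the opposite behavior.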

Gratuitous pretty camera trap picture

Image credit USDA, from the NACTI data set.