MARVIN

Multi-Agent Routing Value Iteration Network (MARVIN)

In this work, we address the problem of routing multiple agents in a coordinated manner, specifically in the context of autonomous mapping. We created a simulated environment that mimics realistic mapping by autonomous vehicles, with unknown minimum edge coverage and traffic conditions. This repository contains our training code for both reinforcement learning and imitation learning.

Requirements

- Python>=3.6

- tqdm>=4.36.1

- torch==1.4.0

- tensorboardX>=1.9

- numpy>=1.18.1

- cvxpy==1.1.0a1

- scipy==1.4.1
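To confirm that an environment matches the pins above, the installed versions can be listed with a short script. This is a minimal sketch and not part of the repository: the `installed_versions` helper is hypothetical, and it relies on `importlib.metadata`, which assumes Python 3.8 or newer even though the project itself lists Python>=3.6.

```python
from importlib import metadata  # standard library from Python 3.8 onward

# Pins copied from the Requirements list above.
REQUIREMENTS = {
    "tqdm": ">=4.36.1",
    "torch": "==1.4.0",
    "tensorboardX": ">=1.9",
    "numpy": ">=1.18.1",
    "cvxpy": "==1.1.0a1",
    "scipy": "==1.4.1",
}


def installed_versions(requirements=REQUIREMENTS):
    """Map each package name to its installed version, or None if it is missing."""
    found = {}
    for name in requirements:
        try:
            found[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            found[name] = None
    return found


# Print one line per requirement so mismatches are easy to spot by eye.
for name, version in installed_versions().items():
    print(f"{name:14s} required {REQUIREMENTS[name]:10s} installed {version or 'MISSING'}")
```

The script only reports versions; it does not compare them against the pins, so any mismatch still has to be checked by hand.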

Setup

In a new Python environment, run the following command to install all dependencies:

python setup.py install

Training

- Step 1: Download the ground truth model

bash download.sh

After the download finishes, the model should be in the marvin/data folder.

- Step 2: Begin training

python train.py --tag <your-unique-tag>

This trains the system on a randomly generated set of toy graphs. To add our realistic traffic conditions, run:

python train.py --tag <your-unique-tag> --traffic --pass_range 3

To train the system using reinforcement learning, add the --rl flag:

python train.py --tag <your-unique-tag> --traffic --pass_range 3 --rl

The kind of trainer and many of the other hyperparameters are defined in marvin/utils/trainer_parameters.py; feel free to adjust them as you see fit. To make a run reproducible, set the seed for the entire setup with the --seed argument.
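For readers wondering what "setting the seed for the entire setup" usually involves, here is a minimal sketch of a seeding helper. It is an assumption about common practice, not the code behind --seed in this repository; `seed_everything` is a hypothetical name, and the NumPy and PyTorch steps are skipped if those packages are not installed.

```python
import random


def seed_everything(seed):
    """Seed the random number generators a training run typically touches."""
    random.seed(seed)  # Python's built-in generator
    try:
        import numpy as np
        np.random.seed(seed)  # NumPy's global generator
    except ImportError:
        pass
    try:
        import torch
        torch.manual_seed(seed)  # PyTorch CPU generator
        torch.cuda.manual_seed_all(seed)  # GPU generators; ignored without CUDA
    except ImportError:
        pass


# Seeding twice with the same value reproduces the same random draws.
seed_everything(1)
first = [random.random() for _ in range(3)]
seed_everything(1)
second = [random.random() for _ in range(3)]
print(first == second)
```

Even with every generator seeded, some GPU operations can remain nondeterministic, so small differences between runs are still possible.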

Testing

To evaluate a model, simply add the --eval and --load <path-to-model.pb> flags to your original training command. This skips the training loop and runs only the validation loop on the test set. The validation loop also writes records of those runs to logs/testing_data for visualization and later qualitative analysis.

python train.py --tag <your-unique-tag> --traffic --pass_range 3 --rl --eval --load <your-weights>

If you want to evaluate using our pretrained models, then run the following for our imitation learning model:

python train.py --tag <your-unique-tag> --traffic --pass_range 3 --eval --load marvin/pretrained/il.pb --seed 1

or the following for our reinforcement learning model:

python train.py --tag <your-unique-tag> --traffic --pass_range 3 --rl --eval --load marvin/pretrained/rl.pb --seed 1
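The commands above combine a small set of flags. The sketch below shows one way such flags could be parsed and read back in plain words. It is a hypothetical stand-in, not the parser in train.py: the flag names come from this README, but the defaults, help strings, and the `build_parser` and `describe` helpers are assumptions made for illustration.

```python
import argparse


def build_parser():
    """Hypothetical parser mirroring the flags used in this README."""
    parser = argparse.ArgumentParser(description="Sketch of the train.py command line")
    parser.add_argument("--tag", required=True, help="unique name for this run")
    parser.add_argument("--traffic", action="store_true", help="enable traffic conditions")
    # The default of 1 is an assumption; the README only shows --pass_range 3.
    parser.add_argument("--pass_range", type=int, default=1)
    parser.add_argument("--rl", action="store_true", help="use reinforcement learning")
    parser.add_argument("--eval", action="store_true", help="run the validation loop only")
    parser.add_argument("--load", default=None, help="path to saved weights")
    # The default seed is an assumption as well.
    parser.add_argument("--seed", type=int, default=0)
    return parser


def describe(args):
    """Summarize in words what a parsed command would do."""
    mode = "evaluate" if args.eval else "train"
    learner = "reinforcement learning" if args.rl else "imitation learning"
    graphs = "toy graphs with traffic" if args.traffic else "toy graphs"
    weights = f", weights from {args.load}" if args.load else ""
    return f"{mode} with {learner} on {graphs}{weights} (seed {args.seed})"


command = "--tag demo --traffic --pass_range 3 --rl --eval --load marvin/pretrained/rl.pb --seed 1"
args = build_parser().parse_args(command.split())
print(describe(args))
```

The sketch treats imitation learning as the mode used when --rl is absent, which matches how the pretrained imitation learning command above omits that flag.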

Results

We are working to release our street graph dataset. It is currently in the licensing phase, and we will post updates on its status as we receive them. In the meantime, the table below reports the average runtime costs when we train our system on our toy dataset with realistic conditions and a seed of 0.

| Model | 2 Agents 25 Nodes | 5 Agents 50 Nodes |
|---|---|---|
| MARVIN IL | 12.06 | 19.86 |
| GVIN | 12.28 | 20.21 |
| GAT | 22.85 | 47.80 |
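To put the table in relative terms, the sketch below computes how much lower the MARVIN IL cost is than each baseline. The numbers are copied from the table; the `relative_reduction` helper is hypothetical, and the calculation assumes that a lower runtime cost is better.

```python
# Average runtime costs copied from the results table.
COSTS = {
    "MARVIN IL": {"2 agents, 25 nodes": 12.06, "5 agents, 50 nodes": 19.86},
    "GVIN": {"2 agents, 25 nodes": 12.28, "5 agents, 50 nodes": 20.21},
    "GAT": {"2 agents, 25 nodes": 22.85, "5 agents, 50 nodes": 47.80},
}


def relative_reduction(baseline, candidate):
    """Fraction by which the candidate cost is lower than the baseline cost."""
    return (baseline - candidate) / baseline


for setting, marvin_cost in COSTS["MARVIN IL"].items():
    for baseline in ("GVIN", "GAT"):
        reduction = relative_reduction(COSTS[baseline][setting], marvin_cost)
        print(f"{setting}: MARVIN IL is {reduction:.1%} below {baseline}")
```

With these figures the reduction is roughly 1.8% and 1.7% against GVIN, and roughly 47.2% and 58.5% against GAT, for the two settings respectively.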

Training Curves

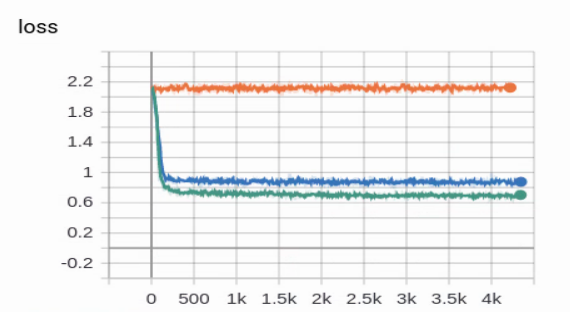

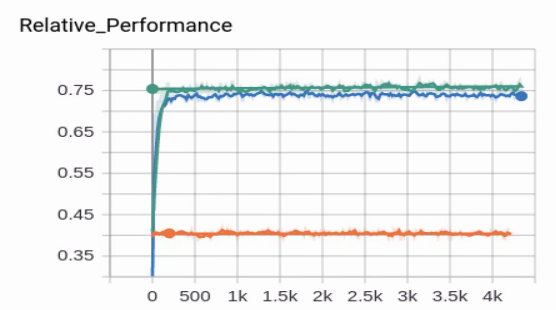

Here we illustrate what training should look like for imitation learning (left) and reinforcement learning (right) when the seed is set to zero.

Loss

Here is the loss function (orange is the Graph Attention Network, blue is the generalized value iteration network, and green is MARVIN)

Relative Performance

Here is the relative performance of the above models, and how they evolve during the training process.

Visualization

The value function that each agent creates can be visualized to determine what their intentions are and how they can evolve over time.
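As a rough illustration of what a value function over a graph looks like, the sketch below runs classic tabular value iteration on a four-node toy graph and prints the result as a text bar chart. This is not MARVIN's learned network or its visualization code: the graph, the reward placement, the discount factor, and the `value_iteration` helper are all assumptions, and the reward is never consumed, which is a simplification.

```python
def value_iteration(neighbors, reward, gamma=0.9, sweeps=200):
    """Tabular value iteration where moving to a node collects that node's reward."""
    values = {node: 0.0 for node in neighbors}
    for _ in range(sweeps):
        # Synchronous update: each node takes the best one-step lookahead.
        values = {
            node: max(reward[nxt] + gamma * values[nxt] for nxt in neighbors[node])
            for node in neighbors
        }
    return values


# Hypothetical line graph 0 - 1 - 2 - 3 with a reward for arriving at node 3.
neighbors = {0: [1], 1: [0, 2], 2: [1, 3], 3: [2]}
reward = {0: 0.0, 1: 0.0, 2: 0.0, 3: 1.0}

values = value_iteration(neighbors, reward)
for node in sorted(values):
    bar = "#" * int(round(values[node] * 4))
    print(f"node {node}: {values[node]:.3f} {bar}")
```

In this toy case the values grow as a node gets closer to the rewarded edge, so following the steepest increase leads an agent toward node 3. Reading intent from a value map works the same way: high-value regions indicate where the agent is inclined to go next.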

TODO

- Release dataset and dataset description

- Parallelize training

- Generalize training environment

- Port to TensorFlow

- Integrate path visualization functions into the codebase