Pointly-supervised 3D Scene Parsing with Viewpoint Bottleneck

Created by Liyi Luo, Beiwen Tian, Hao Zhao and Guyue Zhou from the Institute for AI Industry Research (AIR), Tsinghua University, China.

Introduction

Semantic understanding of 3D point clouds is important for various robotics applications. Because point-wise semantic annotation is expensive, our paper addresses the challenge of learning models from extremely sparse labels. The core problem is how to leverage the large number of unlabeled points.

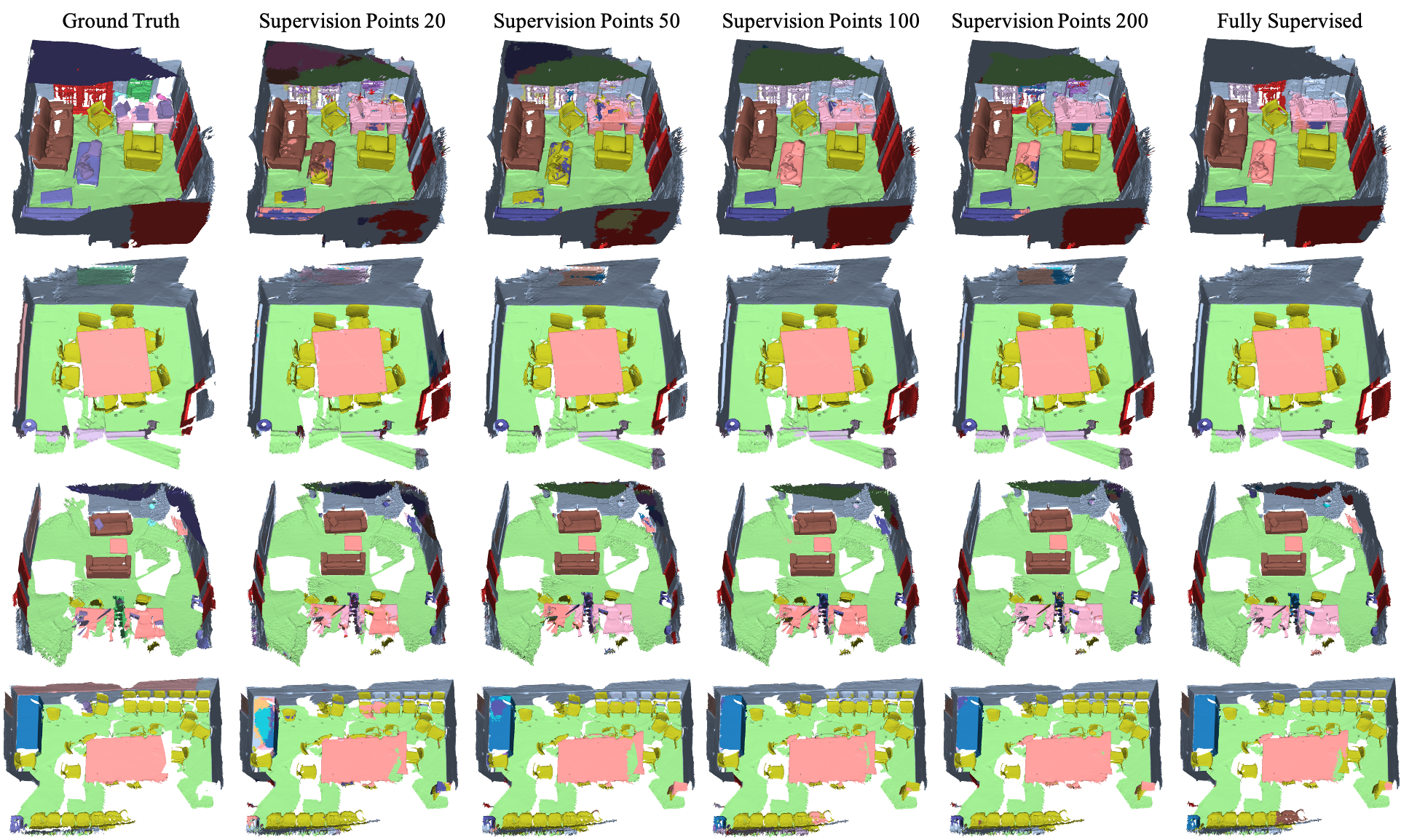

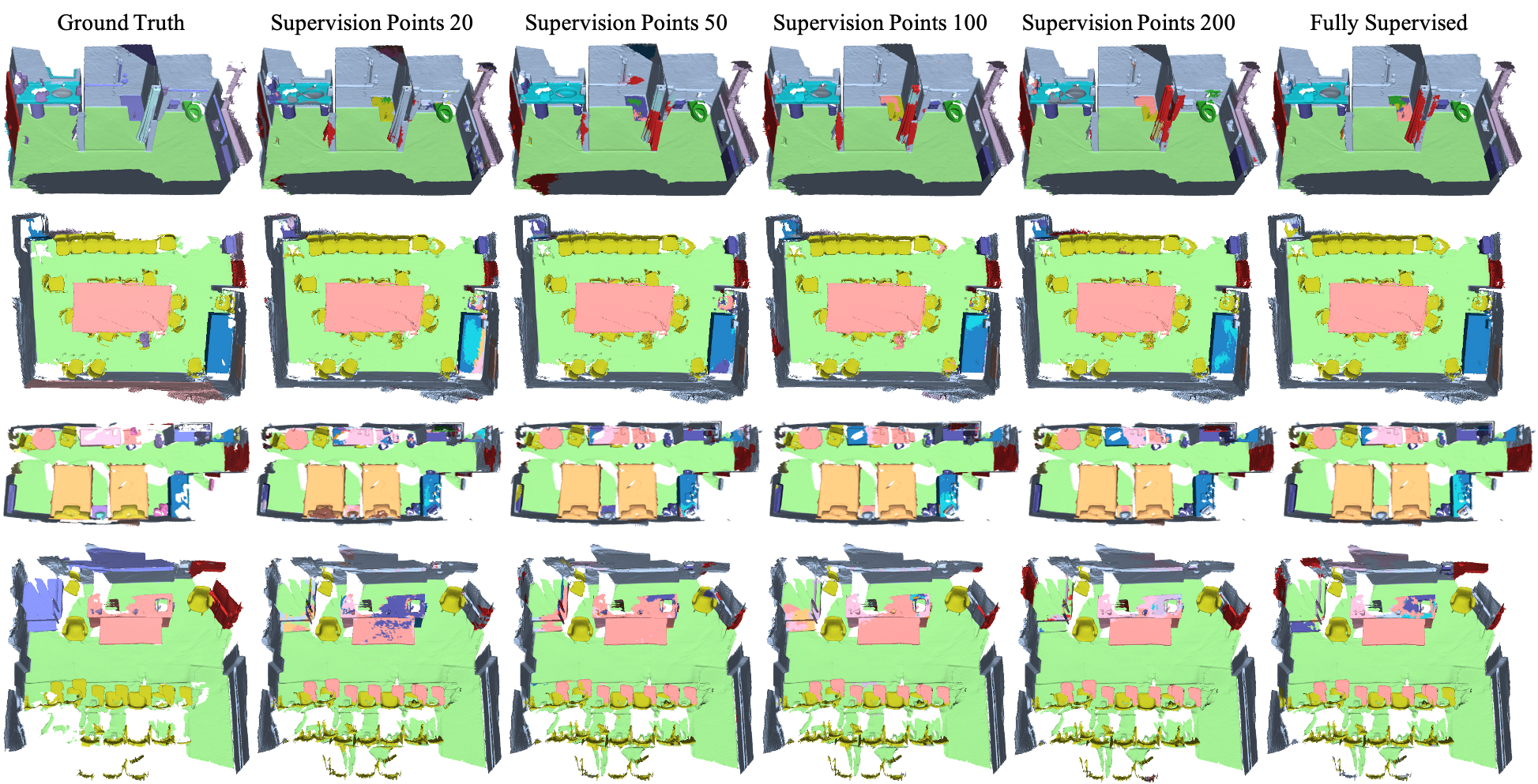

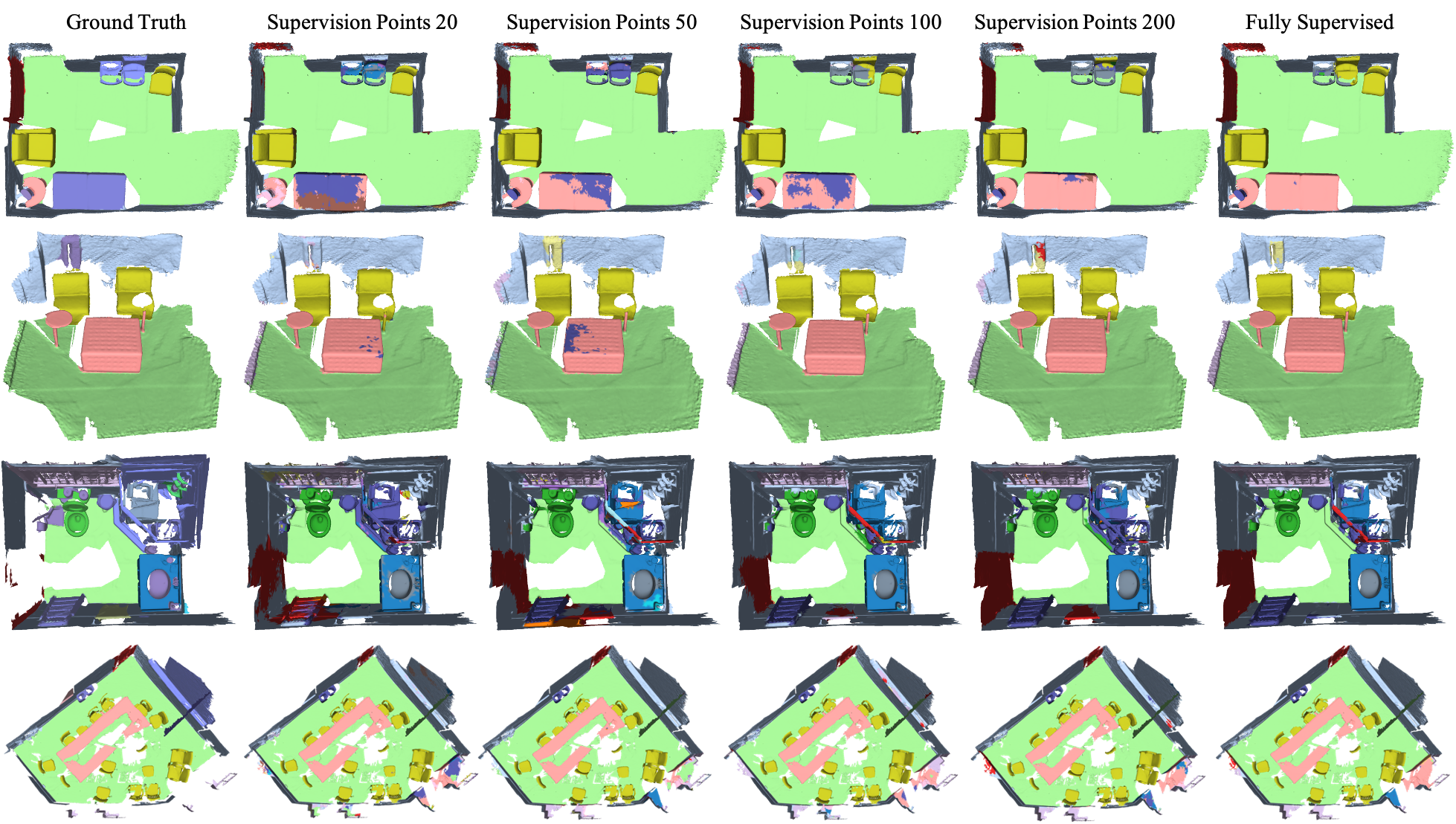

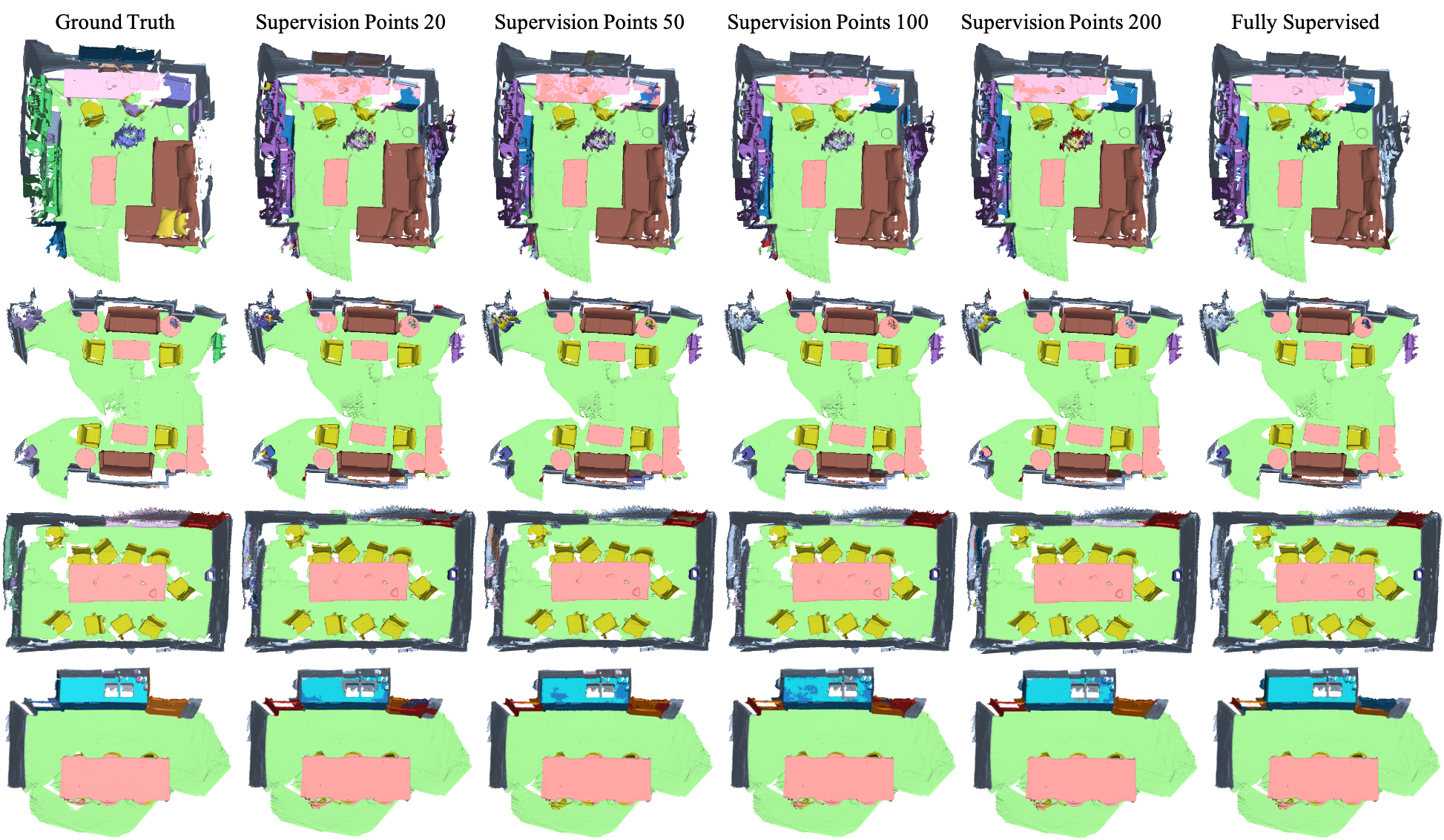

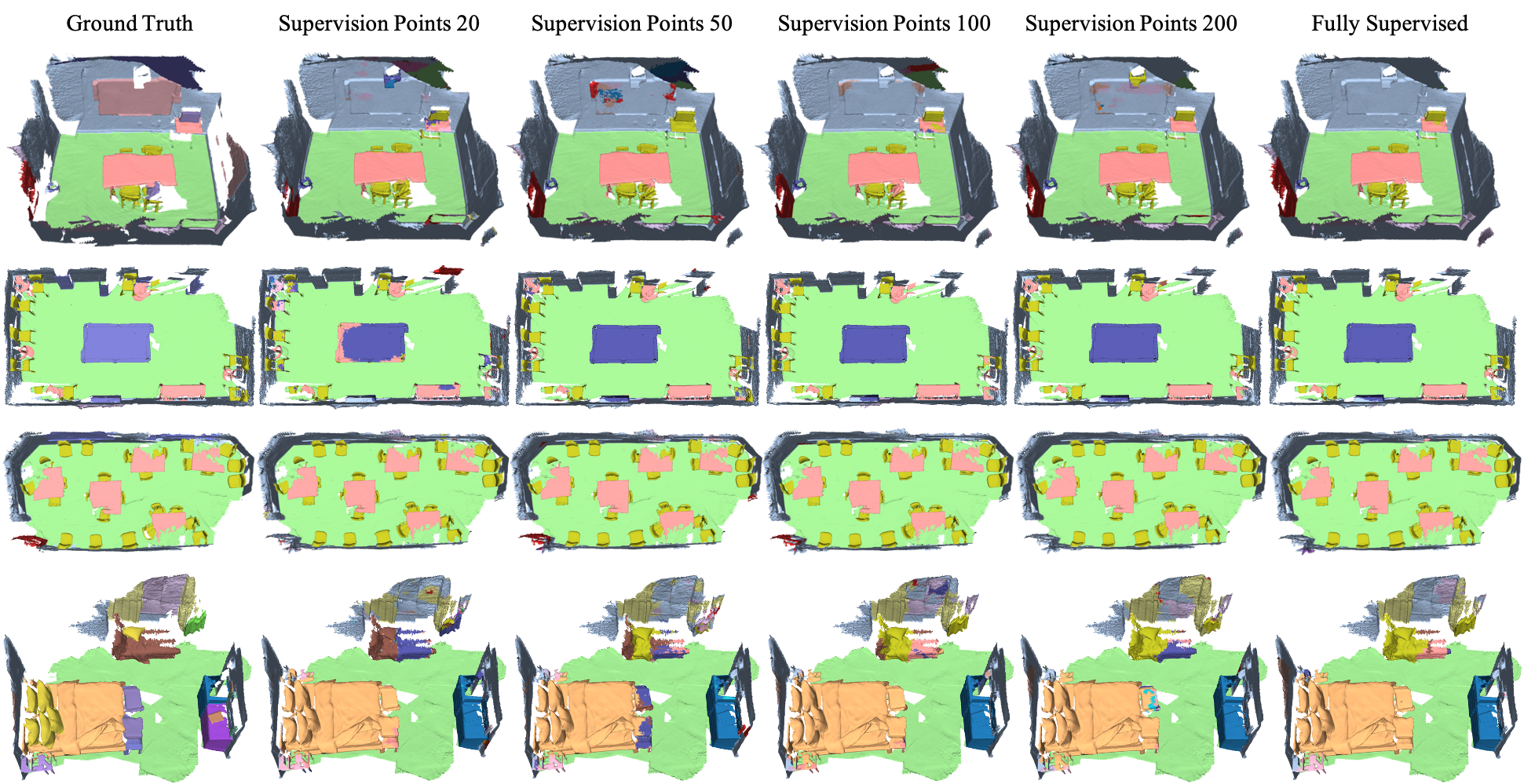

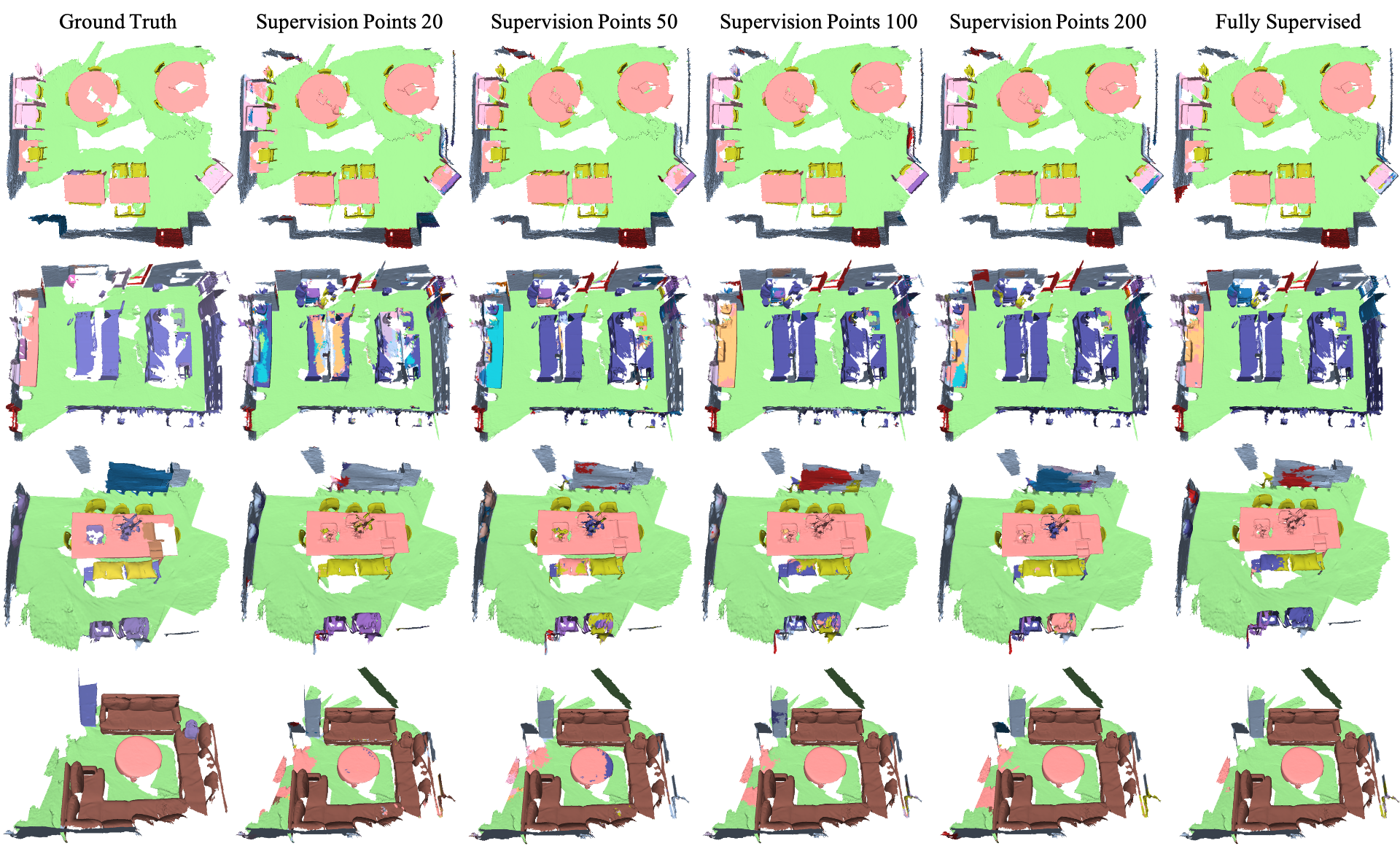

This repository implements viewpoint bottleneck, the self-supervised 3D representation learning framework we propose. It optimizes a mutual-information-based objective applied to point clouds seen from different viewpoints. A principled analysis shows that viewpoint bottleneck leads to an elegant surrogate loss function that is suitable for large-scale point cloud data. Compared with prior art based on contrastive learning, viewpoint bottleneck operates on the feature dimension instead of the sample dimension. This paradigm shift has several advantages: it is easy to implement and tune, needs no negative samples, and performs better on our target downstream task. We evaluate our method on the public ScanNet benchmark under the pointly-supervised setting and achieve the best quantitative results among comparable solutions. We also provide an extensive qualitative inspection of various challenging scenes, which demonstrates that our models produce fairly good scene parsing results for robotics applications.
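To illustrate what "operating on the feature dimension" can mean, here is a small, dependency-free sketch. It assumes a Barlow-Twins-style cross-correlation objective: the features of the same N points seen from two viewpoints are standardized per channel, a D x D cross-correlation matrix is formed (D being the feature dimension), diagonal entries are pulled toward 1 and off-diagonal entries toward 0. Because the matrix is D x D rather than N x N, the cost grows linearly with the number of points and no negative pairs are needed. The paper derives its surrogate loss from a mutual-information objective; this sketch does not reproduce that derivation, and the function names and `off_diag_weight=0.005` are illustrative assumptions. The loss actually implemented under `pretrain/` may differ in normalization, weighting and batching.

```python
import math
import random


def standardize_columns(features):
    """Z-score each feature channel over the N points; returns D lists of length N."""
    n = len(features)
    columns = []
    for col in zip(*features):  # iterate over feature channels
        mean = sum(col) / n
        std = math.sqrt(sum((x - mean) ** 2 for x in col) / n) + 1e-8
        columns.append([(x - mean) / std for x in col])
    return columns


def cross_correlation(view_a, view_b):
    """D x D correlation between channel i of view A and channel j of view B."""
    za, zb = standardize_columns(view_a), standardize_columns(view_b)
    n = len(view_a)
    return [[sum(x * y for x, y in zip(ca, cb)) / n for cb in zb] for ca in za]


def feature_dim_loss(view_a, view_b, off_diag_weight=0.005):
    """Illustrative loss (assumed form): pull the diagonal toward 1 (the same channel
    agrees across viewpoints) and the off-diagonal toward 0 (decorrelated channels)."""
    c = cross_correlation(view_a, view_b)
    d = len(c)
    on_diag = sum((1.0 - c[i][i]) ** 2 for i in range(d))
    off_diag = sum(c[i][j] ** 2 for i in range(d) for j in range(d) if i != j)
    return on_diag + off_diag_weight * off_diag


if __name__ == "__main__":
    rng = random.Random(0)
    # N = 200 points with D = 4 feature channels as seen from viewpoint A.
    view_a = [[rng.gauss(0.0, 1.0) for _ in range(4)] for _ in range(200)]
    # Stand-in for viewpoint B: the same points with slightly perturbed features.
    view_b = [[x + rng.gauss(0.0, 0.1) for x in row] for row in view_a]
    unrelated = [[rng.gauss(0.0, 1.0) for _ in range(4)] for _ in range(200)]
    print(feature_dim_loss(view_a, view_b), feature_dim_loss(view_a, unrelated))
```

Consistent features from the two viewpoints give a loss near zero, while unrelated features are penalized mainly through the diagonal term.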

Citation

If you find our work useful in your research, please consider citing:

@misc{

}

Preparation

Requirements

- Python 3.6 or higher

- CUDA 11.1

We strongly recommend working in a virtual environment (venv or conda).

Installation

Clone the repository and install the requirements:

git clone https://github.com/OPEN-AIR-SUN/ViewpointBottleneck/

cd ViewpointBottleneck

# Uncomment the following commands to create and activate a conda env

# conda create -n env_name python==3.8

# conda activate env_name

pip install -r requirements.txt
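After installation, a quick sanity check against the requirements above can save a failed training run. This is a minimal sketch that checks the Python version and, assuming `requirements.txt` installs PyTorch (the README does not say so explicitly), compares `torch.version.cuda` with the expected CUDA 11.1. The function name `check_environment` is hypothetical.

```python
import sys


def check_environment(expected_cuda="11.1"):
    """Return a list of problems found; an empty list means every check passed."""
    problems = []
    if sys.version_info < (3, 6):
        problems.append("Python 3.6 or higher is required, found %d.%d" % sys.version_info[:2])
    try:
        # Assumption: requirements.txt installs PyTorch; it is not listed in this README.
        import torch
    except ImportError:
        problems.append("PyTorch is not importable; run pip install -r requirements.txt first")
        return problems
    if torch.version.cuda != expected_cuda:
        problems.append("PyTorch was built for CUDA %s, expected %s" % (torch.version.cuda, expected_cuda))
    if not torch.cuda.is_available():
        problems.append("PyTorch cannot see a CUDA device")
    return problems


if __name__ == "__main__":
    issues = check_environment()
    print("\n".join(issues) if issues else "Environment looks OK")
```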

Data Preprocess

Download the ScanNetV2 dataset and the data-efficient setting HERE.

Extract point clouds and annotations by running:

# From root of the repo

# Fully-supervised:

python data_preprocess/scannet.py

# Pointly-supervised:

python data_preprocess/scannet_eff.py

Pretrain the model

# From root of the repo

cd pretrain/

chmod +x ./run.sh

./run.sh

You can override some settings with environment variables:

SHOTS=50 FEATURE_DIM=512 \
LOG_DIR=logs \
PRETRAIN_PATH=actual/path/to/pretrain.pth \
DATASET_PATH=actual/directory/of/dataset \
./run.sh
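If you launch experiments from Python rather than the shell, the same overrides can be passed through the process environment. This sketch assumes only what the example command shows: that `run.sh` reads `SHOTS`, `FEATURE_DIM`, `LOG_DIR`, `PRETRAIN_PATH` and `DATASET_PATH` from its environment. The helpers `pretrain_env` and `launch` are hypothetical, and their default values simply mirror the example command.

```python
import os
import subprocess


def pretrain_env(shots=50, feature_dim=512, log_dir="logs",
                 pretrain_path=None, dataset_path=None, base_env=None):
    """Return a copy of base_env (default: os.environ) with the run.sh overrides applied.

    Arguments left as None are not set here, so any value already in base_env
    (or, presumably, run.sh's own default) applies instead.
    """
    env = dict(os.environ if base_env is None else base_env)
    overrides = {
        "SHOTS": shots,
        "FEATURE_DIM": feature_dim,
        "LOG_DIR": log_dir,
        "PRETRAIN_PATH": pretrain_path,
        "DATASET_PATH": dataset_path,
    }
    for key, value in overrides.items():
        if value is not None:
            env[key] = str(value)  # environment values must be strings
    return env


def launch(script_dir, env, dry_run=True):
    """Run ./run.sh from script_dir with env; in dry-run mode only return the command."""
    cmd = ["./run.sh"]
    if not dry_run:
        subprocess.run(cmd, cwd=script_dir, env=env, check=True)
    return cmd
```

For example, `launch("pretrain", pretrain_env(feature_dim=1024, dataset_path="actual/directory/of/dataset"), dry_run=False)` run from the repository root would correspond to the shell command above with `FEATURE_DIM=1024`.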

Fine-tune the model with a pretrained checkpoint

# From root of the repo

cd finetune/

chmod +x ./run.sh

./run.sh

You can override some settings with environment variables:

SHOTS=50 \
LOG_DIR=logs \
PRETRAIN_PATH=actual/path/to/pretrain.pth \
DATASET_PATH=actual/directory/of/dataset \
./run.sh
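Reproducing one column of the Model Zoo table means fine-tuning once per label budget. The sketch below only generates the environment overrides for such a sweep and prints them as shell lines in the same form as the example above. It assumes that `SHOTS` selects the number of supervised points (20, 50, 100 or 200, matching the table) and, since the pretraining example also takes `SHOTS`, that you may have one pretrained checkpoint per budget. `finetune_sweep`, the `{shots}` placeholder and the `ckpt/` and `logs/shots_N` paths are hypothetical.

```python
import os

# Supervised-point budgets reported in the Model Zoo table.
SHOTS_SETTINGS = (20, 50, 100, 200)


def finetune_sweep(pretrain_path, dataset_path, log_root="logs"):
    """Return one dict of environment overrides per SHOTS setting.

    If pretrain_path contains "{shots}", it is filled in per setting, so each
    budget can use its own pretrained checkpoint.
    """
    runs = []
    for shots in SHOTS_SETTINGS:
        runs.append({
            "SHOTS": str(shots),
            # Hypothetical layout: a separate log directory per budget.
            "LOG_DIR": os.path.join(log_root, "shots_%d" % shots),
            "PRETRAIN_PATH": pretrain_path.format(shots=shots),
            "DATASET_PATH": dataset_path,
        })
    return runs


if __name__ == "__main__":
    # Print shell lines in the same form as the fine-tuning example; run them from finetune/.
    for overrides in finetune_sweep("ckpt/pretrain_{shots}.pth", "actual/directory/of/dataset"):
        print(" ".join("%s=%s" % kv for kv in overrides.items()) + " ./run.sh")
```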

Model Zoo

Final checkpoints: mIoU (%) on the val split, for pretrained checkpoints with feature dimension 256, 512 and 1024.

| Supervised points | Feature dim 256 | Feature dim 512 | Feature dim 1024 |
|---|---|---|---|
| 20 | 56.2 | 57.0 | 56.3 |
| 50 | 63.3 | 63.6 | 63.7 |
| 100 | 66.5 | 66.8 | 66.5 |
| 200 | 68.4 | 68.5 | 68.4 |
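One way to read the table is to compare how much the feature dimension matters against how much the label budget matters. The numbers below are copied from the table and the helper names are illustrative. At every budget the three feature dimensions lie within 0.8 mIoU points of each other, while going from 20 to 200 supervised points adds roughly 11.5 to 12.2 points.

```python
# mIoU (%) on the val split, copied from the Model Zoo table: {supervised points: {feature dim: mIoU}}.
MIOU = {
    20: {256: 56.2, 512: 57.0, 1024: 56.3},
    50: {256: 63.3, 512: 63.6, 1024: 63.7},
    100: {256: 66.5, 512: 66.8, 1024: 66.5},
    200: {256: 68.4, 512: 68.5, 1024: 68.4},
}


def best_dim_per_budget(table):
    """Feature dimension with the highest mIoU for each label budget."""
    return {shots: max(row, key=row.get) for shots, row in table.items()}


def spread_per_budget(table):
    """Gap between the best and worst feature dimension at each budget."""
    return {shots: max(row.values()) - min(row.values()) for shots, row in table.items()}


def gain_from_labels(table, low=20, high=200):
    """mIoU gained per feature dimension when moving from `low` to `high` supervised points."""
    return {dim: table[high][dim] - table[low][dim] for dim in table[low]}


if __name__ == "__main__":
    print(best_dim_per_budget(MIOU))
    print({k: round(v, 1) for k, v in spread_per_budget(MIOU).items()})
    print({k: round(v, 1) for k, v in gain_from_labels(MIOU).items()})
```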

Acknowledgements

We appreciate the work of ScanNet and SpatioTemporalSegmentation.

We are grateful to Anker Innovations for supporting this project.