TecoGAN-PyTorch

This is a PyTorch reimplementation of TecoGAN: Temporally Coherent GAN for Video Super-Resolution (VSR). Please refer to the official TensorFlow implementation TecoGAN-TensorFlow for more information.

Features

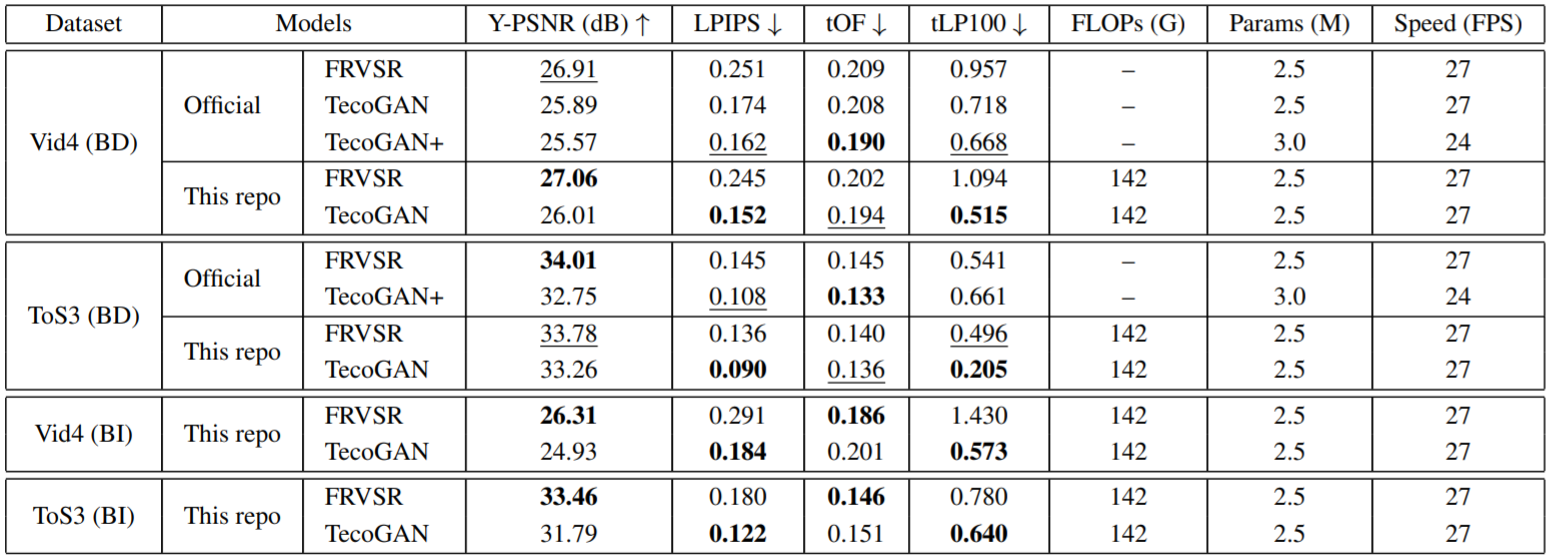

- Better Performance: This repo provides a model that is smaller than the one in the official repo yet performs better. See our Benchmark on the Vid4 and ToS3 datasets.

- Multiple Degradations: This repo supports two types of degradation, i.e., BI & BD. Please refer to this wiki for more details about degradation types.

- Unified Framework: This repo provides a unified framework for distortion-based and perception-based VSR methods.

Dependencies

- Ubuntu >= 16.04

- NVIDIA GPU + CUDA

- Python 3

- PyTorch >= 1.0.0

- Python packages: numpy, matplotlib, opencv-python, pyyaml, lmdb

- (Optional) Matlab >= R2016b

Test

Note: We apply different models according to the degradation type of the data. The following steps are for 4x upsampling with BD degradation. To switch to BI degradation, replace every BD with BI in the commands below.

- Download the official Vid4 and ToS3 datasets.

bash ./scripts/download/download_datasets.sh BD

If the above command doesn't work, you can manually download these datasets from Google Drive and then unzip them under ./data.

- Vid4 Dataset [Ground-Truth Data] [Low Resolution Data (BD)] [Low Resolution Data (BI)]

- ToS3 Dataset [Ground-Truth Data] [Low Resolution Data (BD)] [Low Resolution Data (BI)]

The dataset structure is shown below.

    data
      ├─ Vid4
        ├─ GT                # Ground-Truth (GT) video sequences
          └─ calendar
            ├─ 0001.png
            └─ ...
        ├─ Gaussian4xLR      # Low Resolution (LR) video sequences in BD degradation
          └─ calendar
            ├─ 0001.png
            └─ ...
        └─ Bicubic4xLR       # Low Resolution (LR) video sequences in BI degradation
          └─ calendar
            ├─ 0001.png
            └─ ...
      └─ ToS3
        ├─ GT
        ├─ Gaussian4xLR
        └─ Bicubic4xLR
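
Before running the test scripts, it can help to confirm that the datasets were unpacked into the layout above. The following is a minimal sketch, not part of this repo: `missing_dirs` is a hypothetical helper, and it only assumes the directory names listed in the tree.

```python
from pathlib import Path

# Directory names taken from the dataset layout described in this README.
DATASETS = ("Vid4", "ToS3")
LR_DIRS = {"BD": "Gaussian4xLR", "BI": "Bicubic4xLR"}


def missing_dirs(data_root, degradation="BD"):
    """Return the expected dataset directories that do not exist.

    Hypothetical helper (not shipped with the repo): for each dataset it
    expects a GT folder plus the LR folder matching the degradation type.
    """
    lr_dir = LR_DIRS[degradation]
    expected = [
        Path(data_root) / dataset / sub
        for dataset in DATASETS
        for sub in ("GT", lr_dir)
    ]
    return [path for path in expected if not path.is_dir()]
```

For example, `missing_dirs("./data", "BD")` returns an empty list once both datasets are in place for BD testing.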

- Download our pre-trained TecoGAN model. Note that this model is trained on less data than the official one, since we could only retrieve 212 of the 308 videos in the official training dataset.

bash ./scripts/download/download_models.sh BD TecoGAN

Alternatively, you can download the model manually from [BD degradation] or [BI degradation] and put it under ./pretrained_models.

- Super-resolve the LR videos with TecoGAN. The results will be saved at ./results.

bash ./test.sh BD TecoGAN

- Evaluate the SR results using the official metrics. This code is borrowed from TecoGAN-TensorFlow, with minor modifications to support BI mode.

python ./codes/official_metrics/evaluate.py --model TecoGAN_BD_iter500000

- Check the model statistics (FLOPs, parameters and running speed). You can modify the last argument to specify the video size.

bash ./profile.sh BD TecoGAN 3x134x320
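
As a rough illustration of the size argument, the sketch below parses a string such as `3x134x320` and derives the corresponding 4x output size. It assumes the order is channels x height x width, which matches the RGB 134x320 setting quoted in the Benchmark notes but is not confirmed by the script itself; `parse_size` is a hypothetical helper.

```python
def parse_size(size, scale=4):
    """Parse a 'CxHxW' string and return (lr_shape, sr_shape).

    Assumption: the three fields are channels, height and width, and
    super-resolution multiplies only the spatial dimensions by `scale`.
    """
    fields = size.split("x")
    if len(fields) != 3:
        raise ValueError(f"expected 'CxHxW', got {size!r}")
    c, h, w = (int(v) for v in fields)
    return (c, h, w), (c, h * scale, w * scale)
```

Under these assumptions, `parse_size("3x134x320")` gives an LR shape of `(3, 134, 320)` and an SR shape of `(3, 536, 1280)`.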

Training

- Download the official training dataset following the instructions in TecoGAN-TensorFlow, rename it to VimeoTecoGAN, and then place it under ./data.

- Generate LMDB for the GT data to accelerate IO. The LR counterpart will then be generated on the fly during training.

python ./scripts/create_lmdb.py --dataset VimeoTecoGAN --data_type GT

The following shows the dataset structure after completing the above two steps.

    data
      ├─ VimeoTecoGAN          # Original (raw) dataset
        ├─ scene_2000
          ├─ col_high_0000.png
          ├─ col_high_0001.png
          └─ ...
        ├─ scene_2001
          ├─ col_high_0000.png
          ├─ col_high_0001.png
          └─ ...
        └─ ...
      └─ VimeoTecoGAN.lmdb     # LMDB dataset
        ├─ data.mdb
        ├─ lock.mdb
        └─ meta_info.pkl       # each key has format: [vid]_[total_frame]x[h]x[w]_[i-th_frame]
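
The key format noted for meta_info.pkl can be decoded with a few lines of Python. This is a minimal sketch based only on the `[vid]_[total_frame]x[h]x[w]_[i-th_frame]` pattern above; `FrameKey` and `parse_key` are hypothetical names, and the sample key in the usage note is made up for illustration.

```python
from typing import NamedTuple


class FrameKey(NamedTuple):
    vid: str           # video (scene) name, e.g. "scene_2000"
    total_frames: int  # number of frames in the video
    height: int
    width: int
    frame_idx: int     # index of this frame within the video


def parse_key(key):
    """Split a key of the form [vid]_[total_frame]x[h]x[w]_[i-th_frame]."""
    # Split from the right: the video name may itself contain underscores.
    vid, shape, idx = key.rsplit("_", 2)
    total_frames, height, width = (int(v) for v in shape.split("x"))
    return FrameKey(vid, total_frames, height, width, int(idx))
```

For instance, the illustrative key `scene_2000_120x134x320_0005` would parse to video `scene_2000`, 120 frames of size 134x320, frame index 5.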

- (Optional; needed only for BI degradation) Manually generate the LR sequences with Matlab's imresize function, and then create LMDB for them.

Generate the raw LR video sequences. The results will be saved at ./data/Bicubic4xLR.

matlab -nodesktop -nosplash -r "cd ./scripts; generate_lr_BI"

Create LMDB for the raw LR video sequences.

python ./scripts/create_lmdb.py --dataset VimeoTecoGAN --data_type Bicubic4xLR

- Train an FRVSR model first. FRVSR has the same generator as TecoGAN, but without GAN training. When the training is finished, copy the last checkpoint from ./experiments_BD/FRVSR/001/train/ckpt/G_iter400000.pth to ./pretrained_models/FRVSR_BD_iter400000.pth (note the new file name). This step offers a better initialization for the TecoGAN training.

bash ./train.sh BD FRVSR

Alternatively, you can download and use our pre-trained FRVSR model ([BD degradation], [BI degradation]) instead of training from scratch.

bash ./scripts/download/download_models.sh BD FRVSR
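
If you train FRVSR yourself, the copy-and-rename step can be scripted. The sketch below is not part of this repo: `export_generator` is a hypothetical helper that simply rebuilds the source and destination paths quoted in the FRVSR step, with the degradation, run ID and iteration count as parameters.

```python
import shutil
from pathlib import Path


def export_generator(degradation="BD", model="FRVSR", run="001",
                     n_iter=400000, root="."):
    """Copy the final generator checkpoint into ./pretrained_models.

    Hypothetical helper; the path patterns mirror the ones quoted in this
    README and are assumed to hold for other degradations and run IDs.
    """
    root = Path(root)
    src = (root / f"experiments_{degradation}" / model / run
           / "train" / "ckpt" / f"G_iter{n_iter}.pth")
    dst = root / "pretrained_models" / f"{model}_{degradation}_iter{n_iter}.pth"
    if not src.is_file():
        raise FileNotFoundError(f"checkpoint not found: {src}")
    dst.parent.mkdir(parents=True, exist_ok=True)
    shutil.copy2(src, dst)  # copy rather than move, so the run stays intact
    return dst
```

Calling `export_generator("BD")` from the repo root after training would place the weights at ./pretrained_models/FRVSR_BD_iter400000.pth.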

- Train a TecoGAN model. By default, the training runs in the background and the output is logged to ./experiments_BD/TecoGAN/001/train/train.log.

bash ./train.sh BD TecoGAN

- To monitor the training process and visualize the validation performance, run the following script.

python ./scripts/monitor_training.py --degradation BD --model TecoGAN --dataset Vid4

Note that the validation results are NOT the same as the test results mentioned above, because we use a different implementation of the metrics. The differences are caused by the cropping policy, the LPIPS version and some other issues.

Benchmark

[1] FLOPs & speed are computed on an RGB sequence with resolution 134x320 on an NVIDIA GeForce GTX 1080Ti GPU.

[2] Both FRVSR & TecoGAN use 10 residual blocks, while TecoGAN+ has 16 residual blocks.

License & Citation

If you use this code for your research, please cite the following paper.

@article{tecogan2020,
  title={Learning temporal coherence via self-supervision for GAN-based video generation},
  author={Chu, Mengyu and Xie, You and Mayer, Jonas and Leal-Taix{\'e}, Laura and Thuerey, Nils},
  journal={ACM Transactions on Graphics (TOG)},
  volume={39},
  number={4},
  pages={75--1},
  year={2020},
  publisher={ACM New York, NY, USA}
}

Acknowledgements

This code is built on TecoGAN-TensorFlow, BasicSR and LPIPS. We thank the authors for sharing their code.

If you have any questions, feel free to email [email protected]