PyParSVD: Python Parallel Singular Value Decomposition

Description

The PyParSVD library implements both a serial and a parallel singular value decomposition (SVD). The implementation is:

- Distributed: it uses MPI4Py for the parallel SVD;

- Streaming: data can be supplied in batches to update the left singular vectors;

- Randomized: randomized algorithms further accelerate the serial components of the overall algorithm.
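To illustrate what the randomized component does, here is a minimal NumPy sketch of a randomized SVD in the spirit of (Halko et al 2011): sample the range of the matrix with a random test matrix, orthonormalize, and take the SVD of the small projected matrix. It is not PyParSVD's internal code; the function name `randomized_svd` and the parameters `n_oversamples` and `n_power_iter` are hypothetical choices for this example.

```python
import numpy as np


def randomized_svd(a, k, n_oversamples=10, n_power_iter=2, seed=None):
    """Approximate rank-k SVD of `a` via a Gaussian random range finder."""
    rng = np.random.default_rng(seed)
    n_cols = a.shape[1]
    n_samples = min(k + n_oversamples, n_cols)

    # Sample the range of `a` with a Gaussian test matrix and orthonormalize.
    q, _ = np.linalg.qr(a @ rng.standard_normal((n_cols, n_samples)))

    # Optional power iterations sharpen the basis when singular values decay
    # slowly; re-orthonormalizing at each step limits round-off error.
    for _ in range(n_power_iter):
        z, _ = np.linalg.qr(a.T @ q)
        q, _ = np.linalg.qr(a @ z)

    # SVD of the small projected matrix, then lift back to the original space.
    u_small, s, vt = np.linalg.svd(q.T @ a, full_matrices=False)
    u = q @ u_small
    return u[:, :k], s[:k], vt[:k]


# Usage: an exactly rank-8 matrix is recovered up to round-off.
rng = np.random.default_rng(0)
a = rng.standard_normal((200, 8)) @ rng.standard_normal((8, 50))
u, s, vt = randomized_svd(a, k=8, seed=1)
```

The expensive work is two products with `a` per pass plus a small SVD, which is why this kind of randomization pays off when only a few leading modes are needed.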

The distributed computation of the SVD follows (Wang et al 2016). The streaming algorithm is that of (Levy and Lindenbaum 1998), and the parallel QR algorithm (the TSQR method) required by the streaming feature follows (Benson et al 2013). Finally, the randomized algorithm follows (Halko et al 2011).
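The TSQR idea (Benson et al 2013) can be sketched in serial NumPy by treating each row block as if it lived on a separate rank: factor each block locally, stack the small R factors, factor them once more, and combine. This is an illustrative simulation, not the library's MPI implementation; the function `tsqr` and its `n_blocks` argument are hypothetical.

```python
import numpy as np


def tsqr(a, n_blocks):
    """Tall-and-skinny QR of `a`, simulating `n_blocks` ranks in serial."""
    # Stage 1: each "rank" factors its own block of rows.
    local = [np.linalg.qr(block) for block in np.array_split(a, n_blocks, axis=0)]

    # Stage 2: gather the small R factors and factor the stacked matrix.
    q2, r = np.linalg.qr(np.vstack([r_i for _, r_i in local]))

    # Stage 3: each "rank" multiplies its local Q by its own slice of Q2.
    offsets = np.cumsum([0] + [r_i.shape[0] for _, r_i in local])
    q = np.vstack(
        [q_i @ q2[offsets[i]:offsets[i + 1]] for i, (q_i, _) in enumerate(local)]
    )
    return q, r


rng = np.random.default_rng(0)
a = rng.standard_normal((600, 8))
q, r = tsqr(a, n_blocks=6)
```

Only the small R factors (here 8 x 8 each) need to be communicated, which is what makes TSQR attractive for tall, distributed data.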

The library is organized around a base class, in pyparsvd/parsvd_base.py, that implements the methods shared by the two derived classes, ParSVD_Serial in pyparsvd/parsvd_serial.py and ParSVD_Parallel in pyparsvd/parsvd_parallel.py. The former implements the serial SVD, the latter the parallel SVD. We also provide a module of postprocessing utilities, pyparsvd/postprocessing.py, which can be used as a standalone package or called directly from the derived classes.
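The parallel SVD follows the approximate partitioned method of snapshots of (Wang et al 2016). Its core idea can be simulated in serial NumPy, with each row block standing in for one MPI rank: every rank computes a local SVD and shares only its truncated right singular vectors scaled by their singular values; an SVD of the gathered matrix gives the global singular values; and each rank then recovers its own rows of the left singular vectors. The function name `apmos_svd` and the truncation ranks `r1` (local) and `r2` (global) are illustrative, not the library's API.

```python
import numpy as np


def apmos_svd(blocks, r1, r2):
    """Approximate SVD of the row-stacked `blocks` (one block per simulated rank)."""
    # Local step: each rank keeps r1 right singular vectors scaled by singular values.
    w_local = []
    for a_i in blocks:
        _, s_i, vt_i = np.linalg.svd(a_i, full_matrices=False)
        w_local.append(vt_i[:r1].T * s_i[:r1])

    # Global step: an SVD of the gathered matrix gives approximate singular
    # values (and right singular vectors x) of the full data matrix.
    x, lam, _ = np.linalg.svd(np.hstack(w_local), full_matrices=False)
    x, lam = x[:, :r2], lam[:r2]

    # Back on each rank: its rows of the left singular vectors, U_i = A_i X / Lambda.
    u_blocks = [a_i @ x / lam for a_i in blocks]
    return u_blocks, lam


rng = np.random.default_rng(0)
a = rng.standard_normal((120, 5)) @ rng.standard_normal((5, 30))
u_blocks, lam = apmos_svd(np.array_split(a, 6, axis=0), r1=5, r2=5)
u = np.vstack(u_blocks)
```

In this toy case every block has rank at most `r1`, so the result matches a direct SVD; with real data the local truncation makes it an approximation.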

A simple case can be run by following the tutorial in tutorials/basic/, where data for the 1D Burgers' equation are generated from pre-stored data by a pre-written routine, tutorials/basic/data/data_splitter.py. The data generated by data_splitter.py are already split across the different distributed ranks. To run this simple case, first run the data generation routine in tutorials/basic/data/ as follows:

python3 data_splitter.py
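For orientation, per-rank batch files like the ones loaded in the parallel tutorial (named points_rank_<rank>_batch_<batch>.npy) could be produced by a splitter such as the following. This is a hypothetical sketch, not the contents of data_splitter.py: it assumes the data matrix stores spatial points along rows and snapshots along columns, so each rank owns a block of rows and each batch is a block of columns.

```python
import os

import numpy as np


def split_and_save(data, n_ranks, n_batches, out_dir):
    """Write one .npy file per (rank, batch) pair and return the written paths."""
    paths = []
    # Rows (spatial points) are distributed across ranks ...
    for rank, rows in enumerate(np.array_split(data, n_ranks, axis=0)):
        # ... and columns (snapshots) are grouped into streaming batches.
        for batch, chunk in enumerate(np.array_split(rows, n_batches, axis=1)):
            path = os.path.join(out_dir, f"points_rank_{rank}_batch_{batch}.npy")
            np.save(path, chunk)
            paths.append(path)
    return paths
```

For example, `split_and_save(data, n_ranks=6, n_batches=4, out_dir=".")` writes 24 files, one per rank and batch, matching the 6 processes launched with `mpirun -np 6`.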

Then go to tutorials/basic/ and make sure that NumPy does not use shared-memory (multithreaded) acceleration:

export OPENBLAS_NUM_THREADS=1
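If you prefer to set this from inside a Python script, the variable must be set before NumPy is first imported, because OpenBLAS reads it when the library is loaded. A minimal sketch; MKL_NUM_THREADS is the analogous variable for NumPy builds linked against MKL:

```python
import os

# Must run before the first `import numpy` anywhere in the process.
os.environ["OPENBLAS_NUM_THREADS"] = "1"
os.environ["MKL_NUM_THREADS"] = "1"  # only relevant for MKL-backed NumPy builds

import numpy as np  # noqa: E402  (imported after setting the environment)
```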

You can then run the serial version of the streaming SVD with

python3 tutorial_basic_serial.py

and a parallel version of the same using

mpirun -np 6 python3 tutorial_basic_parallel.py

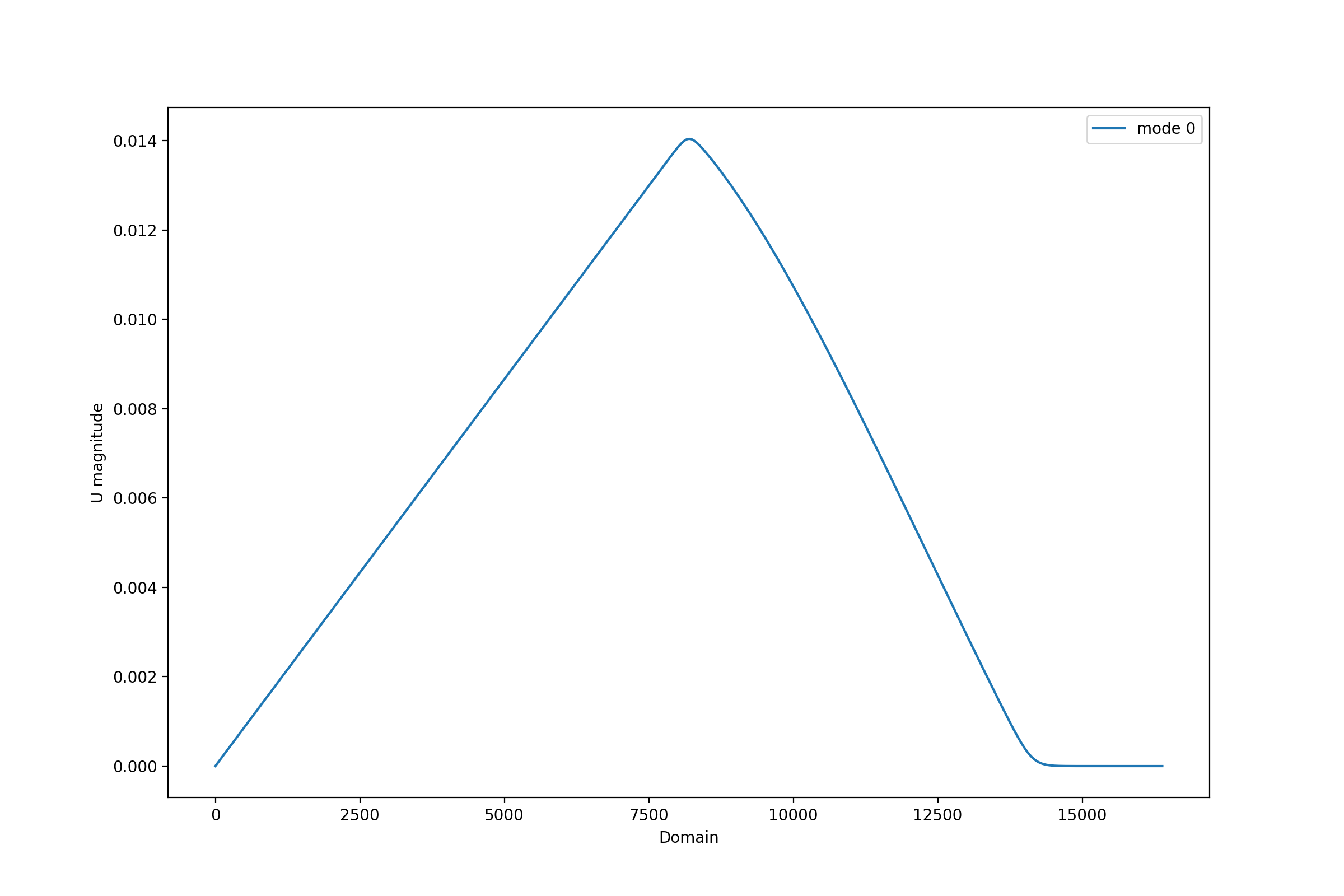

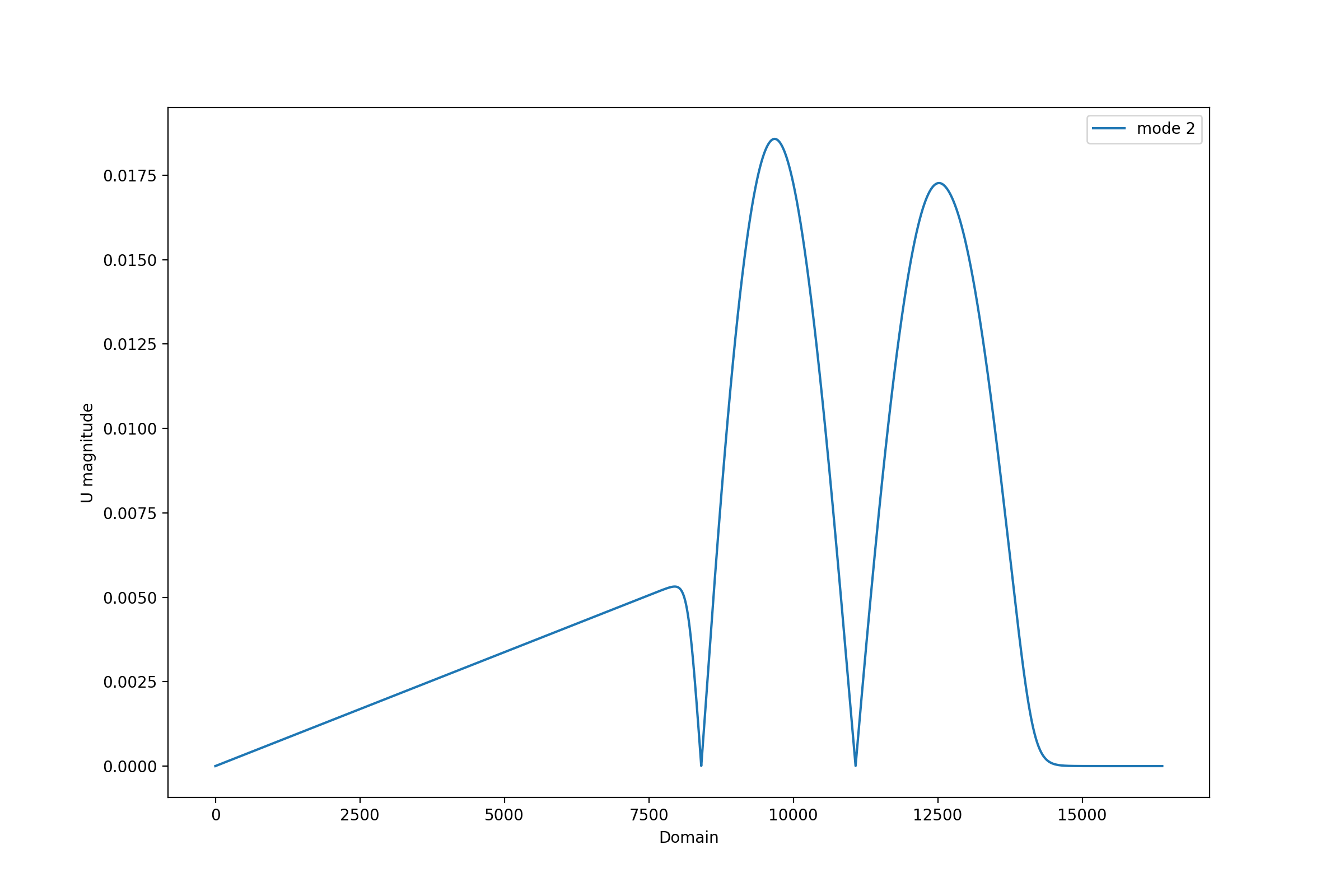

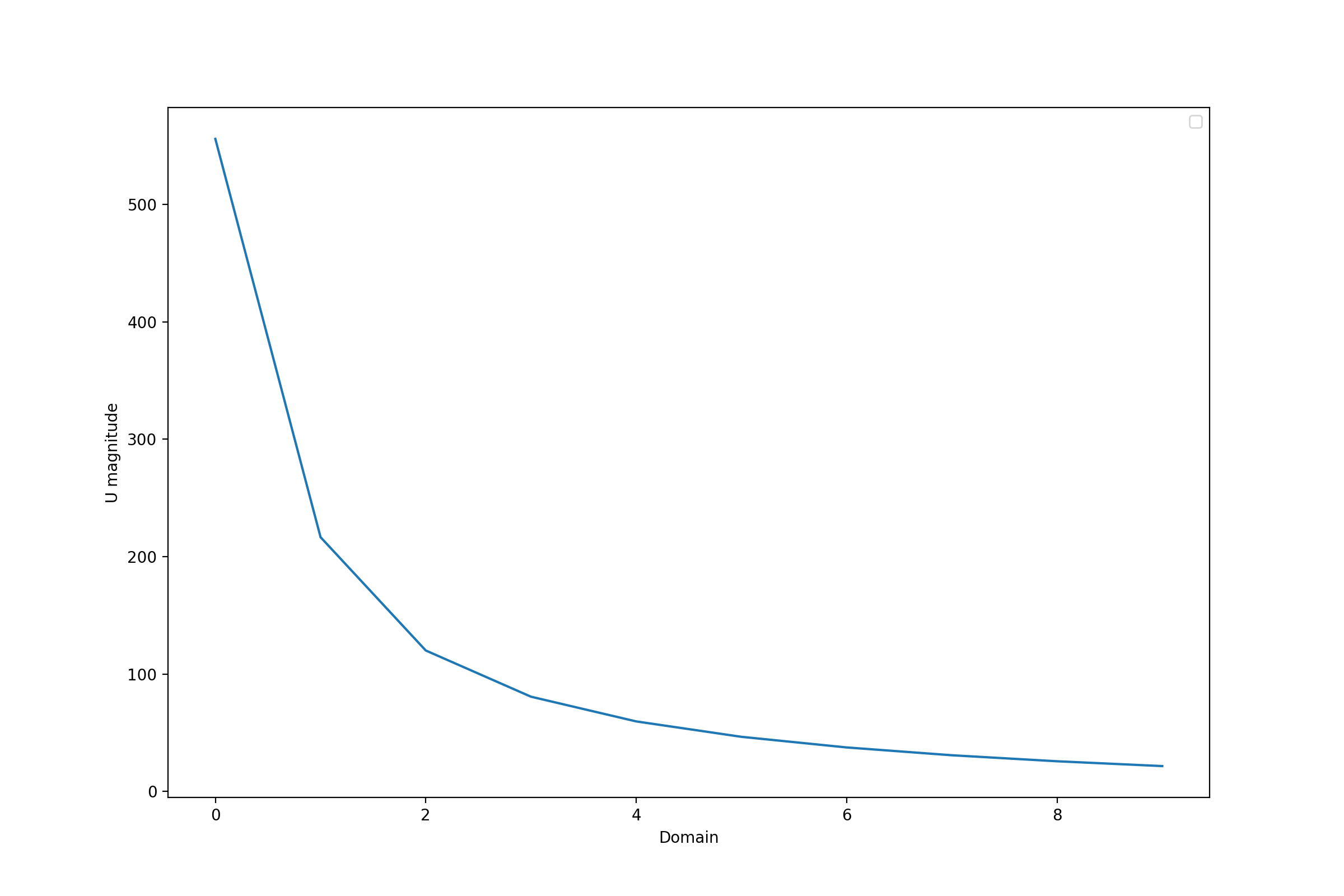

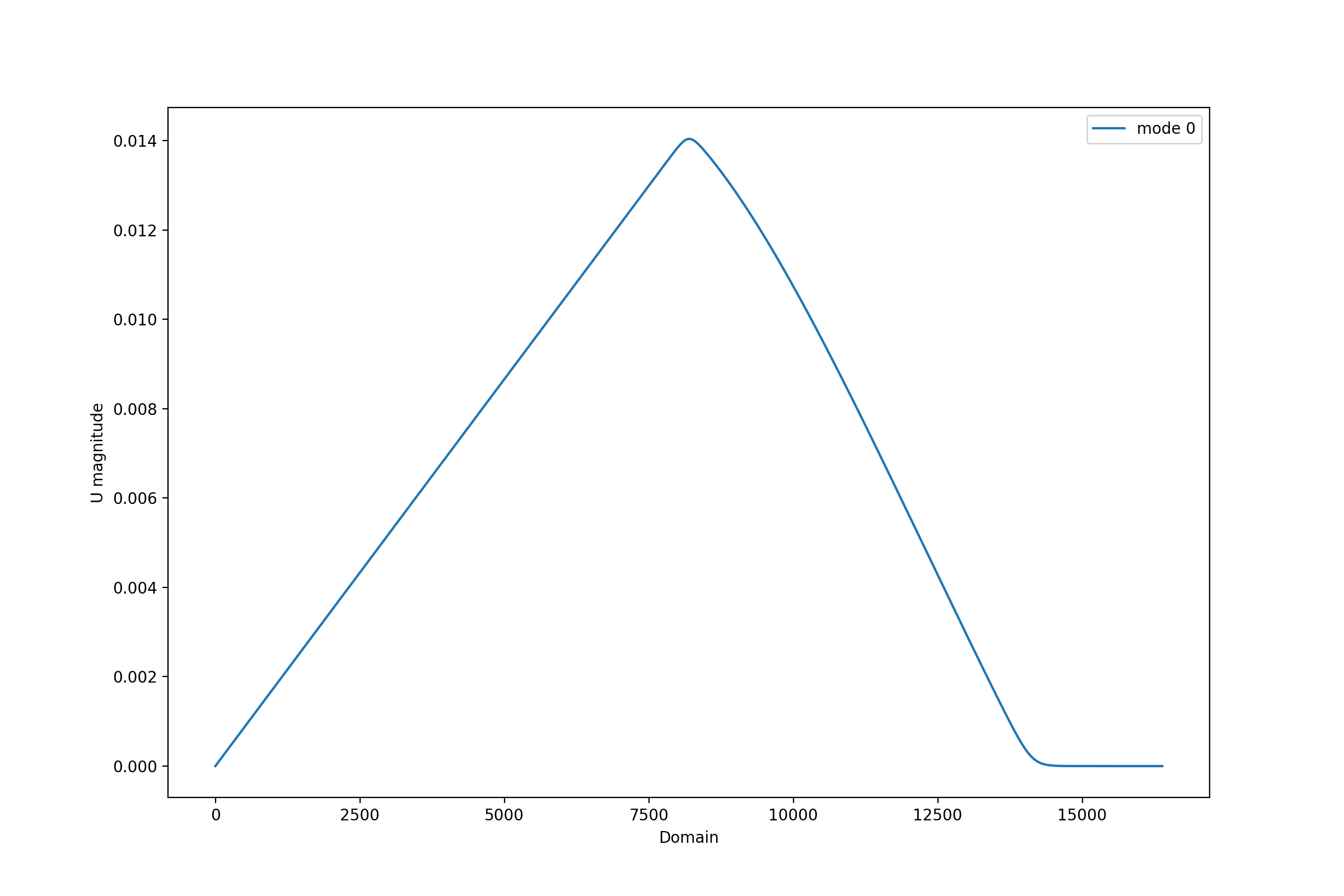

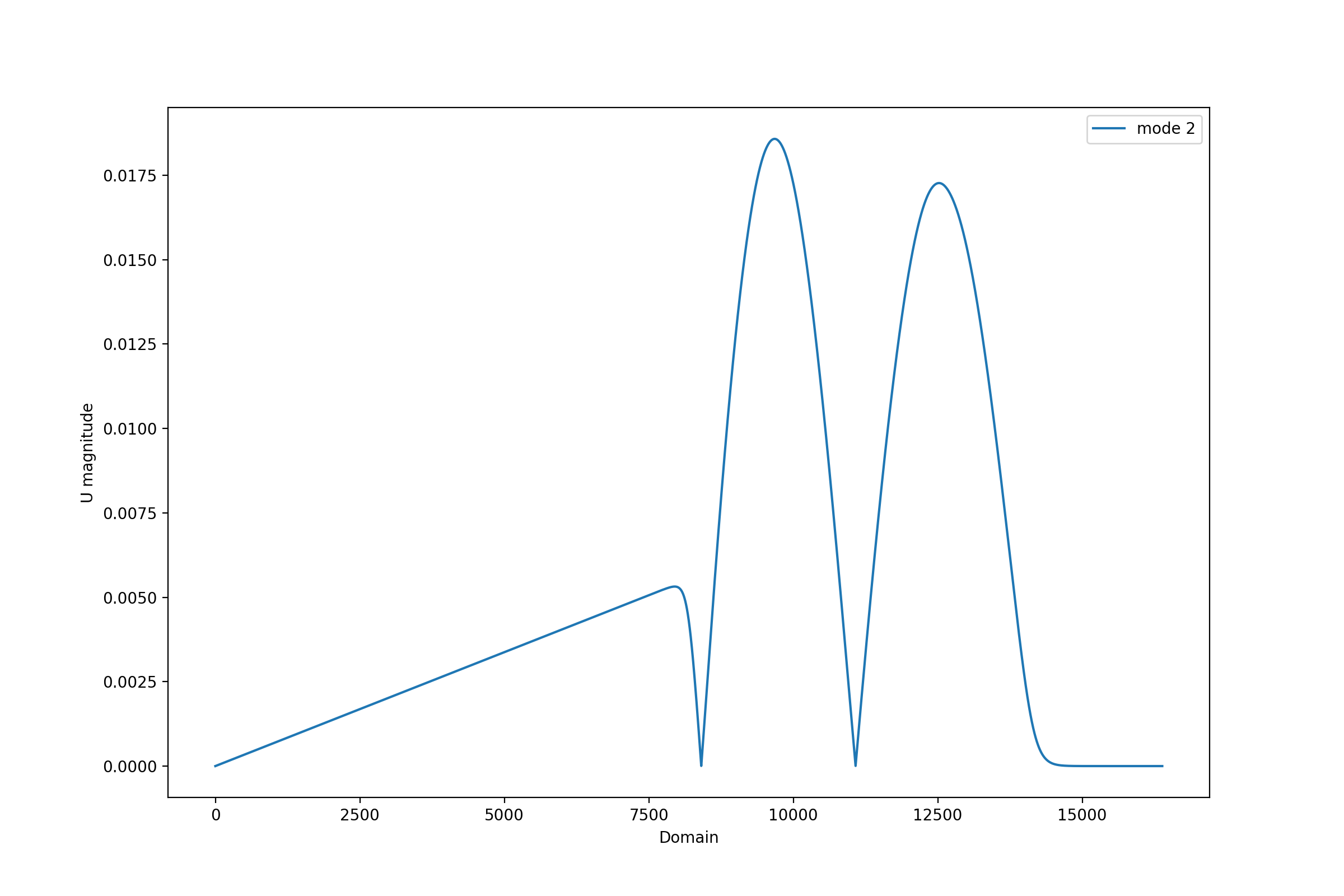

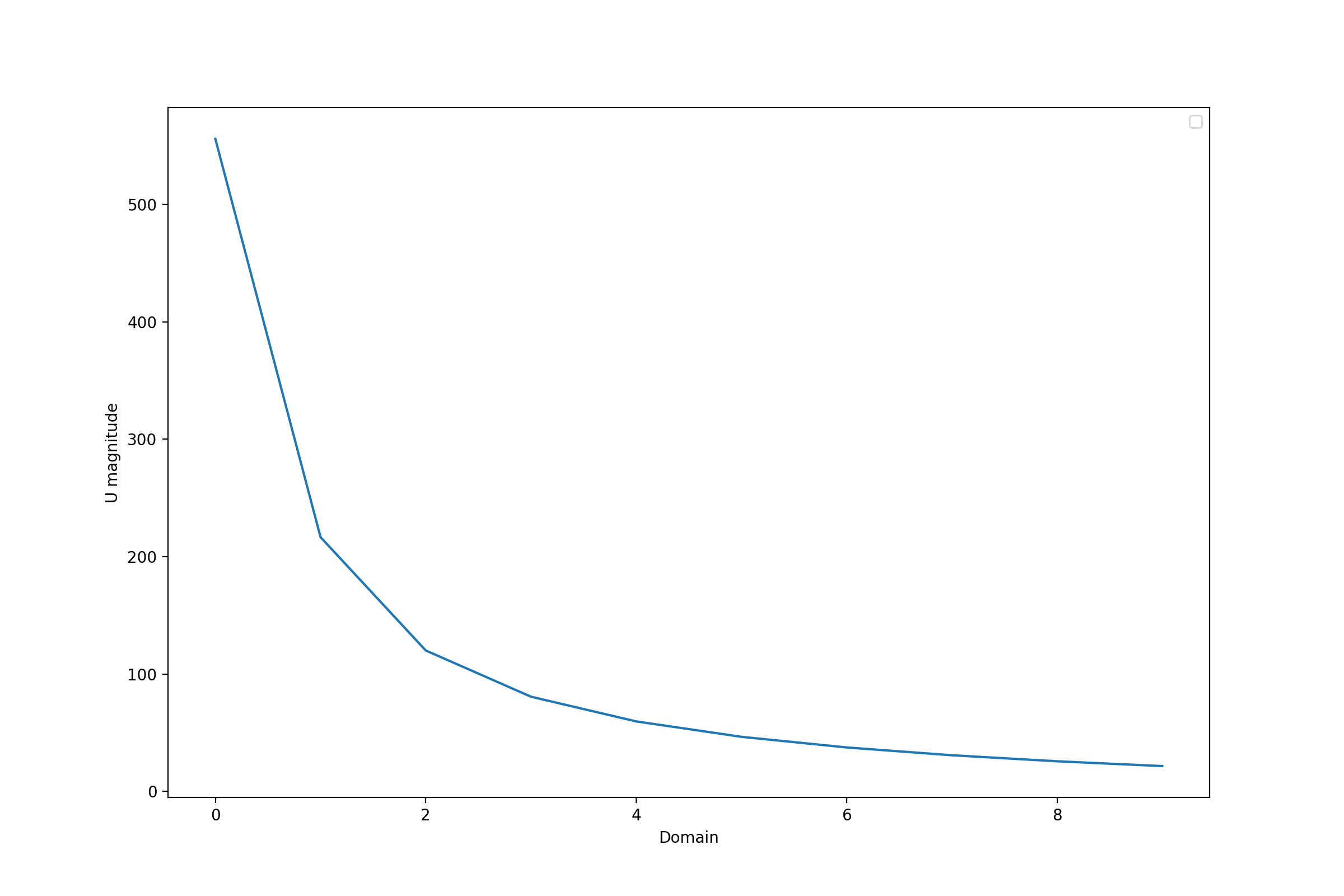

This should produce a set of figures, under a folder called results similar to the ones below

Serial

Parallel

Caution: due to differences between the parallel and serial versions of the algorithm, singular vectors may be "flipped", i.e. differ in sign (multiplied by -1). Both signs are equally valid singular vectors.
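Since a singular vector u and -u are equally valid, serial and parallel modes can be compared after aligning their signs. A minimal NumPy sketch, assuming both sets of modes are stored as columns of arrays with the same shape; `align_signs` is a hypothetical helper, not part of PyParSVD:

```python
import numpy as np


def align_signs(reference, modes):
    """Flip columns of `modes` whose inner product with `reference` is negative."""
    signs = np.sign(np.sum(reference * modes, axis=0))
    signs[signs == 0] = 1.0  # leave orthogonal (or zero) columns unchanged
    return modes * signs


rng = np.random.default_rng(0)
reference, _ = np.linalg.qr(rng.standard_normal((100, 4)))
flipped = reference * np.array([1.0, -1.0, 1.0, -1.0])
aligned = align_signs(reference, flipped)
```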

An orthogonality check of the computed modes is also included as an additional sanity check.
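One simple form of such a check measures how far the Gram matrix of the modes is from the identity. A sketch, assuming the modes are the columns of a NumPy array; `orthogonality_error` is a hypothetical name:

```python
import numpy as np


def orthogonality_error(modes):
    """Largest entry of |U^T U - I| for modes stored as the columns of U."""
    k = modes.shape[1]
    return np.max(np.abs(modes.T @ modes - np.eye(k)))


rng = np.random.default_rng(0)
q, _ = np.linalg.qr(rng.standard_normal((100, 10)))
error = orthogonality_error(q)  # expected to be at round-off level
```

A value near machine precision indicates orthonormal modes; a large value flags a problem in the decomposition or in how the distributed pieces were assembled.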

The main components of the tutorial scripts are as follows:

- import of the libraries

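import os
import sys

import numpy as np

# Directory containing this script
CFD = os.path.abspath(os.path.dirname(__file__))

# Import library-specific modules (the repository root is two levels up)
sys.path.append(os.path.join(CFD, "../../"))
from pyparsvd.parsvd_serial import ParSVD_Serial
from pyparsvd.parsvd_parallel import ParSVD_Parallel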

- instantiation of the serial and parallel SVD objects, respectively:

# Construct SVD objects
SerSVD = ParSVD_Serial(K=10, ff=1.0)
ParSVD = ParSVD_Parallel(K=10, ff=1.0, low_rank=True)

Here K is the number of modes retained after truncation, ff is the forget factor (ff=1.0 weights all batches equally, while smaller values progressively discount older data), and low_rank=True enables the randomized SVD.
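The roles of K and ff can be seen in a serial sketch of the streaming update of (Levy and Lindenbaum 1998): the current basis, scaled by its singular values and by the forget factor, is stacked next to the new batch; the result is QR-factorized; and a small SVD of the R factor gives the updated basis, truncated to K modes. This is an illustration under those assumptions, not PyParSVD's code; `streaming_svd` is a hypothetical name, and the randomized (low_rank) option is not modelled.

```python
import numpy as np


def streaming_svd(batches, k, ff=1.0):
    """Left singular vectors and singular values, updated batch by batch."""
    batches = iter(batches)
    # Initialize from a truncated SVD of the first batch.
    u, s, _ = np.linalg.svd(next(batches), full_matrices=False)
    u, s = u[:, :k], s[:k]
    for new in batches:
        # Down-weight the existing basis by ff and append the new snapshots.
        q, r = np.linalg.qr(np.hstack([ff * u * s, new]))
        # A small SVD of R updates the basis; keep only the k leading modes.
        u_r, s, _ = np.linalg.svd(r)
        u, s = (q @ u_r)[:, :k], s[:k]
    return u, s


rng = np.random.default_rng(1)
data = rng.standard_normal((100, 4)) @ rng.standard_normal((4, 40))  # rank-4 data
u, s = streaming_svd(np.split(data, 4, axis=1), k=6, ff=1.0)
```

With ff=1.0 and data whose rank does not exceed K, the streamed singular values match those of the full matrix; the QR step is the part that the parallel version distributes with TSQR.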

- reading of the data for both the serial and parallel SVD computations

# Path to data
path = os.path.join(CFD, '../../tests/data/')

# Serial data
initial_data_ser = np.load(os.path.join(path, 'Batch_0_data.npy'))
new_data_ser = np.load(os.path.join(path, 'Batch_1_data.npy'))
newer_data_ser = np.load(os.path.join(path, 'Batch_2_data.npy'))
newest_data_ser = np.load(os.path.join(path, 'Batch_3_data.npy'))

# Parallel data (each rank loads its own portion)
initial_data_par = np.load(os.path.join(path, f'points_rank_{ParSVD.rank}_batch_0.npy'))
new_data_par = np.load(os.path.join(path, f'points_rank_{ParSVD.rank}_batch_1.npy'))
newer_data_par = np.load(os.path.join(path, f'points_rank_{ParSVD.rank}_batch_2.npy'))
newest_data_par = np.load(os.path.join(path, f'points_rank_{ParSVD.rank}_batch_3.npy'))

- serial SVD computation

# Do first modal decomposition -- Serial
SerSVD.initialize(initial_data_ser)

# Incorporate new data -- Serial
SerSVD.incorporate_data(new_data_ser)
SerSVD.incorporate_data(newer_data_ser)
SerSVD.incorporate_data(newest_data_ser)

- parallel SVD computation

# Do first modal decomposition -- Parallel
ParSVD.initialize(initial_data_par)

# Incorporate new data -- Parallel
ParSVD.incorporate_data(new_data_par)
ParSVD.incorporate_data(newer_data_par)
ParSVD.incorporate_data(newest_data_par)

- basic postprocessing where we plot the results

# Basic postprocessing (only on rank 0)
if ParSVD.rank == 0:
    # Save results
    SerSVD.save()
    ParSVD.save()

    # Visualize modes
    SerSVD.plot_1D_modes(filename='serial_1d_modes.png')
    ParSVD.plot_1D_modes(filename='parallel_1d_modes.png')

Parallel IO

PyParSVD also provides parallel-IO capability through h5py and parallel HDF5. Make sure the required libraries are available; the easiest way is to use conda, as follows (installing them otherwise can be tricky):

conda install -c conda-forge "h5py>=2.9=mpi*"

Once this step is complete, you can run the parallel-IO tutorial. First, from tutorials/parallel_io/data/, generate the data with

python data_splitter.py

and then, from tutorials/parallel_io/, execute

mpirun -np 6 python tutorial_parallel_io.py

This example assumes that your data are stored in HDF5 (.h5) format.
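With parallel HDF5, each rank typically reads only its own block of rows from the shared file. A minimal sketch with h5py, assuming the data are stored in a single dataset with points along the first axis; `rank_row_range`, `read_rank_block`, and the dataset name passed to it are hypothetical, not the tutorial's code. Passing an mpi4py communicator opens the file through the MPI-IO driver, which requires the MPI-enabled h5py build installed above.

```python
import numpy as np


def rank_row_range(n_rows, rank, n_ranks):
    """Contiguous [start, stop) row range for `rank`, balanced like np.array_split."""
    base, extra = divmod(n_rows, n_ranks)
    start = rank * base + min(rank, extra)
    stop = start + base + (1 if rank < extra else 0)
    return start, stop


def read_rank_block(filename, dataset, rank, n_ranks, comm=None):
    """Read only this rank's rows of `dataset` from an HDF5 file."""
    import h5py  # imported lazily so the partitioning helper works without h5py

    # With an mpi4py communicator, open the file through the MPI-IO driver.
    kwargs = {"driver": "mpio", "comm": comm} if comm is not None else {}
    with h5py.File(filename, "r", **kwargs) as f:
        dset = f[dataset]
        start, stop = rank_row_range(dset.shape[0], rank, n_ranks)
        return np.asarray(dset[start:stop])  # reads just this hyperslab
```

Under mpirun, each process would call it with `comm = MPI.COMM_WORLD` from mpi4py, passing `comm.Get_rank()` and `comm.Get_size()` as `rank` and `n_ranks`.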

Testing

Regression tests are run with Travis CI, a continuous integration service.

You can check the current build status of PyParSVD on Travis CI.

If you want to run the tests locally, you can do so with:

cd tests/

mpirun -np 6 python3 -m pytest --with-mpi -v

References

(Levy and Lindenbaum 1998)

Sequential Karhunen–Loeve basis extraction and its application to images.

(Wang et al 2016)

Approximate partitioned method of snapshots for POD.

(Benson et al 2013)

Direct QR factorizations for tall-and-skinny matrices in MapReduce architectures.

(Halko et al 2011)

Finding structure with randomness: Probabilistic algorithms for constructing approximate matrix decompositions.

How to contribute

Contributions improving code and documentation, as well as suggestions about new features, are more than welcome!

The guidelines to contribute are as follows:

- open a new issue describing the bug you intend to fix or the feature you want to add.

- fork the project and open your own branch related to the issue you just opened. Call the branch fix/name-of-the-issue if it is a bug fix, or feature/name-of-the-issue if you are adding a feature.

- use 4 spaces for indentation in the code.

- if you add a feature, accompany it with relevant tests to ensure it keeps functioning correctly as the code continues to be developed.

- commit your changes with a self-explanatory commit message.

- push your commits and submit a pull request. Please remember to rebase properly to maintain a clean, linear git history.

License

See the LICENSE file for license rights and limitations (MIT).