Thompson-Greedy-Comparison-for-MultiArmed-Bandits

Repository Containing Comparison of two methods for dealing with Exploration-Exploitation dilemma for MultiArmed Bandits

In ths repo I implemented two techniques for tackling mentioned tradeoff. Methods Include:-

- Epsilon Greedy (With different epsilons)

- Thompson Sampling(also known as posterior sampling)

The reason for choosing these two only is to show the upper and lower bounds as epsilons are a starting point in dealing with these tradeoffs and Thompson Sampling is considered a recent state of the Art in this field.

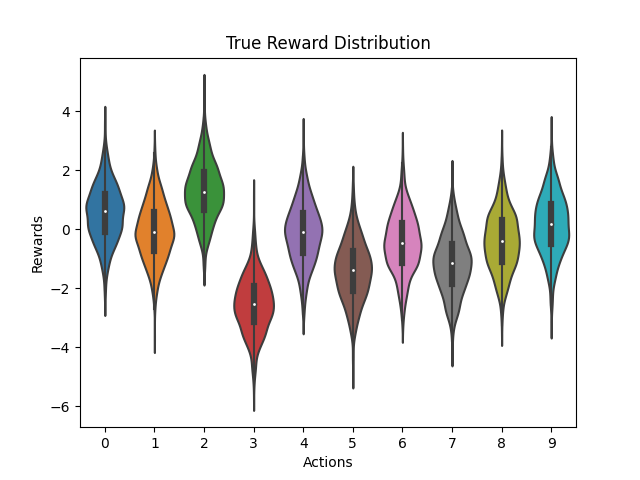

ENV SPECIFICATIONS - A 10 arm testbed is simulated as same demonstrated in Sutton-Barto Book.

True Reward distribution (Here Action-2 is best)

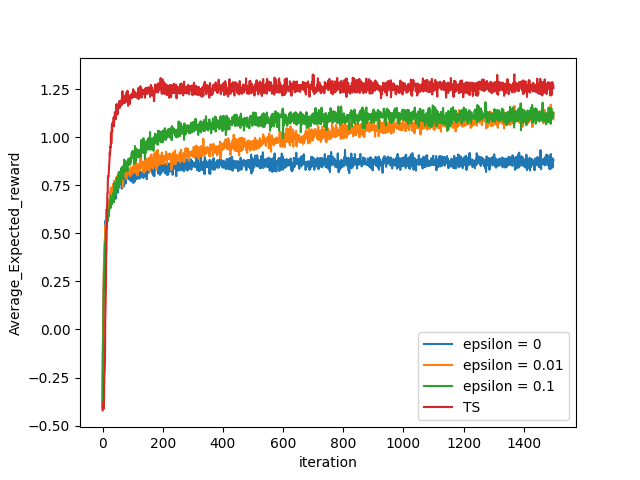

Comparison Greedy(or Epsilon Greedies and TS

we used three different epsilons here for testing i.e:

- epsilon = 0 => Greedy Agent

- epsilon = 0.01 => exploration with 1% probability

- epsilon = 0.1 => exploration with 10% probability

and TS

Averaged Over 2500 independent runs with 1500 timesteps

Comparison

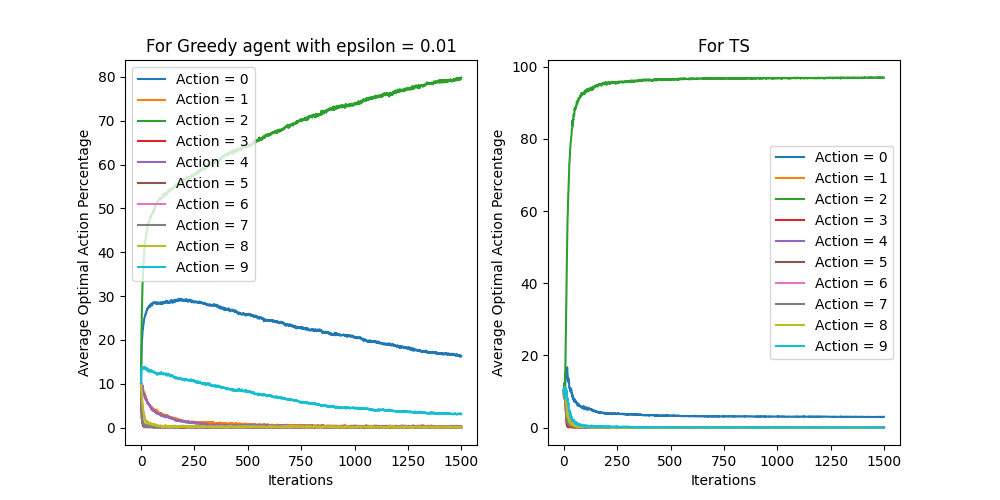

Percentage Actions selected for epsilon = 0.01 and TS

Conclusion -> epsilon = 0.01 can be considered best for eps-greedies as it is increasing but pretty slow and the percentage Optimal Actions for it is Around 80% in later stages, on the other hand Thomsan Sampling shows a significant improvement in these results as it quickly explores and then exploit the optimal one with percentage goes upto almost 100 even very early!!.

In case you want to know more about TS visit this Reference.

GitHub

https://github.com/Amshra267/Thompson-Greedy-Comparison-for-MultiArmed-Bandits